Optimal Blog

Articles and Podcasts on Customer Service, AI and Automation, Product, and more

At Optimal, we know the reality of user research: you've just wrapped up a fantastic interview session, your head is buzzing with insights, and then... you're staring at hours of video footage that somehow needs to become actionable recommendations for your team.

User interviews and usability sessions are treasure troves of insight, but reviewing hours of raw footage is time-consuming and tedious, and it's easy to overlook important details. Too often, valuable user stories never make it past the recording stage.

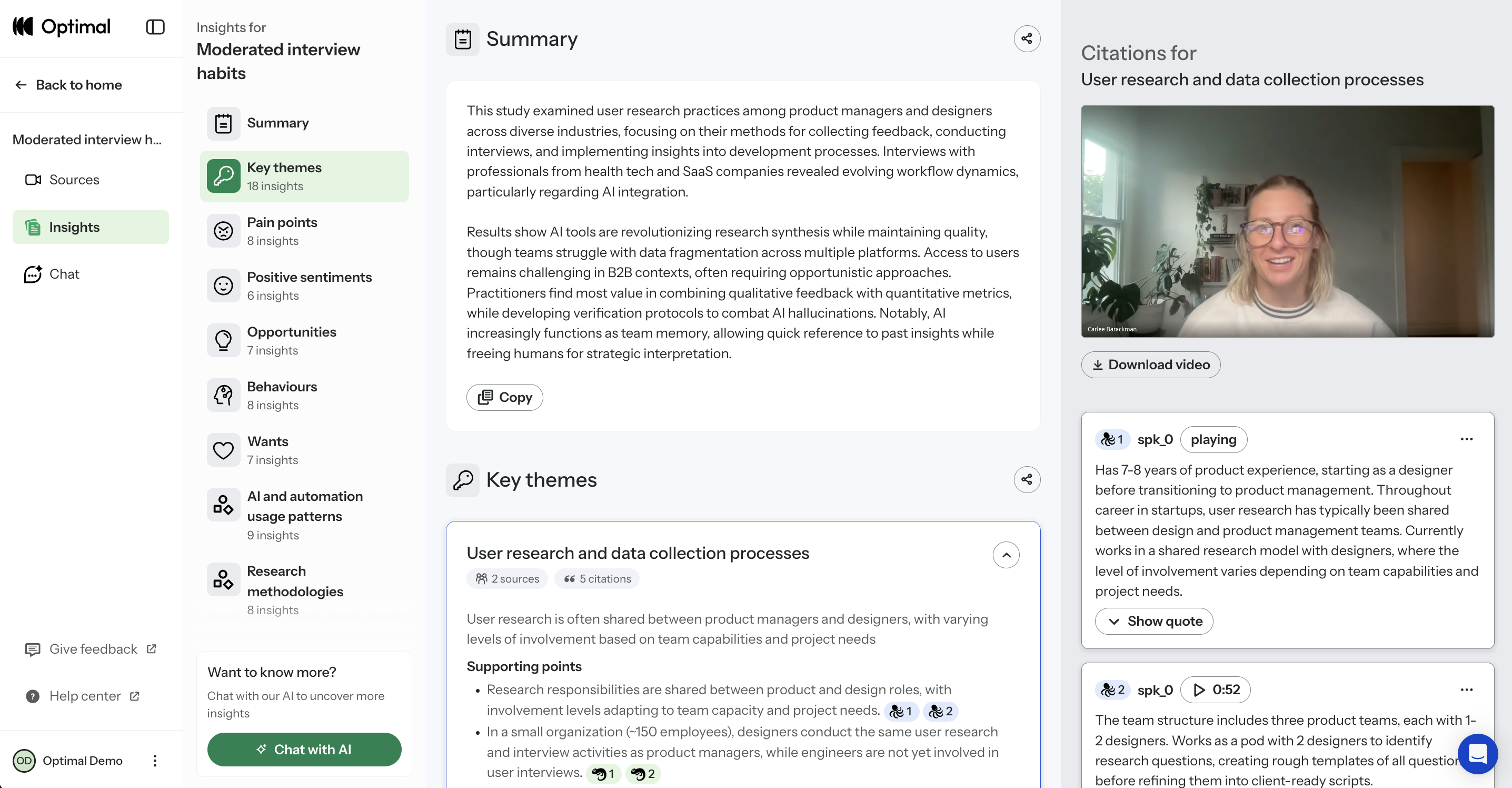

That's why we’re excited to announce the launch of early access for Interviews, a brand-new tool that saves you time with AI and automation, turns real user moments into actionable recommendations, and provides the evidence you need to shape decisions, bring stakeholders on board, and inspire action.

Interviews, Reimagined

What once took hours of video review now takes minutes. With Interviews, you get:

- Instant clarity: Upload your interviews and let AI automatically surface key themes, pain points, opportunities, and other key insights.

- Deeper exploration: Ask follow-up questions, or anything else you want to know, with AI chat. Every insight comes with supporting video evidence, so you can back up recommendations with real user feedback.

- Automatic highlight reels: Generate clips and compilations that spotlight the takeaways that matter.

- Real user voices: Turn insight into impact with user feedback clips and videos. Share insights and download clips to drive product and stakeholder decisions.

Groundbreaking AI at Your Service

This tool is powered by AI designed for researchers, product owners, and designers. This isn’t just transcription or summarization; it’s intelligence tailored to surface the insights that matter most. It’s like having a personal AI research assistant, accelerating analysis and automating your workflow without compromising quality. No more endless footage scrolling.

The AI used for Interviews, like all other AI in Optimal, is backed by Amazon Bedrock on AWS, ensuring that your AI insights are supported with industry-leading protection and compliance.

What’s Next: The Future of Moderated Interviews in Optimal

This new tool is just the beginning. Soon, you’ll be able to manage the entire moderated interview process inside Optimal, from recruitment to scheduling to analysis and sharing.

Here’s what’s coming:

- Recruit users using Optimal’s managed recruitment services.

- View your scheduled sessions directly within Optimal. Link up with your own calendar.

- Connect seamlessly with Zoom, Google Meet, or Teams.

Imagine running your full end-to-end interview workflow, all in one platform. That’s where we’re heading, and Interviews is our first step.

Ready to Explore?

Interviews is available now for our latest Optimal plans with study limits. Start transforming your footage into minutes of clarity and bring your users’ voices to the center of every decision. We can’t wait to see what you uncover.

Want to learn more and see it in action? Join us for our upcoming webinar on Oct 21st at 12 PM PST.


Behind the scenes of UX work on Trade Me's CRM system

We love getting stuck into scary, hairy problems to make things better here at Trade Me. One challenge for us in particular is how best to navigate customer reaction to any change we make to the site, the app, the terms and conditions, and so on. Our customers are passionate both about the service we provide — an online auction and marketplace — and its place in their lives, and are rightly forthcoming when they're displeased or frustrated. We therefore rely on our Customer Service (CS) team to give customers a voice, and to respond with patience and skill to customer problems ranging from incorrectly listed items to reports of abusive behavior.

The CS team uses a Customer Relationship Management (CRM) system, Trade Me Admin, to monitor support requests and manage customer accounts. As the spectrum of Trade Me's services and the complexity of the public website have grown rapidly, the CRM system has, to be blunt, been updated in ways which have not always been the prettiest. Links for new tools and reports have simply been added to existing pages, and old tools for services we no longer operate have not always been removed. Thus, our latest focus has been to improve the user experience of the CRM system for our CS team.

And though on the surface it looks like we're working on a product with only 90 internal users, our changes will have flow-on effects for tens of thousands of our members at any given time (from a total number of around 3.6 million members).

The challenges of designing customer service systems

We face unique challenges designing customer service systems. Robert Schumacher from GfK summarizes these problems well. I’ve paraphrased him here and added an issue of my own:

1. Customer service centres are high volume environments — Our CS team has thousands of customer interactions every day, and each team member travels similar paths in the CRM system.

2. Wrong turns are amplified — With so many similar interactions, a system change that adds a minute more to processing customer queries could slow down the whole team and result in delays for customers.

3. Two people relying on the same system — When the CS team takes a phone call from a customer, the CRM system is serving both people: the CS person who is interacting with it, and the caller who directs the interaction. Trouble is, the caller can't see the paths the system is forcing the CS person to take. For example, in a previous job a client’s CS team would always ask callers two or three extra security questions — not to confirm identities, but to cover up the delay between answering the call and the right page loading in the system.

4. Desktop clutter — As a result of the plethora of tools and reports and systems, the desktop of the average CS team member is crowded with open windows and tabs. They have to remember where things are and also how to interact with the different tools and reports, all of which may have been created independently (i.e. work differently). This presents quite the cognitive load.

5. CS team members are expert users — They use the system every day, and will all have their own techniques for interacting with it quickly and accurately. They've also probably come up with their own solutions to system problems, which they might be very comfortable with. As Schumacher says, 'A critical mistake is to discount the expert and design for the novice. In contact centers, novices become experts very quickly.'

6. Co-design is risky — Co-design workshops, where the users become the designers, are all the rage, and are usually pretty effective at getting great ideas quickly into systems. But expert users almost always end up regurgitating the system they're familiar with, as they've been trained by repeated use of systems to think in fixed ways.

7. Training is expensive — Complex systems require more training, so if your call centre has high churn (ours doesn’t – most staff stick around for years) then you’ll be spending a lot of money.

…and the one I’ve added:

8. Powerful does not mean easy to learn — The ‘it must be easy to use and intuitive’ design rationale is often the cause of badly designed CRM systems. Designers mistakenly design something simple when they should be designing something powerful. Powerful is complicated, dense, and often less easy to learn, but once mastered lets staff really motor.

Our project focus

Our improvement of Trade Me Admin is focused on fixing the shattered IA and restructuring the key pages to make them perform even better, bringing them into a new code framework. We're not redesigning the reports, tools, code or even the interaction for most of the reports, as this will be many years of effort. Watching our own staff use Trade Me Admin is like watching someone juggling six or seven things.

The system requires them to visit multiple pages, hold multiple facts in their head, pattern and problem-match across those pages, and follow their professional intuition to get to the heart of a problem. Where the system works well is on some key, densely detailed hub pages. Where it works badly, staff have to navigate click farms with arbitrary link names, have to type across the URL to get to hidden reports, and generally expend more effort on finding the answer than on comprehending the answer.

Groundwork

The first thing that we did was to sit with CS and watch them work and get to know the common actions they perform. The random nature of the IA and the plethora of dead links and superseded reports became apparent. We surveyed teams, providing them with screen printouts and three highlighter pens to colour things as green (use heaps), orange (use sometimes) and red (never use). From this, we were able to immediately remove a lot of noise from the new IA. We also saw that specific teams used certain links but that everyone used a core set. Initially focussing on the core set, we set about understanding the tasks under those links.
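The highlighter survey described above boils down to a simple tally per link. Here is a minimal sketch of how such a tally might be computed, assuming each printout is recorded as a mapping from link name to colour. The function names and all of the data are hypothetical and not taken from the actual project; only 'SQL Lookup' is a real label mentioned in this post.

```python
from collections import Counter

# Hypothetical survey sheets: each respondent marks every link as
# green (use heaps), orange (use sometimes) or red (never use).
responses = [
    {"Member search": "green", "SQL Lookup": "orange", "Old auction report": "red"},
    {"Member search": "green", "SQL Lookup": "green", "Old auction report": "red"},
    {"Member search": "green", "SQL Lookup": "red", "Old auction report": "red"},
]


def tally(sheets):
    """Count how many respondents chose each colour for each link."""
    counts = {}
    for sheet in sheets:
        for link, colour in sheet.items():
            counts.setdefault(link, Counter())[colour] += 1
    return counts


def removal_candidates(counts):
    """Links that every respondent marked red (never use)."""
    return sorted(
        link for link, c in counts.items() if c["red"] == sum(c.values())
    )


def core_links(counts):
    """Links that every respondent marked green (use heaps)."""
    return sorted(
        link for link, c in counts.items() if c["green"] == sum(c.values())
    )


counts = tally(responses)
print("Remove:", removal_candidates(counts))
print("Core set:", core_links(counts))
```

In practice the thresholds would probably be softer than "everyone agreed", and the tally would be split by team to show which links only specific teams rely on.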

The complexity of the job soon became apparent – with a complex system like Trade Me Admin, it is possible to do the same thing in many different ways. Most CRM systems are complex and detailed enough for there to be more than one way to achieve the same end and often, it’s not possible to get a definitive answer, only possible to ‘build a picture’. There’s no one-to-one mapping of task to link. Links were also often arbitrarily named: ‘SQL Lookup’ being an example. The highly-trained user base are dependent on muscle memory in finding these links. This meant that when asked something like: “What and where is the policing enquiry function?”, many couldn’t tell us what or where it was, but when they needed the report it contained they found it straight away.

Sort of difficult

Therefore, it came as little surprise that staff found the subsequent card sort task quite hard. We renamed the links to better describe their associated actions, and of course, they weren't in the same location as in Trade Me Admin. So instead of taking the predicted 20 minutes, the sort was taking upwards of 40 minutes. Not great when staff are supposed to be answering customer enquiries!

We noticed some strong trends in the results, with links clustering around some of the key pages and tasks (like 'member', 'listing', 'review member financials', and so on). The results also confirmed something that we had observed — that there is a strong split between two types of information: emails/tickets/notes and member info/listing info/reports.
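One common way to spot this kind of clustering in card sort results is to count how often each pair of cards lands in the same group. The sketch below is an illustration of that idea only, not the analysis we actually ran. The card names echo the split described above, but the two participant sorts are invented for the example.

```python
from collections import Counter
from itertools import combinations

# Hypothetical sorts: each participant's sort is a list of groups,
# and each group is a list of card names.
sorts = [
    [["Emails", "Tickets", "Notes"], ["Member info", "Listing info", "Reports"]],
    [["Emails", "Tickets"], ["Notes", "Member info"], ["Listing info", "Reports"]],
]


def co_occurrence(all_sorts):
    """Count how often each pair of cards was placed in the same group."""
    pairs = Counter()
    for sort in all_sorts:
        for group in sort:
            # Sort names so each pair always uses the same key order.
            for a, b in combinations(sorted(group), 2):
                pairs[(a, b)] += 1
    return pairs


pairs = co_occurrence(sorts)
for (a, b), n in pairs.most_common(3):
    print(f"{a} + {b}: grouped together by {n} participant(s)")
```

Pairs with high counts are candidates to live together in the IA, while pairs that are never grouped together point to a split like the one between emails/tickets/notes and member info/listing info/reports.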

We built and tested two IAs

After card sorting, we created two new IAs, and then customized one of the IAs for each of the three CS teams, giving us IAs to test. Each team was then asked to complete two tree tests, with 50% doing one first and 50% doing the other first. At first glance, the results of the tree test were okay — around 61% — but 'Could try harder'. We saw very little overall difference between the success of the two structures, but definitely some differences in task success. And we also came across an interesting quirk in the results.
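Splitting the order 50/50 like this is a form of counterbalancing. A minimal sketch of how the assignment could be done is below, assuming a simple list of participant names. The function, the tree labels and the participant names are all hypothetical.

```python
import random


def counterbalance(participants, seed=0):
    """Randomly assign half the participants to each test order."""
    rng = random.Random(seed)  # fixed seed so the split can be reproduced
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    orders = {}
    for index, person in enumerate(shuffled):
        if index < half:
            orders[person] = ("Tree A", "Tree B")
        else:
            orders[person] = ("Tree B", "Tree A")
    return orders


team = [f"participant-{n}" for n in range(1, 11)]
for person, order in sorted(counterbalance(team).items()):
    print(person, "->", " then ".join(order))
```

Alternating the order means any learning effect from the first test is spread evenly across both structures rather than favouring one of them.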

Closer analysis of the pie charts with an expert in Trade Me Admin showed that some ‘wrong’ answers would give part of the picture required. In some cases so much so that I reclassified answers as ‘correct’ as they were more right than wrong. Typically, in a real world situation, staff might check several reports in order to build a picture. This ambiguous nature is hard to replicate in a tree test which wants definitive yes or no answers. Keeping the tasks both simple to follow and comprehensive proved harder than we expected.

For example, we set a task that asked participants to investigate whether two customers had been bidding on each other's auctions. When we looked at the pietree (see screenshot below), we noticed some participants had clicked on 'Search Members', thinking they needed to locate the customer accounts, when the task had presumed that the customers had already been found. This is a useful insight into writing more comprehensive tasks that we can take with us into our next tests.

What’s clear from analysis is that although it’s possible to provide definitive answers for a typical site’s IAs, for a CRM like Trade Me Admin this is a lot harder. Devising and testing the structure of a CRM has proved a challenge for our highly trained audience, who are used to the current system and naturally find it difficult to see and do things differently. Once we had reclassified some of the answers as ‘correct’, one of the two trees was a clear winner — it had gone from 61% to 69%. The other tree had only improved slightly, from 61% to 63%.
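To make the effect of reclassification concrete, here is a small sketch of how a success rate shifts when a 'more right than wrong' destination is accepted as correct. The destination names (apart from 'Search Members') and the answer counts are invented so that the arithmetic mirrors the 61% to 69% change; they are not our real data.

```python
def success_rate(answers, correct):
    """Share of answers that land on a destination counted as correct."""
    return sum(answer in correct for answer in answers) / len(answers)


# Hypothetical answers for one tree: the destination each response ended on.
answers = (
    ["Bidding history"] * 61
    + ["Member financials"] * 8
    + ["Search Members"] * 31
)

strict = {"Bidding history"}               # the original 'correct' answer
lenient = strict | {"Member financials"}   # plus a reclassified answer

print(f"Before reclassification: {success_rate(answers, strict):.0%}")
print(f"After reclassification:  {success_rate(answers, lenient):.0%}")
```

The point is that the score depends on where you draw the line for 'correct', so it is worth recording which answers were reclassified and why.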

There were still elements of our winning structure that were performing sub-optimally, though. Generally, the problems were to do with labelling, where, in some cases, we had attempted to disambiguate those ‘SQL lookup’-type labels but in the process, confused the team. We were left with the dilemma of whether to go with the new labels and make the system initially harder to use for existing staff but easier to learn for new staff, or stick with the old labels, which are harder to learn. My view is that any new system is going to see an initial performance dip, so we might as well change the labels now and make it better.

The importance of carefully structuring questions in a tree test has been highlighted, particularly in light of the ‘start anywhere/go anywhere’ nature of a CRM. The diffuse but powerful nature of a CRM means that careful consideration of tree test answer options needs to be made, in order to decide ‘how close to 100% correct answer’ you want to get.

Development work has begun so watch this space

It's great to see that our research is influencing the next stage of the CRM system, and we're looking forward to seeing it go live. Of course, our work isn't over — and nor would we want it to be! Alongside the redevelopment of the IA, I've been redesigning the key pages from Trade Me Admin, and continuing to conduct user research, including first click testing using Chalkmark.

This project has been governed by a steadily developing set of design principles, focused on complex CRM systems and the specific needs of their audience. Two of these principles are to reduce navigation and to design for experts, not novices, which means creating dense, detailed pages. It's intense, complex, and rewarding design work, and we'll be exploring this exciting space in more depth in upcoming posts.

"Could I A/B test two content structures with tree testing?!"

"Dear Optimal Workshop

I have two huge content structures I would like to A/B test. Do you think Treejack would be appropriate?"

— Mike

Hi Mike (and excellent question)!

Firstly, yes, Treejack is great for testing more than one content structure. It’s easy to run two separate Treejack studies — even more than two. It’ll help you decide which structure you and your team should run with, and it won’t take you long to set them up.

When you’re creating the two tree tests with your two different content structures, include the same tasks in both tests. Using the same tasks will give an accurate measure of which structure performs best. I’ve done it before and I found that the visual presentation of the results — especially the detailed path analysis pietrees — made it really easy to compare Test A with Test B.

Plus (and this is a big plus), if you need to convince stakeholders or teammates of which structure is the most effective, you can’t go past quantitative data, especially when it’s presented clearly — it’s hard to argue with hard evidence!
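If you export the results, the task-by-task comparison can also be done in a few lines of code. The sketch below assumes you have the number of successes and participants per task for each test. The data layout, task names and numbers are hypothetical, and this is not a Treejack export format or API.

```python
def compare_tests(results_a, results_b):
    """Compare per-task success rates of two tree tests with identical tasks."""
    if set(results_a) != set(results_b):
        raise ValueError("Both tests must contain the same tasks")
    rows = []
    for task in sorted(results_a):
        rate_a = results_a[task]["successes"] / results_a[task]["participants"]
        rate_b = results_b[task]["successes"] / results_b[task]["participants"]
        rows.append((task, rate_a, rate_b, rate_b - rate_a))
    return rows


# Hypothetical results for Test A and Test B.
test_a = {
    "Find opening hours": {"successes": 12, "participants": 20},
    "Renew a permit": {"successes": 9, "participants": 20},
}
test_b = {
    "Find opening hours": {"successes": 15, "participants": 20},
    "Renew a permit": {"successes": 8, "participants": 20},
}

for task, a, b, diff in compare_tests(test_a, test_b):
    print(f"{task}: A {a:.0%}, B {b:.0%}, difference {diff:+.0%}")
```

The check at the top enforces the 'same tasks in both tests' rule, since a comparison across different tasks wouldn't tell you much about the structures themselves.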

Here are two examples of the kinds of results visualizations you could compare in your A/B test: the pietree, which shows correct and incorrect paths, and where people ended up:

And the overall Task result, which breaks down success and directness scores, and has plenty of information worth comparing between two tests:

Keep in mind that running an A/B tree test will affect how you recruit participants — it may not be the best idea to have the same participants complete both tests in one go. But it’s an easy fix — you could either recruit two different groups from the same demographic, or test one group and have a gap (of at least a day) between the two tests.

I’ve one more quick question: why are your two content structures ‘huge’?

I understand that sometimes these things are unavoidable — you potentially work for a government organization, or a university, and you have to include all of the things. But if not, and if you haven’t already, you could run an open card sort to come up with another structure to test (think of it as an A/B/C test!), and to confirm that the categories you’re proposing work for people.

You could even run a closed card sort to establish which content is more important to people than others (your categories could go from ‘Very important’ to ‘Unimportant’, or ‘Use everyday’ to ‘Never use’, for example). You might be able to make your content structure a bit smaller, and still keep its usefulness. Just a thought... and of course, you could try to get this information from your analytics (if available) but just be cautious of this because of course analytics can only tell you what people did and not what they wanted to do.

All the best Mike!

Designing for delight in the industry of fun: My story and observations

Emotional. Playful. Delightful.

These words resonate with user experience (UX) practitioners. We put them in the titles of design books. We build products that move up the design hierarchy of needs, with the goal to go beyond just reliability, usability, and productivity. We want to truly delight the people who use our products.

Designing for delight has parallels in the physical world. I see this in restaurants which offer not only delicious food but also an inviting atmosphere; in stores that don’t just sell clothes but also provide superior customer service. Whole industries operate on designing for delight.

The amusement industry has done this for over 400 years. The world’s oldest operating amusement park, Bakken, first opened for guests in 1583 – about 300 years before the first modern roller coaster. Amusement parks experienced a boom in growth in the US in the 1970s. As of November 2014, China had 59 new amusement parks under construction. Today, hundreds of millions of guests each year visit amusement parks throughout the world.

I've been fortunate to work in the amusement industry as the owner of a digital UX design company called Thrill & Create. Here is my story of how I got to do this kind of work, and my observations as a UX practitioner in this market.

Making user-centred purveyors of joy

I've been a fan of amusement parks for most of my life. And it’s somewhat hereditary. Much of my family still lives in Central Florida, and several of them have annual passes to Walt Disney World. My mom was a Cast Member at the Magic Kingdom during its opening season.

Although I grew up living east of Washington, DC, I spent most of my childhood waiting for our annual trip to an amusement park. I was a different kind of amusement enthusiast: scared to death of heights, loath to ride roller coasters, but so interested in water rides and swimming that my family thought I was a fish. The love for roller coasters would show up much later. But the collection of park maps from our annual trips grew, and in the pre-RollerCoaster Tycoon days, I would sketch designs for amusement parks.

In college, my interest in amusement received a healthy boost from the internet. In the mid-2000s, before fan communities shifted toward Facebook, unofficial websites were quite popular. My home park — a roller coaster enthusiasts’ term for the park that we visit most frequently, not necessarily the closest park to us — had several of these fansites.

The fansites would typically last for a year or two and enjoy somewhat of a rivalry with other fansites before their creators would move on to a different hobby and close their sites. The fansites’ forums became an interesting place to share knowledge and learn history about my home park. They also gave us a place to discuss what we would do if we owned the park, to echo rumors we had heard, and start our own rumors. Some quite active forums still exist for this.

Of course, many of us on the forums wanted to be the first to hear a rumor. So we follow the industry blogs, which are typically the first sources of the news. Screamscape has been announcing amusement-industry rumors since the 1990s. And Screamscape and other sites like it announce news not only in the parks but around the industry. Regular Screamscape readers learn about ride manufacturers, trade names for each kind of ride, industry trade shows, and much more. And the International Association of Amusement Parks and Attractions (IAAPA) keeps an eye on the industry as well.

Several years ago, I transitioned from being a software developer to starting a user experience design company. It is now called Thrill & Create. I was faced with a challenge: how to compete against the commoditization of freelance design services. Ultimately, selecting a niche was the answer. And seeing IAAPA’s iconic roller coaster sign outside the Orange County Convention Center during a trip to Central Florida was all I needed to shift my strategy toward the amusement industry.

What UX looks like in the Amusement Industry

Periodically, I see new articles about amusement parks in UX blogs. Here are my observations about how UX looks in the industry, both in the physical world and the digital world.

Parks are focused on interactive rides

The amusement industry is known for introducing rides that are bigger, taller, and faster. But the industry has a more interactive future. Using cleverly-designed shops which produce some of the longest waits in the park, Universal Studios has sold many interactive wands to give guests additional experiences in Hogsmeade and Diagon Alley.

At IAAPA, interactivity and interactive rides are very hot topics. Interactive shooting dark rides came to many parks in the early 2000s. Wonder Mountain’s Guardian, a 2014 addition to Canada’s Wonderland, features the world’s longest interactive screen and a ride program that changes completely for the Halloween season.

Also coming in 2015 are the elaborately-themed Justice League: Battle for Metropolis rides at two Six Flags parks. And Wet‘n’Wild Las Vegas will debut 'Slideboarding', which allows riders to participate in a video game by touching targets on their way down the slide, and is marketed as 'the world's first waterslide gaming experience'.

UX design is well-established for the physical space

Terms like 'amusement industry', 'attractions industry', and 'themed entertainment industry' can be interchangeable, but they do have different focuses, and different user experience design needs. The amusement industry encompasses amusement parks, theme parks, zoos, aquaria, museums, and their suppliers; the attractions industry also includes other visitor attractions.

Several experience design companies have worked extensively on user-centered themed entertainment.

Jack Rouse Associates, who see themselves as “audience advocates”, have worked with over 35 clients in themed entertainment, including Universal, Ocean Park, and LEGOLAND. Thinkwell Group, which touts a 'guest-centric approach to design', showcases 14 theme park and resort projects and attractions work in 12 countries.

Consultants in the industry have been more intentionally user-centered. Sasha Bailyn and her team at Entertainment Designer write regularly about physical-world experience design, including UX, in themed entertainment. Russell Essary, owner of Interactive Magic, applies user-centered design to exhibit design, wayfinding, game design, and much more.

In-house teams, agencies, and freelancers are becoming more common

Several large park chains have in-house or contracted UX design teams. Most mid-sized parks work with in-house marketing staff or with outside design companies. One company I know of specializes in web design and development for the amusement industry. Smaller parks and ride companies tend to work with local web designers, or occasionally free website vendors.

Some of the best redesigns in the amusement industry recently have involved UX designers. Parc Astérix, north of Paris, hired a UX designer for a redesign with immersive pictures, interesting shapes, and unique iconography. SeaWorld Parks & Entertainment, working with UX designers, unifies their brand strongly across their corporate site, the sites for SeaWorld and Busch Gardens, and individual park sites. The Memphis Zoo’s website, which showcases videos of their animals, was built by an agency that provides UI design and UX design among their other services.

Sometimes, amusement sites with a great user experience are not made by UX practitioners. The website for Extreme Engineering has a very strong, immersive visual design which communicates their brand well. When I contacted them to learn who designed their site, I was surprised to learn that their head of marketing had designed it.

What I think is going well

Several developments have encouraged me in my mission to help the amusement industry become more user-centered.

UX methods are producing clear wins for my clients and their users

My clients in the industry so far have had significant fan followings. Fans have seen my user-centered approach, and they have been eager to help me improve their favorite sites. I told a recent client that it would take a week to get enough responses from his site visitors on an OptimalSort study. Within a few hours, we exceeded our target number of responses.

When I worked on redesign concepts for a network of park fansites, I ran separate OptimalSort studies for all 8 fansites in the network. They used comparable pages from each site as cards. We discovered that some parks’ attractions organized well by themed area, while others organized well by ride type. Based on this, we decided to let users find attractions using either way on every site. User testers received Explore the Park and the two other new features that emerged from our studies (Visit Tips and Fansite Community) very well.

Although not all of the features I designed for The Coaster Crew went live, the redesign of their official website produced solid results. Their Facebook likes increased over 50% within a year, and their site improved significantly in several major KPIs. Several site visitors have said that the Coaster Crew’s website’s design helped them choose to join The Coaster Crew instead of another club. So that's a big win.

In-park guest experiences and accessibility are hot right now

IAAPA offered over 80 education sessions for industry professionals at this past Attractions Expo. At least nine sessions discussed guest experience. Guest experience was also mentioned in several industry publications I picked up at the show, including one which interviewed The Experience Economy author B. Joseph Pine II.

And parks are following through on this commitment, even for non-riders. Parks are increasingly theming attractions in ways that allow non-riders to experience a ride’s theme in the ride’s environment. For example, Manta at SeaWorld Orlando is a flying roller coaster themed to a manta ray. The park realized that not all of its guests will want to ride a thrilling ride with four inversions. So, separate queues allow both riders and non-riders to see aquariums with around 3,000 sea creatures. And Manta becomes, effectively, a walkthrough attraction for guests who do not want to ride the roller coaster.

The industry has also had encouraging innovations recently in accessibility. Attractions Management Magazine recently featured Morgan’s Wonderland, an amusement park geared toward people with physical and cognitive disabilities. Water parks are beginning to set aside times to especially cater to guests with autism. And at IAAPA, ride manufacturer Zamperla donated a fully-accessible ride to Give Kids the World, an amusement-industry charity.

Several successful consultancies are helping amusement parks and attractions deliver both a better guest experience in the park and better results on business metrics. And that's something I'm definitely keen to be a part of.

Amusement business factors with UX implications

While this is not an exhaustive list, here are some factors in the amusement industry which have UX implications.

Investment in improvements

To keep guests interested in returning, parks reinvest between 5% and 10% of their revenue into improvements. Park chains typically allocate one capital improvements budget — for new attractions and any other kind of improvement — throughout their entire chain. Reserving enough capital for guest experience improvements is a challenge, even when each touchpoint in a guest’s experience has make-or-break importance. Progress in this area has been slow.

A shift in focus away from high thrills

While ride manufacturers continue to innovate, they are starting to encounter limits on how much physical thrill the human body can handle. A new world’s tallest complete-circuit roller coaster should open in 2017. But that record, only broken one other time since 2003, was broken 5 times between 1994 and 2003. So the industry is shifting toward more immersive attractions and "psychological thriller" rides.

Increased reliance on intellectual property

While some parks still develop their own worlds and characters for attractions, parks today increasingly rely on third-party intellectual property (IP), such as movies, TV shows, and characters. Third-party IP provides guests with a frame of reference for interpreting what they see in the park. For example, The Wizarding World of Harry Potter enjoyed a very positive reception from guests due to its faithfulness to the Harry Potter books and films. In the same way, fans will notice if a themed area or attraction is not faithful to the original, and will see it as a broken experience.

Bring your own device

Many guests now carry mobile devices with them in the parks. But so far, guests have not been able to use their mobile devices to trigger changes in a park’s environment. The closest this has come is the interactive wands in The Wizarding World of Harry Potter. Most parks ban mobile devices from most rides due to safety hazards. And amusement parks and museums are both beginning to ban selfie sticks.

Multiple target markets at the same time

Because parks look for gaps in their current offerings and customer bases, they very rarely add new attractions for similar audiences several years in a row. My regional parks tend to handle additions on a 5-10 year cycle. They alternate year by year with additions like a major roller coaster, one or more thrilling flat rides, a family ride, and at least one water ride, to appeal to different market segments, as regularly as possible.

The same goes for in-park UX improvements. Themed environment upgrades in a kids’ area appeal to few people in haunted attractions’ target audiences, and vice versa.

Empathy

According to the Association of Zoos and Aquariums (AZA), the fact that most people today will never see sharks, elephants, or pandas in the wild is making conservation efforts more difficult. Zoos and aquaria, in particular, have a large opportunity to allow families to empathize with animals and efforts to conserve threatened species. But, according to an International Zoo Educators Association presentation, most zoo and aquarium guests go there primarily just to see the animals or please their kids.

Ideas for improving the user experience of websites and software in the amusement industry

My primary work interest is to design digital experiences in the amusement industry that support the goals of their target users. To understand where the industry currently stands, I have visited several thousand websites for amusement parks, ride companies, suppliers, zoos, aquaria, museums, and dolphinariums. Below, I've documented a few problems I've seen, and suggested ways companies can solve these problems.

Treat mobile as a top priority

Currently, over 500 websites in the industry are on my radar as sites to improve. At least 90 of them are desktop-only websites with no mobile presence. Several of these sites — even for major ride manufacturers — use Flash and cannot be viewed at all on a mobile device.

The industry’s business-to-consumer (B2C) organizations, such as parks, realize that a great deal of traffic comes from mobile and that mobile users are more likely to leave a site that is desktop-only.

These organizations recognize the simple fact that going mobile means selling more tickets. IAAPA itself has capitalized on mobile for their trade show attendees for several years by making a quite resourceful mobile app available.

However, at IAAPA, I asked people from several business-to-business (B2B) companies why their sites were not mobile yet. Several told me that they didn’t consider mobile a high priority and that they might start working on a mobile site “in about a year or so.” Thus, they don't feel a great deal of urgency — and I think it's time they did.

Bring design styles and technologies up to date

Many professional UX designers and web designers are well aware of 1990s-style web design artefacts like misused fonts (mainly Comic Sans and Papyrus), black text on a red background, obviously-tiled backgrounds, guestbooks, and splash screens. But I've seen more than a few live amusement websites that still use each of these.

Sites that prompt users to install Flash (on mobile devices) or QuickTime increase users’ interaction cost with the site, because the flow of their tasks has been interrupted.

And as Jakob Nielsen says, "Unless everything works perfectly, the novice user will have very little chance of recovery."

If a website needs to use technologies such as Flash or features such as animation or video, a more effective solution would be progressive enhancement. Users whose devices lack the capability to work with these technologies would still see a website with its core features intact and no error messages to distract them from converting.

Organize website information to support user goals and knowledge

Creating an effective website involves much more than using up-to-date design styles. It also involves the following.

Understand why users are on the website

Businesses promote products and services that make them money. Many amusement parks now offer front-of-line passes, VIP tours, pay-per-experience rides, locker rentals, and water park cabana rentals, which are each an additional charge for admitted guests. And per-capita spending is very important to not only parks’ operations, but also their investor relations.

Users come to websites with the question, “What’s in it for me?”, and their own sets of goals. Businesses need to know when these goals match their own goals and when they conflict.

The importance of each goal should also be apparent in the design. One water park promotes its changing rooms and locker rentals on its homepage. This valuable space, dedicated to logistical information for guests who are already coming, could be used for attracting prospective visitors. Analytics tools and the search queries that they show are helpful for understanding why users come to a website. Sites should supplement these by conducting usability studies with users outside their organization. These studies, in turn, could include questions allowing users to describe why they would visit that website. The site could use this knowledge to make sure that its content speaks to users’ reasons for visiting.

Understand what users know

Non-technical users are bringing familiarity with how to use the internet when they visit a website. They quickly become perpetual intermediates on the internet, and don’t need to be told how buttons and links work. I've noticed amusement websites that currently label calls to action with “Click Here”, and some even do so on more than one link. This explicit instruction to people is no longer needed, and the best interfaces signal clickable elements in their visual design.

Similarly, people expect to find information in categories they understand and in language familiar to them. So it's important to not make assumptions that people who visit our websites think like us. For example, if a website is organized by model name, users will need to already be familiar with these products and the differences between them. Usability testing exercises, such as card sorting, would contribute to a better design and solve this problem.

Design websites that are consistent with users’ expectations

Websites that aim to showcase a company’s creativity and sense of fun sometimes lack features that people are used to when they visit websites (like easy-to-access menus, vertical scrolling, and so on). But it's important to remember that unconventional designs may lead to increased effort for visitors, which in turn may create a negative experience. A desire to come across as fun may conflict with a visitor's need for ease and simplicity.

The site organization needs to reflect users' goals with minimal barriers to entry. People will be frustrated with things like needing to log in to see prices, having to navigate three levels deep to buy tickets, and coming across unfamiliar or contradictory terms.

Design content for reading

Marketers have written about increased engagement and other benefits resulting from automatically playing videos, animated advertising, and rotating sliders or carousels (all of which usability practitioners have argued against). And this obsession with visual media has sometimes taken attention away from a feature people still want: easy-to-read text.

There are still websites in the industry that show walls of text, rivers of text, very small text for main content, text embedded within images, and text written in all-capital letters. But telling clients, “Make the text bigger, higher-contrast, and sentence case”, can conflict with the increasing reliance on exciting visual and interactive design elements.

Goal-directed design shows us what that problem is. For example, if people visit a website to learn more about a company, the website’s design should emphasize the content that gets that message across — in the format that users find most convenient.

I recently worked with a leading themed entertainment blogger to improve his site’s usability. His site, Theme Park University, provides deep knowledge of the themed entertainment industry that readers cannot get anywhere else. It first came to my attention when he published a series of posts on why Hard Rock Park in Myrtle Beach, South Carolina (one of the most ambitious new theme park projects in the US in the 2000s) failed after only one season.

As a regular reader, I knew that TPU had truly fantastic content and an engaged community on social media. But users commented to us that the site was cluttered, and so they didn't spend much time on the website. We needed to give the website a more open look and feel — in other words, one designed to be read.

The project was not a full redesign. But by fitting small changes into the site’s existing design, we made the site more open and easier to read while retaining its familiar branding for readers. We also made his site’s advertising more effective, even with fewer ads on each page.

As D. Bnonn Tennant says, “Readership = Revenue.… [A website] has to fulfill a revenue goal. So, every element should be designed to achieve that goal. Including the copy. Especially the copy — because the copy is what convinces visitors to do whatever it is you want them to do on the website.”

Three final ideas for getting UX a seat at the table

In helping take UX methods to the amusement industry, I have learned several lessons which would help other practitioners pioneer UX in other industries:

1. Explain UX benefits without UX jargon

When I work for clients in the amusement industry, the biggest challenge that I face is unfamiliarity with UX. Most other professionals in the industry do not know about UX design principles or practices. I have had to educate clients on the importance of giving me feedback early and testing with users often. And because most of my clients have not had a technical background, I have had to explain UX and its benefits in non-UX terms.

2. Be willing to do non-UX project work yourself in a team of one

As a business owner, I regularly prospect for new clients. The biggest challenge in landing projects here is trying to convince people that they should hire a bigger team than just me. A bigger team (and higher rates for a UXer versus a web designer) leads to bigger project costs and more reluctant approvals. So I have had to do development – and even some tech support – myself so far.

3. Realize clients have a lot on their plates — so learn patience

The other main challenge in selling UX to the amusement industry is project priority. Marketing departments that handle websites are used to seeing the website as one job duty out of many. Ride companies without dedicated IT staff tend to see the website as an afterthought, partially because they do most of their business at trade shows instead of online. This has led to several prospects telling me that they might pursue a redesign a year from now or later, but not in the near future. That's OK because I can be ready for them when they're ready for me.

Collating your user testing notes

It’s been a long day. Scratch that - it’s been a long week! Admit it. You loved every second of it.

Twelve hour days, the mad scramble to get the prototype ready in time, the stakeholders poking their heads in occasionally, dealing with no-show participants and the excitement around the opportunity to speak to real life human beings about product or service XYZ. Your mind is exhausted but you are buzzing with ideas and processing what you just saw. You find yourself sitting in your war room with several pages of handwritten notes and with your fellow observers you start popping open individually wrapped lollies leftover from the day’s sessions. Someone starts a conversation around what their favourite flavour is and then the real fun begins. Sound familiar? Welcome to the post user testing debrief meeting.

How do you turn those scribbled notes and everything rushing through your mind into a meaningful picture of the user experience you just witnessed? And then when you have that picture, what do you do next? Pull up a bean bag, grab another handful of those lollies we feed our participants and get comfy because I’m going to share my idiot-proof, step by step guide for turning your user testing notes into something useful.

Let’s talk

Get the ball rolling by holding a post session debrief meeting while it’s all still fresh in your collective minds. This can be done as one meeting at the end of the day’s testing or you could have multiple quick debriefs in between testing sessions. Choose whichever option works best for you but keep in mind this needs to be done at least once and before everyone goes home and forgets everything. Get all observers and facilitators together in any meeting space that has a wall-like surface that you can stick post its to - you can even use a window! And make sure you use real post its - the fake ones fall off!

Mark your findings (Tagging)

Before you put sharpie to post it, it’s essential to agree as a group on how you will tag your observations. Tagging the observations now will make the analysis work much easier and help you to spot patterns and themes. Colour coding the post its is by far the simplest and most effective option and how you assign the colours is entirely up to you. You could have a different colour for each participant or testing session, you could have different colours to denote participant attributes that are relevant to your study eg senior staff and junior staff, or you could use different colours to denote specific testing scenarios that were used. There are many ways you could carve this up and there’s no right or wrong way. Just choose the option that suits you and your team best because you’re the ones who have to look at it and understand it. If you only have one colour of post it eg yellow, you could colour code the pens you use to write on the notes or include some kind of symbol to help you track them.

Processing the paper (Collating)

That pile of paper is not going to process itself! Your next job as a group is to work through the task of transposing your observations to post it notes. For now, just stick them to the wall in any old way that suits you. If you’re the organising type, you could group them by screen or testing scenario. The positioning will all change further down the process, so at this stage it’s important to just keep it simple. For issues that occur repeatedly across sessions, just write them down on their own post its - doubles will be useful to see further down the track.

In addition to holding debrief meetings, you also need to round up everything that was used to capture the testing session/s. And I mean EVERYTHING.

Handwritten notes, typed notes, video footage and any audio recordings need to be reviewed just in case something was missed. Any handwritten notes should be typed to assist you with the completion of the report. Don’t feel that you have to wait until the testing is completed before you start typing up your notes because you will find they pile up very quickly and if your handwriting is anything like mine…. Well let’s just say my short term memory is often required to pick up the slack and even that has its limits. Type them up in between sessions where possible and save each session as its own document. I’ll often use the testing questions or scenario based tasks to structure my typed notes and I find that makes it really easy to refer back to.

Now that you’ve processed all the observations, it’s time to start sorting them to surface behavioural patterns and make sense of it all.

Spotting patterns and themes through affinity diagramming

Affinity diagramming is a fantastic tool for making sense of user testing observations. In fact it’s just about my favourite way to make sense of any large mass of information. It’s an engaging and visual process that grows and evolves like a living creature taking on a life of its own. It also builds on the work you’ve just done which is a real plus!

By now, testing is over and all of your observations should be stuck to a wall somewhere. Get everyone together again as a group and step back and take it all in. Just let it sit with you for a moment before you dive in. Just let it breathe. Have you done that? Ok now as individuals working at the same time, start by grouping things that you think belong together. It’s important to just focus on the content of the labels and try to ignore the colour coded tagging at this stage, so if session one was blue post its don’t group all the blue ones together just because they’re all blue! If you get stuck, try grouping by topic or create two groups eg issues and wins and then chunk the information up from there.

You will find that the groups will change several times over the course of the process and that’s ok because that’s what they need to do.

While you do this, everyone else will be doing the same thing - grouping things that make sense to them. Trust me, it’s nowhere near as chaotic as it sounds! You may start working as individuals but it won’t be long before curiosity kicks in and the room is buzzing with naturally occurring conversation.

Make sure you take a step back regularly and observe what everyone else is doing and don’t be afraid to ask questions and move other people’s post its around - no one owns it! No matter how silly something may seem just put it there because it can be moved again. Have a look at where your tagged observations have ended up. Are there clusters of colour? Or is it more spread out? What that means will depend largely on how you decided to tag your findings. For example if you assigned each testing session its own colour and you have groups with lots of different colours in them you’ll find that the same issue was experienced by multiple people.

Next, start looking at each group and see if you can break them down into smaller groups and at the same time consider the overall picture for bigger groups eg can the wall be split into say three high level groups.

Remember, you can still change your groups at any time.

Thinning the herd (Merging)

Once you and your team are happy with the groups, it’s time to start condensing the size of this beast. Look for doubled up findings and stack those post its on top of each other to cut the groups down - just make sure you can still see how many there were. The point of merging is to condense without losing anything so don’t remove something just because it only happened once. That one issue could be incredibly serious. Continue to evaluate and discuss as a group until you are happy. By now clear and distinct groups of your observations should have emerged and at a glance you should be able to identify the key findings from your study.
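If your observations have also been typed up, the same merge can be sketched in a few lines of Python. This is only an illustration of the idea of stacking doubles without losing the count: the `notes` list, its three-part layout (session tag, group, observation) and the `merge_notes` function are all made up for this example.

```python
from collections import defaultdict

# Invented sample notes: (session tag, affinity group, observation text).
notes = [
    ("session 1", "navigation", "Could not find the help button"),
    ("session 2", "navigation", "Could not find the help button"),
    ("session 3", "navigation", "Expected search in the header"),
    ("session 1", "checkout", "Unsure whether payment went through"),
]


def merge_notes(notes):
    """Stack identical observations within a group, keeping the count
    and the set of sessions in which each one was seen."""
    merged = defaultdict(lambda: {"count": 0, "sessions": set()})
    for session, group, text in notes:
        entry = merged[(group, text)]
        entry["count"] += 1
        entry["sessions"].add(session)
    return dict(merged)


merged = merge_notes(notes)
for (group, text), entry in merged.items():
    # One-off findings stay in the output; nothing is dropped.
    print(f"{group}: {text} (x{entry['count']}, {len(entry['sessions'])} sessions)")
```

A finding that appears once still gets its own entry, which matches the rule above: condense, but don't remove something just because it only happened once.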

A catastrophe or a cosmetic flaw? (Scoring)

Scoring relates to how serious the issues are and how bad the consequences of not fixing them are. There are arguments for and against the use of scoring and it’s important to recognise that it is just one way to communicate your findings.

I personally rarely use scoring systems. It’s not really something I think about when I’m analysing the observations. I rarely rank one problem or finding over another. Why? Because all data is good data and it all adds to the overall picture.

I’ve always been a huge advocate for presenting the whole story and I will never diminish the significance of a finding by boosting another. That said, I do understand the perspective of those who place metrics around their findings. Other designers have told me they feel that it allows them to quantify the seriousness of each issue and help their client/designer/boss make decisions about what to do next.

We’ve all got our own way of doing things, so I’ll leave it up to you to choose whether or not you score the issues. If you decide to score your findings there are a number of scoring systems you can use and if I had to choose one, I quite like Jakob Nielsen’s methodology for the simple way it takes into consideration multiple factors. Ultimately you should choose the one that suits your working style best.

Let’s say you did decide to score the issues. Start by writing down each key finding on its own post it and move to a clean wall/window. Leave your affinity diagram where it is. Divide the new wall in half: one side for wins eg findings that indicate things that tested well and the other for issues. You don’t need to score the wins but you do need to acknowledge what went well because knowing what you’re doing well is just as important as knowing where you need to improve. As a group (wow you must be getting sick of each other! Make sure you go out for air from time to time!) score the issues based on your chosen methodology.

Once you have completed this entire process you will have everything you need to write a kick ass report.

What could possibly go wrong? (and how to deal with it)

No process is perfect and there are a few potential dramas to be aware of:

People jumping into solution mode too early

In the middle of the debrief meeting, someone has an epiphany. Shouts of “We should move the help button!” or “We should make the yellow button smaller!” ring out and the meeting goes off the rails.

I’m not going to point fingers and blame any particular role because we’ve all done it, but it’s important to recognise that’s not why we’re sitting here. The debrief meeting is about digesting and sharing what you and the other observers just saw. Observing and facilitating user testing is a privilege. It’s a precious thing that deserves respect and if you jump into solution mode too soon, you may miss something. Keep the conversation on track by appointing a team member to facilitate the debrief meeting.

Storage problems

Handwritten notes taken by multiple observers over several days of testing add up to an enormous pile of paper. Not only is it a ridiculous waste of paper but they have to be securely stored for three months following the release of the report. It’s not pretty. Typing them up can solve that issue but it comes with its own set of storage related hurdles. Just like the handwritten notes, they need to be stored securely. They don’t belong on SharePoint or in the share drive or any other shared storage environment that can be accessed by people outside your observer group. User testing notes are confidential and are not light reading for anyone and everyone no matter how much they complain. Store any typed notes in a limited access storage solution that only the observers have access to and if anyone who shouldn’t be reading them asks, tell them that they are confidential and the integrity of the research must be preserved and respected.

Time issues

Before the storage dramas begin, you have to actually pick through the mountain of paper. Not to mention the video footage, and the audio and you have to chase up that sneaky observer who disappeared when the clock struck 5. All of this takes up a lot of time. Another time related issue comes in the form of too much time passing in between testing sessions and debrief meetings. The best way to deal with both of these issues is to be super organised and hold multiple smaller debriefs in between sessions where possible. As a group, work out your time commitments before testing begins and have a clear plan in place for when you will meet. This will prevent everything piling up and overwhelming you at the end.

Disagreements over scoring

At the end of that long day/week we’re all tired and discussions around scoring the issues can get a little heated. One person’s showstopper may be another person’s mild issue. Many of the ranking systems use words as well as numbers to measure the level of severity and it’s easy to get caught up in the meaning of the words and ultimately get sidetracked from the task at hand. Be proactive and as a group set ground rules upfront for all discussions. Determine how long you’ll spend discussing an issue and what you will do in the event that agreement cannot be reached. People want to feel heard and they want to feel like their contributions are valued. Given that we are talking about an iterative process, sometimes it’s best just to write everything down to keep people happy and merge and cull the list in the next iteration. By then they’ve likely had time to reevaluate their own thinking.

And finally...

We all have our own ways of making sense of our user testing observations and there really is no right or wrong way to go about it. The one thing I would like to reiterate is the importance of collaboration and teamwork. You cannot do this alone, so please don’t try. If you’re a UX team of one, you probably already have a trusted person that you bounce ideas off. They would be a fantastic person to do this with. How do you approach this process? What sort of challenges have you faced? Let me know in the comments below.

"I'm a recent graduate who wants a UI/UX career. Any tips, advice, or leads to get me started?"

"Dear UX Agony Aunt I'm a recent graduate, and I'm interested in becoming a UI/UX designer/developer. The problem is, I don't really know where to start! Is it too much to hope for that out there in the industry, somewhere, is a pro who would be willing to mentor me? Any tips, advice, or leads?" — Nishita

Dear Nishita, Congrats on your recent graduation! I think it’s wonderful that you’ve found what you’re interested in — and even better that it’s UX! Girl, I bet you don't know where to start! I've been there, let me tell you. One thing I know for sure: UX is a multifaceted industry that defies strict definitions and constantly evolves. But there are plenty of ways in, and you'll have no trouble if you foster these three things: empathy, drive, and an open mind. I now humbly present 5 of my best tips for starting your career with a bang. After you read these, explore the resources I've listed, and definitely head on over to UX Mastery (a place that any UXer can call home).

My Stunningly Amazing Five Top Tips for Starting a UX Career

That's right — do these things and you'll be on your way to a dazzling career.

Start with something you enjoy

One of my favourite things about UX is the sheer number of options available to you. It's that hot, and that in demand, that YOU get to choose which piece of it you want to bite off first. I’m an industrial designer, but the user research side of things makes me so so happy, so that's what I do. It may seem daunting, impossible, or even slightly cliche to simply "Do what you love". But armed with the three essential ingredients I mentioned above — empathy, drive, and an open mind — you actually can do anything. And you must make use of UX Mastery's UX Self Assessment Sundial. Trust me — it'll help you to clarify the skills you have and what you love.

Start a two-way relationship with a mentor

A mentor is a wonderful thing to have no matter what stage you are at in your UX career. You might even find yourself with more than one — I personally have four! They each bring their own experiences and skills into the mix, and I bring mine too. And here's the great thing about mentoring — I also have four mentees of my own. Mentoring is a two-way street, so think about what you could bring to the relationship as well. You might have a skill your mentor wants to learn, or they may have never mentored before and you'll be their guinea pig. You asked if someone out there would be willing to mentor you. Yes absolutely! UX people are some of the nicest people around (if I do say so myself!). We devote our time to improving the experience of others, and truthfully, we never stop iterating ourselves (an ever-evolving project). How do you find a mentor? Oh, that’s easy: just ask. Seriously, it’s that simple. Reach out to people who inspire you — email, social media, and video calling mean you don't have to let a silly thing like the ocean be a barrier!

Build meaningful connections with fellow UXers

Connecting with other UX humans, both online and face-to-face, is essential. Why? Because people are the heart of UX. We also make excellent company, what with our creative intelligence and our wicked sense of humor (well, that's describing me and the people I know, anyway!). For online connections, get thee straight to the UX Mastery community — it's where I found my feet as a new UXer — where it's totally fine to out yourself as a newbie and ask those questions burning a hole in your pocket (or mind). For in-person connections, a quick Google search should turn up UX events and meetups in your area — be brave and just go! You will have a great time, promise.

Use Twitter as your source of quality UX-related content

Twitter is my favourite online resource for UX articles and resources. There are just SO many potential things to read, so Twitter acts as the perfect filter. Set up a Twitter account for all your professional UX stuff (do remember that this means no tweeting about how cranky you are that your cat didn’t keep its breakfast down). Only follow the people who do the things you're interested in (so no following the Kardashians). And make an effort to not just skim read the posts and resources people share, but to absorb the content, make notes, reflect, agree or disagree, brainstorm and wrestle with the ideas, put them into practice, discuss them with people, tweet, retweet, and retweet other people's retweets. And whenever you stumble upon a particularly interesting or useful post, sign up for the author's newsletter or add them to your RSS feed.

Amplify your online presence (CVs have been kicked off their throne)

The best advice I received when starting out was to build an online presence. At the time, I was iterating my CV and asking for feedback — the traditional "How to get a job" approach we were taught as tots.

My manager told me then that it’s really not about your CV — it’s more about your LinkedIn profile, and your ability to share your thoughts with others through blogging and tweeting. CVs are still useful, but things are different now. Was he right? Damn straight he was! In addition to the professional Twitter account you’re going to set up, update your LinkedIn profile and consider starting a blog (which, incidentally, is a great way to engage with the UX content you'll already be reading and tweeting about — double whammy!).

Start Here: Five websites and ten twitter accounts to follow right now

Subscribe to updates and dive into the archives of these places:

Then search for these accounts and hit 'Follow' on Twitter:

Go for it Nishita — you'll do great!

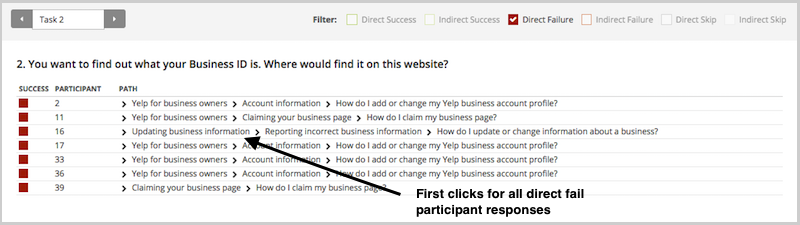

Does the first click really matter? Treejack says yes

In 2009, Bob Bailey and Cari Wolfson published a paper entitled “FirstClick Usability Testing: A new methodology for predicting users’ success on tasks”. They’d analyzed 12 scenario-based user tests and concluded that the first click people make is a strong leading indicator of their ultimate success on a given task. Their results were so compelling that we got all excited and created Chalkmark, a tool especially for first click usability testing. It occurred to me recently that we’ve never revisited the original premise for ourselves in any meaningful way.

And then one day I realized that, as if by magic, we’re sitting on quite possibly the world’s biggest database of tree test results. I wondered: can we use these results to back up Bob and Cari’s findings (and thus the relevance of Chalkmark)?

Hell yes we can.

So we’ve analyzed tree testing data from millions of responses in Treejack, and we're thrilled (relieved) that it confirmed the findings from the 2009 paper — convincingly.

What the original study found

Bob and Cari analyzed data from twelve usability studies on websites and products ‘with varying amounts and types of content, a range of subject matter complexity, and distinct user interfaces’. They found that people were about twice as likely to complete a task successfully if they got their first click right, than if they got it wrong:

- If the first click was correct, the chance of getting the entire scenario correct was 87%

- If the first click was incorrect, the chance of eventually getting the scenario correct was only 46%

What our analysis of tree testing data has found

We analyzed millions of tree testing responses in our database. We've found that people who get the first click correct are almost three times as likely to complete a task successfully:

- If the first click was correct, the chance of getting the entire scenario correct was 70%

- If the first click was incorrect, the chance of eventually getting the scenario correct was 24%

To give you another perspective on the same data, here's the inverse:

- If the first click was correct, the chance of getting the entire scenario incorrect was 30%

- If the first click was incorrect, the chance of getting the whole scenario incorrect was 76%
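To see where "about twice as likely" and "almost three times as likely" come from, here is a small Python sketch of the arithmetic. The percentages are the ones quoted above. The names `rates` and `success_ratio` are made up for this example and are not part of Treejack or the 2009 paper.

```python
# Success rates quoted in the text, split by whether the first click was correct.
rates = {
    "2009 usability studies": {"correct": 0.87, "incorrect": 0.46},
    "Treejack tree tests": {"correct": 0.70, "incorrect": 0.24},
}


def success_ratio(correct_rate, incorrect_rate):
    """How many times more likely success is after a correct first click."""
    return correct_rate / incorrect_rate


for study, r in rates.items():
    ratio = success_ratio(r["correct"], r["incorrect"])
    # The "inverse" view is simply one minus each success rate.
    fail_after_correct = round(1 - r["correct"], 2)
    fail_after_incorrect = round(1 - r["incorrect"], 2)
    print(f"{study}: {ratio:.2f}x as likely to succeed; "
          f"failure rates {fail_after_correct:.0%} vs {fail_after_incorrect:.0%}")
```

The ratios work out to roughly 1.89 for the 2009 studies and 2.92 for the tree testing data, which is where the "twice" and "almost three times" wording comes from.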

How Treejack measures first clicks and task success

Bob and Cari proved the usefulness of the methodology by linking two key metrics in scenario-based usability studies: first clicks and task success. Chalkmark doesn't measure task success — it's up to the researcher to determine as they're setting up the study what constitutes 'success', and then to interpret the results accordingly. Treejack does measure task success — and first clicks.

In a tree test, participants are asked to complete a task by clicking through a text-only version of a website hierarchy, and then clicking 'I'd find it here' when they've chosen an answer. Each task in a tree test has a pre-determined correct answer (as was the case in Bob and Cari's usability studies), and every click is recorded, so we can see participant paths in detail.

Thus, every single time a person completes an individual Treejack task, we record both their first click and whether they are successful or not. When we came to test the 'correct first click leads to task success' hypothesis, we could therefore mine data from millions of tasks.
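To make the idea concrete, here is a rough sketch of how success rates can be split by first click once each completed task is stored as a pair of facts: was the first click correct, and was the task successful? The record layout and the toy data below are assumptions for illustration only; they are not real results, and not Treejack's actual data format.

```python
from collections import Counter

# Hypothetical task records: (first_click_correct, task_successful).
# Toy data for illustration, not real Treejack results or its export format.
records = [
    (True, True), (True, True), (True, False), (True, True),
    (False, False), (False, True), (False, False), (False, False),
]

def success_rate_by_first_click(records):
    """Return {first_click_correct: share of tasks that ended in success}."""
    totals = Counter()
    successes = Counter()
    for first_click_correct, successful in records:
        totals[first_click_correct] += 1
        if successful:
            successes[first_click_correct] += 1
    return {key: successes[key] / totals[key] for key in totals}

rates = success_rate_by_first_click(records)
print(f"Correct first click:   {rates[True]:.0%} success")
print(f"Incorrect first click: {rates[False]:.0%} success")
```

With this toy data, three of the four tasks that started with a correct first click succeeded, against one of the four that started with an incorrect one. The same grouping applied to millions of tasks gives percentages of the kind reported above.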

To illustrate this, have a look at the results for one task. In the overall Task result, you see a score for success and directness, and a breakdown of whether each Success, Fail, or Skip was direct (they went straight to an answer) or indirect (they went back up the tree before they selected an answer):

In the pietree for the same task, you can look in more detail at how many people went the wrong way from a label (each label representing one page of your website):

In the First Click tab, you get a percentage breakdown of which label people clicked first to complete the task:

And in the Paths tab, you can view individual participant paths in detail (including first clicks), and can filter the table by direct and indirect success, fails, and skips (this table is only displaying direct success and direct fail paths):

How to get busy with first click testing

This analysis reinforces something we already knew: first clicks matter. It is worth your time to get that first impression right.

You have plenty of options for measuring the link between first clicks and task success in your scenario-based usability tests. From simply noting where your participants go during observations, to gathering quantitative first click data via online tools, you'll win either way. And if you want to add the latter to your research, Chalkmark can give you first click data on wireframes and landing pages, and Treejack on your information architecture.

To finish, here are a few invaluable insights from other researchers on getting the most from first click testing:

- Jeff Sauro details a useful approach to running a first click test, and shares the findings from a test he ran on 13 people.

- An article on Neoinsight describes three common usability problems that first click testing can solve.

- Gianna LaPin describes a first click test she ran on Netflix, VUDU, and Hulu Plus.
