Usability experts play an essential role in the user interface design process by evaluating the usability of digital products from a very important perspective - the users! Usability experts utilize various techniques such as heuristic evaluation, usability testing, and user research to gather data on how users interact with digital products and services. This data helps to identify design flaws and areas for improvement, leading to the development of user-friendly and efficient products.

Heuristic evaluation is a usability research technique used to evaluate the user interface design of a digital product against a set of ‘heuristics’ or ‘usability principles’. These heuristics are attributed to the landmark article “Improving a Human-Computer Dialogue”, published by web usability pioneers Jakob Nielsen and Rolf Molich in 1990. The principles focus on the experiential aspects of a user interface.

In this article, we’ll discuss what heuristic evaluation is and how usability experts use the principles to create exceptional design. We’ll also discuss how usability testing works hand-in-hand with heuristic evaluation, and how minimalist design and user control impact user experience. So, let’s dive in!

Understanding Heuristic Evaluation

Heuristic evaluation helps usability experts examine interface design against tried and tested rules of thumb. To conduct a heuristic evaluation, usability experts typically work through the interface of the digital product and note any issues or areas for improvement against each of the ten heuristics. Together, the heuristics cover the key areas of design that impact user experience - not bad for an article published over 30 years ago!

The ten principles are:

- Error prevention: Well-written error messages are good, but can the problems behind them be removed in the first place? Remove the opportunity for slips and mistakes to occur.

- Consistency and standards: Language, terms, and actions should be used consistently so that they don’t cause confusion.

- Control and freedom for users: Give your users the freedom and control to undo/redo actions and exit out of situations if needed.

- System status visibility: Let your users know what’s going on with the site. Is the page they’re on currently loading, or has it finished loading?

- Design and aesthetics: Cut out unnecessary information and clutter to enhance visibility. Keep things in a minimalist style.

- Help and documentation: Ensure that information is easy to find for users, isn’t too large and is focused on your users’ tasks.

- Recognition, not recall: Make sure that your users don’t have to rely on their memories. Instead, make options, actions and objects visible. Provide instructions for use too.

- Provide a match between the system and the real world: Does the system speak the same language and use the same terms as your users? If you use a lot of jargon, make sure that all users can understand by providing an explanation or using other terms that are familiar to them. Also ensure that all your information appears in a logical and natural order.

- Flexibility: Is your interface easy to use and flexible for users? Ensure your system can cater to users of all types, from experts to novices.

- Help users to recognize, diagnose and recover from errors: Your users should not feel frustrated by any error messages they see. Instead, express errors in plain, jargon-free language they can understand. Make sure the problem is clearly stated and offer a solution for how to fix it.

Heuristic evaluation is a cost-effective way to identify usability issues early in the design process (although it can be performed at any stage), leading to faster and more efficient design iterations. It also provides a structured approach to evaluating user interfaces, making it easier to identify usability issues. By providing valuable feedback on overall usability, heuristic evaluation helps to improve user satisfaction and retention.
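To make the structured approach a little more concrete, here is a minimal sketch of how an evaluator might log findings against the ten principles and count them per heuristic. It is only an illustration: the `Finding` fields, the `tally` function, and the short heuristic labels are our own assumptions, not part of any standard tool or of the original article.

```python
from dataclasses import dataclass

# Short labels for the ten principles listed above (labels are our own shorthand).
HEURISTICS = [
    "Error prevention",
    "Consistency and standards",
    "Control and freedom for users",
    "System status visibility",
    "Design and aesthetics",
    "Help and documentation",
    "Recognition, not recall",
    "Match between the system and the real world",
    "Flexibility",
    "Recognize, diagnose and recover from errors",
]


@dataclass
class Finding:
    """One issue noted during a walkthrough (hypothetical structure)."""
    heuristic: str  # must be one of HEURISTICS
    location: str   # screen or component where the issue was seen
    note: str       # the evaluator's description of the issue


def tally(findings: list[Finding]) -> dict[str, int]:
    """Count findings per heuristic, rejecting unknown heuristic names."""
    counts = {name: 0 for name in HEURISTICS}
    for finding in findings:
        if finding.heuristic not in counts:
            raise ValueError(f"Unknown heuristic: {finding.heuristic}")
        counts[finding.heuristic] += 1
    return counts


if __name__ == "__main__":
    findings = [
        Finding("Error prevention", "checkout", "No confirmation before deleting the cart"),
        Finding("System status visibility", "search", "No loading indicator while results load"),
    ]
    for name, count in tally(findings).items():
        print(f"{count:>2}  {name}")
```

A per-heuristic count like this can help a team see which principles an interface struggles with most, but it is a starting point for discussion rather than a score.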

The Role of Usability Experts in Heuristic Evaluation

Usability experts play a central role in the heuristic evaluation process by providing feedback on the usability of a digital product, identifying any issues or areas for improvement, and suggesting changes to optimize user experience.

One of the primary goals of usability experts during the heuristic evaluation process is to identify and prevent errors in user interface design. They achieve this by applying the principles of error prevention, such as providing clear instructions and warnings, minimizing the cognitive load on users, and reducing the chances of making errors in the first place. For example, they may suggest adding confirmation dialogs for critical actions, ensuring that error messages are clear and concise, and making the navigation intuitive and straightforward.

Usability experts also use user testing to inform their heuristic evaluation. User testing involves gathering data from users interacting with the product or service and observing their behavior and feedback. This data helps to validate the design decisions made during the heuristic evaluation and identify additional usability issues that may have been missed. For example, usability experts may conduct A/B testing to compare the effectiveness of different design variations, gather feedback from user surveys, and conduct user interviews to gain insights into users' needs and preferences.

Conducting user testing with participants who represent actual end users as closely as possible ensures that the product is optimized for its target audience. Check out our tool Reframer, which helps usability experts collaborate and record research observations in one central database.

Minimalist Design and User Control in Heuristic Evaluation

Minimalist design and user control are two key principles that usability experts focus on during the heuristic evaluation process. A minimalist design is one that is clean, simple, and focuses on the essentials, while user control refers to the extent to which users can control their interactions with the product or service.

Minimalist design is important because it allows users to focus on the content and tasks at hand without being distracted by unnecessary elements or clutter. Usability experts evaluate the level of minimalist design in a user interface by assessing the visual hierarchy, the use of white space, the clarity of the content, and the consistency of the design elements. Information architecture (the system and structure you use to organize and label content) has a massive impact here, along with the content itself being concise and meaningful.

Incorporating minimalist design principles into heuristic evaluation can improve the overall user experience by simplifying the design, reducing cognitive load, and making it easier for users to find what they need. Usability experts may incorporate minimalist design by simplifying the navigation and site structure, reducing the number of design elements, and removing any unnecessary content (check out our tool Treejack to conduct site structure, navigation, and categorization research). Consistent color schemes and typography can also help to create a cohesive and unified design.

User control is also critical in user interface design because it gives users the power to decide how they interact with the product or service. Usability experts evaluate the level of user control by looking at the design of the navigation, the placement of buttons and prompts, the feedback given to users, and the ability to undo actions. Again, usability testing plays an important role alongside heuristic evaluation by allowing researchers to see how users respond to the level of control provided, and to gather feedback on any potential hiccups or roadblocks.
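For readers curious what "the ability to undo actions" can look like behind the scenes, here is a small sketch of one common approach: keeping two stacks of earlier and later states. The `History` class and its method names are hypothetical and chosen for illustration; real products may implement undo and redo quite differently.

```python
class History:
    """Tracks states so a user can undo and redo their changes (illustrative only)."""

    def __init__(self, initial):
        self.current = initial
        self._undo_stack = []  # states the user can go back to
        self._redo_stack = []  # states the user can go forward to

    def apply(self, new_state):
        """Record a new state; a fresh change discards any redo history."""
        self._undo_stack.append(self.current)
        self.current = new_state
        self._redo_stack.clear()

    def undo(self):
        """Step back one state. Returns False when there is nothing to undo."""
        if not self._undo_stack:
            return False
        self._redo_stack.append(self.current)
        self.current = self._undo_stack.pop()
        return True

    def redo(self):
        """Step forward one state. Returns False when there is nothing to redo."""
        if not self._redo_stack:
            return False
        self._undo_stack.append(self.current)
        self.current = self._redo_stack.pop()
        return True


if __name__ == "__main__":
    draft = History("Hello")
    draft.apply("Hello, world")
    draft.undo()
    print(draft.current)
    draft.redo()
    print(draft.current)
```

Returning `False` instead of raising an error when there is nothing left to undo lets the interface simply disable the button, which keeps the user in control without surprising them.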

Usability Testing and Heuristic Evaluation

Usability testing and heuristic evaluation are both important components of the user-centered design process, and they complement each other in different ways.

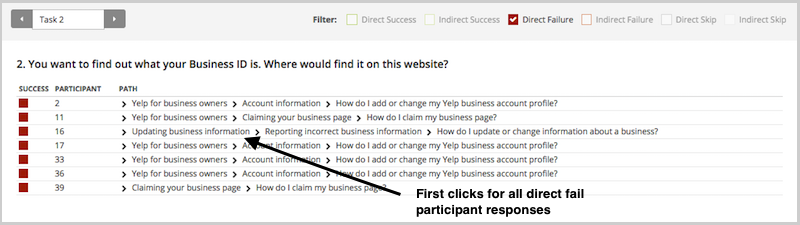

Usability testing involves gathering feedback from users as they interact with a digital product. This feedback can provide valuable insights into how users perceive and use the user interface design, identify any usability issues, and help validate design decisions. Usability testing can be conducted in different forms, such as moderated or unmoderated, remote or in-person, and task-based or exploratory. Check out our usability testing 101 article to learn more.

On the other hand, heuristic evaluation is a method in which usability experts evaluate a product against a set of usability principles. While heuristic evaluation is a useful method to quickly identify usability issues and areas for improvement, it does not involve direct feedback from users.

Usability testing can be used to validate heuristic evaluation findings by providing evidence of how users interact with the product or service. For example, if a usability expert identifies a potential usability issue related to the navigation of a website during heuristic evaluation, usability testing can be used to see if users actually have difficulty finding what they need on the website. In this way, usability testing provides a reality check to the heuristic evaluation and helps ensure that the findings are grounded in actual user behavior.

Usability testing and heuristic evaluation work together in the design process by informing and validating each other. For example, a designer may conduct heuristic evaluation to identify potential usability issues and then use the insights gained to design a new iteration of the product or service. The designer can then use usability testing to validate that the new design has successfully addressed the identified usability issues and improved the user experience. This iterative process of designing, testing, and refining based on feedback from both heuristic evaluation and usability testing leads to a user-centered design that is more likely to meet user needs and expectations.

Conclusion

Heuristic evaluation is a powerful usability research technique that usability experts use to evaluate digital product interfaces based on a set of established principles of user experience design. After all these years, the ten principles of heuristic evaluation still cover the key areas of design that impact user experience, making it easier to identify usability issues early in the design process, leading to faster and more efficient design iterations. Usability experts play a critical role in the heuristic evaluation process by identifying design flaws and areas for improvement, using user testing to validate design decisions, and ensuring that the product is optimized for its intended users.

Minimalist design and user control are two key principles that usability experts focus on during the heuristic evaluation process. A minimalist design is clean, simple, and focuses on the essentials, while user control gives users the freedom and control to undo/redo actions and exit out of situations if needed. By following these principles, usability experts can create an exceptional design that enhances visibility, reduces cognitive load, and provides a positive user experience.

Ultimately, heuristic evaluation is a cost-effective way to identify usability issues at any point in the design process, leading to faster and more efficient design iterations, and improving user satisfaction and retention. How many of the ten heuristic design principles does your digital product satisfy?