Mobile app testing with users before, during and beyond the design process is essential to ensuring product success. As UX designers we know how important usability is for interaction design, but testing early and often on mobile can sometimes be a challenge. This is where usability testing tools like Chalkmark (our first-click testing tool) can make a big difference.

First-click testing on mobile apps allows you to rapidly test ideas and ensure your design supports user goals before you invest time and money in further design work and development. It helps you determine whether you’re on the right track and whether your users are too — people are 2 to 3 times as likely to successfully complete their task if they got their first click right.

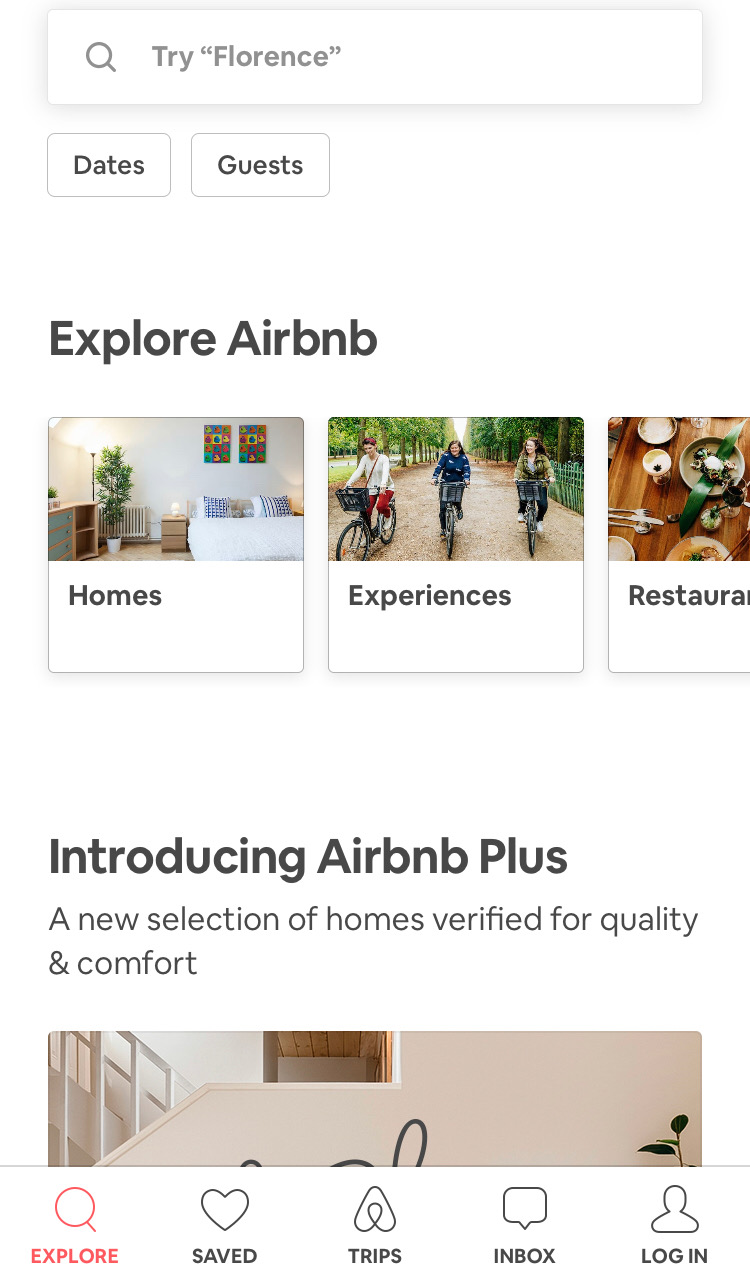

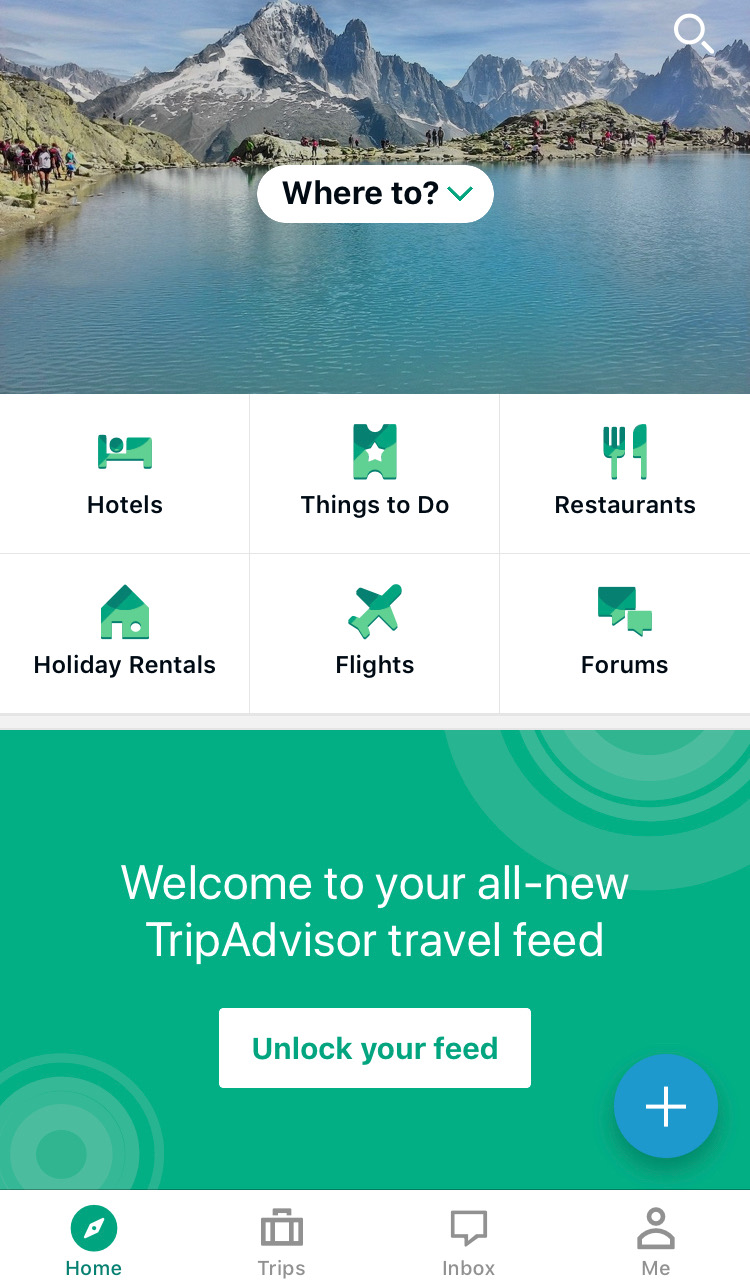

Read on for our top tips for mobile testing with Chalkmark shared through an example of a study we recently ran on Airbnb and TripAdvisor’s mobile apps.

Planning your mobile testing approach: remote or in person

There are two ways you might approach mobile app testing with Chalkmark: remotely or in person. Chalkmark is great for remote testing because the study is shared via a link, so you can gain insights quickly and reach people anywhere in the world. You might recruit participants via your social networks or email lists, or you could use a recruitment service to target specific groups of people. The tool is also flexible enough to work just as well for moderated and in-person research studies. You might pop your study onto a mobile device and hit the streets for some guerrilla testing, or you might incorporate it into a usability testing session that you’ve already got planned. There’s no right or wrong way to do it. It really depends on the needs of your project and the resources you have available.

For our Airbnb and TripAdvisor mobile app study example, we decided to test remotely and recruited 30 US-based participants through the Optimal Workshop recruitment service.

Getting ready to test

Chalkmark works by presenting participants with a real-world, scenario-based task and asking them to complete it simply by clicking on a static image of a design. That image could be anything from a rough sketch of an idea, to a wireframe, to a screenshot of your existing product. If you can create an image of something you’d like to gather your users’ first impressions on, you can Chalkmark it.

To build your study, all you have to do is upload your testing images and come up with some tasks for your participants to complete. Think about the most common tasks a user would need to complete while using your app and base your mobile testing tasks around those. For our Airbnb and TripAdvisor study, we decided to use 3 tasks for each app and tested both mobile apps together in one study to save time. Task order was randomized to reduce bias and we used screenshots from the live apps for testing.
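Chalkmark takes care of randomizing task order for you, but if it helps to picture what that means per participant, here is a minimal sketch. The task labels and the `task_order_for` helper are our own hypothetical names for illustration, not part of Chalkmark, and this is not a description of how the tool does it internally.

```python
import random

# Hypothetical labels for the six tasks in this study (not Chalkmark identifiers).
TASKS = [
    "airbnb_experiences",
    "airbnb_plus",
    "airbnb_restaurants",
    "tripadvisor_forums",
    "tripadvisor_holiday_rentals",
    "tripadvisor_things_to_do",
]


def task_order_for(participant_id: int, tasks: list[str] = TASKS) -> list[str]:
    """Return a shuffled copy of the tasks for one participant.

    Seeding with the participant id is an assumption made here so that
    the same participant always gets the same order if you re-run it.
    """
    rng = random.Random(participant_id)
    order = list(tasks)  # copy so the master list is never mutated
    rng.shuffle(order)
    return order


if __name__ == "__main__":
    for participant in range(1, 4):
        print(participant, task_order_for(participant))
```

Because every participant sees the tasks in a different order, no single task is always seen first or last, so learning and fatigue effects are spread across the whole set.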

For Airbnb, we focused our mobile testing efforts on the three main areas of their service offering: Homes, Experiences and Restaurants. We wanted to see if people understood the images and labels used and also if there were any potential issues with the way Airbnb presents these three options as horizontally scrollable tiles where the third one is only partially shown in that initial glance.

For TripAdvisor, we were curious to see if the image-only icons on the sticky global navigation menu that appears when the page is scrolled made sense to users. We chose three of these icons to test: Holiday Rentals, Things To Do and Forums.

Our Chalkmark study had a total of 6 tasks — 3 for each app — and we tested both mobile apps together to save time.

Our tasks for this study were:

1. You’ll be spending the holidays with your family in Montreal this year and a friend has recommended you book yourself into an axe throwing workshop during your trip. Where would you go to do this? (Airbnb)

2. You’ve heard that Airbnb has a premium range of places to stay that have been checked by their team to ensure they’re amazing. Where would you go to find out more? (Airbnb)

3. You’re staying with your parents in New York for the week and would like to surprise them by taking them out to dinner but you’re not sure where to take them. Where would you go to look for inspiration? (Airbnb)

4. You’re heading to New Zealand next month and have so many questions about what it’s like! You’d love to ask the online community of locals and other travellers about their experiences. Where would you go to do this? (TripAdvisor)

5. You’re planning a trip to France and would prefer to enjoy Paris from a privately owned apartment instead of a hotel. Where would you go to find out what your options are? (TripAdvisor)

6. You’re currently on a working holiday in Melbourne and you find yourself with an unexpected day off. You’re looking for ideas for things to do. Where would you go to find something like this? (TripAdvisor)

All images used for testing were the size of a single phone screen because we wanted to see if participants could find their way without needing to scroll. As with everything else, you don’t have to do it this way: you could make the image longer and test a larger section of your design, or you could focus on a smaller section. As a testing tool, Chalkmark is flexible and scalable.

We also put a quickly mocked-up frame that loosely resembled a smartphone around each image. Without it, the image looked like part of it had been cropped out, which could have been very distracting for participants! The frame also provided context that we were testing a mobile app.

Making sense of Chalkmark results data

Chalkmark makes it really easy to make sense of your research through clickmaps and some really handy task results data. These 2 powerful analysis features provide a well-rounded and easy to digest picture of where those valuable first clicks landed so that you can evolve your design quickly and confidently.

A clickmap is a visualization of where your participants clicked on your testing image during the study. It has different views showing heatmaps and actual click locations so you can see exactly where they fell. Clickmaps help you to understand if your participants were on the right track or, if they weren’t, where they went instead.

The task results tab in Chalkmark shows how successful your participants were and how long it took them to complete the task. To use the task results functionality, all you have to do is set the correct clickable areas on the images you tested with: just click and drag, and give each correct area a meaningful name that will then appear alongside the rest of the task results. You can do this during the build process or anytime after the study has been completed. This is very useful if you happen to forget something or are waiting on someone else to get back to you while you set up the test!
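To make the idea of "correct areas" concrete, here is a rough sketch of the arithmetic behind a success rate and a median completion time. The `Area`, `Click` and `summarize` names are hypothetical, the coordinates and timings are invented, and we are assuming rectangular areas measured in image pixels. It illustrates the concept rather than how Chalkmark calculates its results.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class Area:
    """A named rectangular 'correct' region on the test image (pixels)."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        # Edges count as inside the area.
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)


@dataclass(frozen=True)
class Click:
    """One participant's first click and how long they took to make it."""
    x: float
    y: float
    seconds: float


def summarize(clicks: list[Click], correct_areas: list[Area]) -> dict:
    """Share of clicks landing in any correct area, plus median time."""
    if not clicks:
        raise ValueError("need at least one click to summarize")
    hits = [c for c in clicks
            if any(a.contains(c.x, c.y) for a in correct_areas)]
    return {
        "success_rate": len(hits) / len(clicks),
        "median_seconds": median(c.seconds for c in clicks),
    }


if __name__ == "__main__":
    # Invented data for illustration only.
    areas = [Area("Experiences tile", x=100, y=200, width=150, height=100)]
    clicks = [Click(120, 250, 4.0), Click(10, 10, 6.0), Click(240, 290, 5.0)]
    print(summarize(clicks, areas))
```

Keeping the areas separate from the clicks mirrors the point above: you can define or adjust what counts as correct after the data has been collected, and the same clicks are simply scored again.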

For our Airbnb and TripAdvisor study, we set the correct areas on the navigational elements (the tiles, the icons, etc.) and excluded search. While searching for something isn’t necessarily incorrect, we wanted to see if people could find their way by navigating.

For Airbnb, we discovered that 83% of our participants were able to correctly identify where they would need to go to book themselves into an axe throwing workshop. With a median task completion time of 4.89 seconds, this task also had the quickest completion time in the entire study. These findings suggest that the label and image used for the ‘Experiences’ section of the app are working quite well.

We also found that 80% of participants were able to find where they’d need to go to access Airbnb Plus. Participants had two options and could go via the ‘Homes’ tile (33%) or through the ‘Introducing Airbnb Plus’ image (47%) further down. Of the remaining participants, 10% clicked on the ‘Introducing Airbnb Plus’ heading, but at the time of testing this area was not clickable. It’s not a huge deal because these participants were on the right track and would likely have found the right spot to click fairly quickly anyway. It’s just something to consider around user expectations, and making that heading clickable might be worth exploring further.

83% of our participants were able to figure out where to go to find a great restaurant on the Airbnb app which is awesome! An additional 7% would have searched for it which isn’t wrong, but remember, we were testing those navigational tiles. It’s interesting to note that most people selected the tiles — likely indicating they felt they were given enough information to complete the task without needing to search.

For our TripAdvisor tasks, we uncovered some very interesting and actionable insights. We found that 63% of participants were able to correctly identify the ‘Forums’ icon as the place to go for advice from other community members. While 63% is a good result, it does indicate some room for improvement and the possibility that the ‘Forums’ icon might not be resonating with users as well as it could be. For the remaining participants, 10% clicked on ‘Where to?’ which prompts the user to search for specific locations while 7% clicked on the more general search option that would allow them to search all the content on the app.
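With a small study it can help to translate percentages back into people. As a rough sketch, and assuming all 30 recruited participants completed the task (so 63% is 19 of 30), a Wilson score interval gives a feel for how much a result like this could move with a different group of participants. The interval is a standard statistical formula that we are adding for illustration. It is not something Chalkmark reports, and `wilson_interval` is our own helper name.

```python
import math


def wilson_interval(successes: int, total: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion (z = 1.96 is roughly 95%)."""
    if total <= 0 or not 0 <= successes <= total:
        raise ValueError("need 0 <= successes <= total and total > 0")
    p = successes / total
    denom = 1 + z**2 / total
    centre = (p + z**2 / (2 * total)) / denom
    half_width = z * math.sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return centre - half_width, centre + half_width


if __name__ == "__main__":
    # Assumption: 19 of 30 participants chose the 'Forums' icon.
    low, high = wilson_interval(19, 30)
    print(f"{low:.0%} to {high:.0%}")
```

Under those assumptions the range works out to roughly 46% to 78%, which is a useful reminder that first-click results from 30 people point you in a direction rather than giving you a precise number.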

63% of participants were able to correctly identify the ‘Holiday Rentals’ icon on the TripAdvisor app when looking for a privately owned apartment rather than a hotel to enjoy Paris from, while 20% of participants appear to have been tripped up by the ‘Hotel’ icon itself.

With 1 in 5 people in this study potentially not being able to distinguish between or determine the meaning behind each of the 2 icons, this is something that might merit further exploration. In another one of the TripAdvisor app’s tasks in this study, 43% of participants were unable to correctly identify the ‘Things To Do’ icon as a place to find inspiration for activities.

Where to from here?

If this were your project, you might look at running a quick study to see what people think each of the 6 icons represents. You could slip it into some existing moderated research you had planned or you might run a quick image card sort to see what your users would expect each icon to relate to. Running a study testing all 6 at the same time would allow you to gain insights into how users perceive the icons quickly and efficiently.

Overall, both of these apps tested very well in this study and with a few minor tweaks and iterations that are part of any design process, they could be even better!

Now that you’ve seen an example of mobile testing in Chalkmark, why not try it out for yourself with your app? It’s fast and easy to run and we have lots of great resources to help you on your way including sample studies that allow you to interactively explore both the participant’s and the researcher’s perspective.

Further reading

- Create and analyze a first-click test for free
- View a first-click test as a participant
- View first-click test results as a researcher
- Read our first-click testing 101 guide
- Read more case studies and research stories to see first-click testing in action

Originally published on 29 March 2019