Optimal Blog

Articles and Podcasts on Customer Service, AI and Automation, Product, and more

At Optimal, we know the reality of user research: you've just wrapped up a fantastic interview session, your head is buzzing with insights, and then... you're staring at hours of video footage that somehow needs to become actionable recommendations for your team.

User interviews and usability sessions are treasure troves of insight, but reviewing hours of raw footage is time-consuming and tedious, and important details are easy to overlook. Too often, valuable user stories never make it past the recording stage.

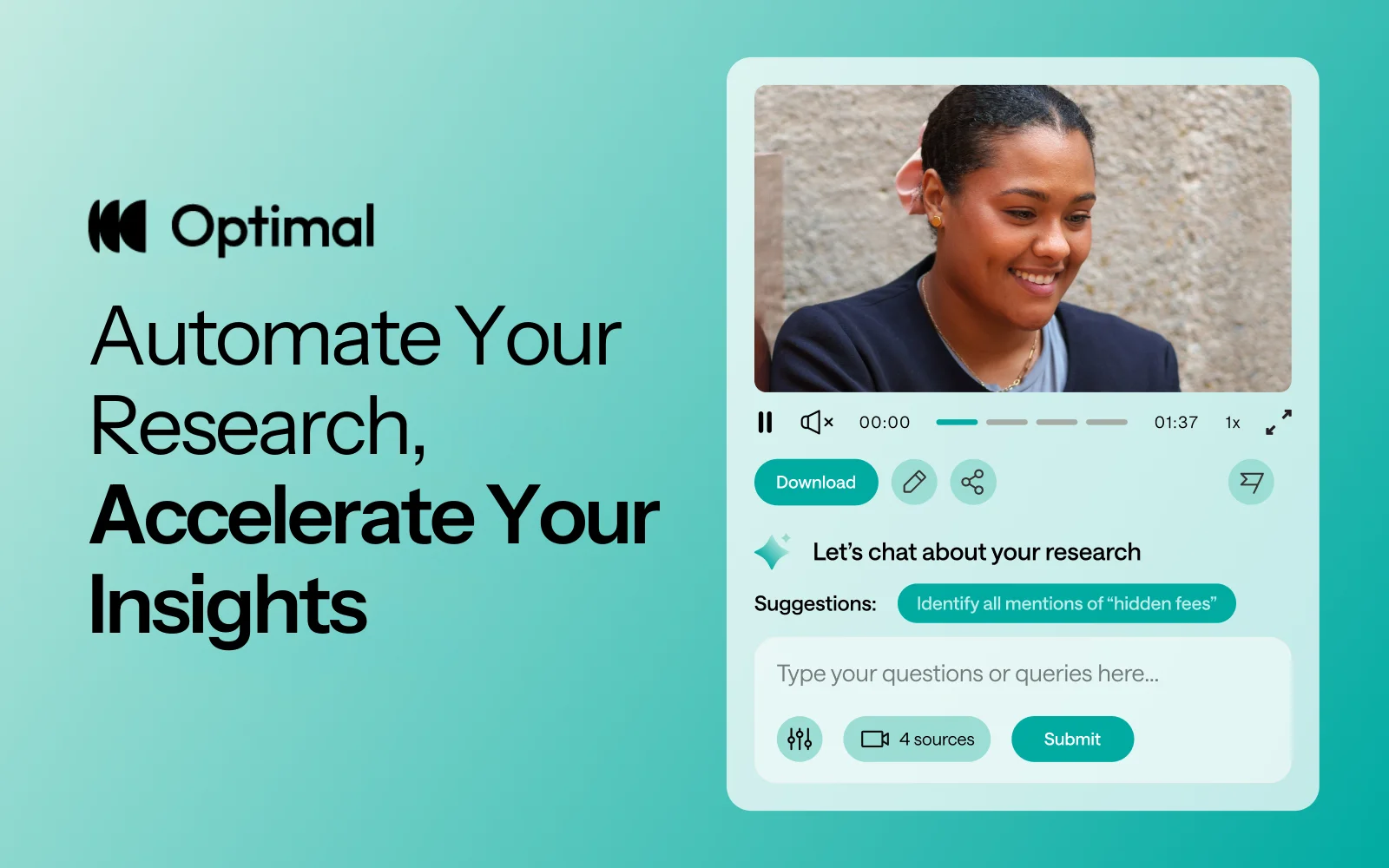

That's why we’re excited to announce the launch of Interviews, a brand-new tool that saves you time with AI and automation, turns real user moments into actionable recommendations, and provides the evidence you need to shape decisions, bring stakeholders on board, and inspire action.

Interviews, Reimagined

We surveyed more than 100 researchers, designers, and product managers, conducted discovery interviews, tested prototypes, and ran feedback sessions to help guide the discovery and development of Optimal Interviews.

The result? What once took hours of video review now takes minutes. With Interviews, you get:

- Instant clarity: Upload your interviews and let AI automatically surface key themes, pain points, opportunities, and other key insights.

- Deeper exploration: Ask follow-up questions, or anything else, with AI chat. Every insight comes with supporting video evidence, so you can back up recommendations with real user feedback.

- Automatic highlight reels: Generate clips and compilations that spotlight the takeaways that matter.

- Real user voices: Turn insight into impact with user feedback clips and videos. Share insights and download clips to drive product and stakeholder decisions.

Groundbreaking AI at Your Service

This tool is powered by AI designed for researchers, product owners, and designers. This isn’t just transcription or summarization; it’s intelligence tailored to surface the insights that matter most. It’s like having a personal AI research assistant, accelerating analysis and automating your workflow without compromising quality. No more endless footage scrolling.

The AI used for Interviews, as well as all other AI in Optimal, is backed by Amazon Bedrock on AWS, ensuring that your AI insights are supported with industry-leading protection and compliance.

Evolving Optimal Interviews

A big thank you to our early access users! Your feedback helped us focus on making Optimal Interviews even better. Here's what's new:

- Speed and easy access to insights: More video clips, instant download, and bookmark options to make sharing findings faster than ever.

- Privacy: Disable video playback while still extracting insights from transcripts and get PII redaction for English audio alongside transcripts and insights.

- Trust: Our enhanced, best-in-class AI chat experience lets teams explore patterns and themes confidently.

- Expanded study capability: You can now upload up to 20 videos per Interviews study.

What’s Next: The Future of Moderated Interviews in Optimal

This new tool is just the beginning. Our vision is to help you manage the entire moderated interview process inside Optimal, from recruitment to scheduling to analysis and sharing.

Here’s what’s coming:

- View your scheduled sessions directly within Optimal. Link up with your own calendar.

- Connect seamlessly with Zoom, Google Meet, or Teams.

Imagine running your full end-to-end interview workflow, all in one platform. That’s where we’re heading, and Interviews is our first step.

Ready to Explore?

Interviews is available now for our latest Optimal plans with study limits. Start transforming your footage into minutes of clarity and bring your users’ voices to the center of every decision. We can’t wait to see what you uncover.

CRUX #4: fresh thinking from the world of UX

As the fourth issue of CRUX goes to press, the demand for usability continues to grow, along with the rise of the digital experience economy. Sharing a sense of community among UXers is more important than ever. That’s why we’re so proud to bring you our latest issue of CRUX, celebrating people and perspectives from the UX community.

CRUX #4 has a great line-up of contributors, all experts in their fields who jumped at the opportunity to share their thoughts and ideas with us - and of course more importantly - you.

This issue we’ve focussed on collaboration and invited our contributors to bring their thoughts and ideas on communication and teamwork to the table. They’ve come up with some compelling reading that inspires, surprises and at times challenges current thinking and offers fresh perspectives.

Some highlights from this issue

- Seasoned researcher Josh Morales from Hotjar tackles the challenge of presenting research effectively with a smart yet simple framework.

"In a word: your findings virtually do not exist if you don’t communicate them well."

- Designer Jordan Bowman of UX Tools ponders the problem of cognitive bias and highlights the key things to look out for.

“Designers are just as vulnerable to the blindspots and errors of cognitive bias as the people who use our products. After all, we're humans, too.”

- UX consultant Eugenia Jongewaard from UX Tips talks about inclusive research and challenges UX researchers and designers to up their game.

“As we live in a world of rapid digital transformation we can't continue to design in the same way we designed before. We should start designing for inclusion. For that to happen we need to shift our mindset towards inclusive research.”

- And much, much more….

A plug for the next issue

Do you have a burning idea to share or a conversation you’re dying to kickstart that’s of interest to the world of UX? Now’s your chance. We’re already on the lookout for contributors for our first edition of CRUX for 2022. To find out more, please drop us a line.

But for now, get comfortable and settle in for a good read. Welcome to CRUX #4.

Is your SaaS tech stack secure?

Having access to the specialist subscription-based tools you need to do your work is a reasonable thing to expect. But what if you’re relying on someone else’s SaaS account to access what you need? Sounds like a good solution but think again. It’s risky - even fraught. Here are 3 good reasons to avoid shared login credentials and why you need your own.

1. Safety first - sharing account login credentials is a risky business 🔐

If you don’t know who’s signed up and using the subscriptions your organization pays for and holds, how can you protect their data once they’ve gone? As the account holder, you’re responsible for keeping the personal data of anyone accessing your subs safe and secure. That’s not only the right thing to do - it’s pretty important from a legal perspective too.

In today’s data-driven world, safeguards around privacy and security are essential. You only need to look at the fallout from serious data breaches around the world to see the damage they can do. There’s a myriad of privacy laws around personal data out there, but they’re based on the universal principle of protecting personal data. One of the better-known laws is GDPR, the EU’s data protection law.

The General Data Protection Regulation (GDPR) regulates and protects the processing of the personal information of EU citizens and residents by establishing rules on how organizations such as companies and governments can process this personal data. It’s important to note the GDPR applies to those handling the data whether they’re EU-based organizations or not.

Avoid encouraging shared logins in your organization to ensure peace of mind that you’re doing everything you can to keep people’s personal data safe and secure - as well as keeping on the right side of the law.

2. Ease of administration - save time and energy managing multiple users 🎯

Having single logins rather than shared logins saves time and energy and makes the whole administration smoother and easier for everyone.

For instance, maybe you need to delete data as part of honoring GDPR rules. This could be tricky and time-consuming if there are multiple users on one email, as a generic email isn’t specific to a person.

Generic email addresses also make it harder for SaaS providers to understand your account activity and implement the changes you want or need. For example, customers often ask to retrieve information for account billing. Having multiple employees using a single login can make this problematic. It can be a real struggle to identify the right owners or users.

And if the ‘champion’ of the tool leaves your organization and you want to retrieve information on the account, your SaaS provider won't be able to do this without proof you’re the real owner of this account.

Another added benefit of having a personal login (which your IT and security team will thank you for) is the way it makes setting up functionality such as single sign-on (SSO) so easy. Given the way single sign-on works, shared emails just don’t cut it anymore. Also, if your organization uses SSO, you’ll be able to log into tools more quickly and easily.

3. Product support - access it when you need it 🙏

When things go wrong, or you just need help using products or tools from your friendly SaaS provider, it’s important for them and for you that they’re in the best position to support you. Supporting people is a big part of the job, and generic emails make it harder to connect with customers and create the people-to-people relationships that enable the best outcome when problems arise or when training or help is needed.

You may be surprised to hear what a blocker multiple users on a single email can be. For instance, generic email addresses can make it harder for us to get to the right person and communicate with you. We won’t know if you have another email active in the system we can use to help you.

Wrap up 🌯

We’ve given you 3 good reasons not to account share. Still need convincing?

What about getting the right plan to meet your organization’s needs - so you don’t need to share in the first place? There could be all kinds of reasons why you’ve ended up having to account share: maybe a workmate signed up, shared it, and got you hooked too. Or your organization has grown and you need more subs. Whatever the reason there’s no need to account share - get in touch and sound us out to find a better, safer solution.

How to test mobile apps with Chalkmark

Mobile app testing with users before, during and beyond the design process is essential to ensuring product success. As UX designers we know how important usability is for interaction design, but testing early and often on mobile can sometimes be a challenge. This is where usability testing tools like Chalkmark (our first-click testing tool) can make a big difference.

First-click testing on mobile apps allows you to rapidly test ideas and ensure your design supports user goals before you invest time and money in further design work and development. It helps you determine whether you’re on the right track and whether your users are too — people are 2 to 3 times as likely to successfully complete their task if they got their first click right.

Read on for our top tips for mobile testing with Chalkmark shared through an example of a study we recently ran on Airbnb and TripAdvisor’s mobile apps.

Planning your mobile testing approach: remote or in person

There are 2 ways that you might approach mobile app testing with Chalkmark: remotely or in person. Chalkmark is great for remote testing because it allows you to gain insights quickly as well as reach people anywhere in the world, as the study is simply shared via a link. You might recruit participants via your social networks or email lists, or you could use a recruitment service to target specific groups of people. The tool is also flexible enough to work just as well for moderated and in-person research studies. You might pop your study onto a mobile device and hit the streets for some guerrilla testing, or you might incorporate it into a usability testing session that you’ve already got planned. There’s no right or wrong way to do it: it really depends on the needs of your project and the resources you have available.

For our Airbnb and TripAdvisor mobile app study example, we decided to test remotely and recruited 30 US based participants through the Optimal Workshop recruitment service.

Getting ready to test

Chalkmark works by presenting participants with a real-world scenario based task and asking them to complete it simply by clicking on a static image of a design. That image could be anything from a rough sketch of an idea, to a wireframe, to a screenshot of your existing product. Anything that you would like to gather your user’s first impressions on — if you can create an image of it, you can Chalkmark it.

To build your study, all you have to do is upload your testing images and come up with some tasks for your participants to complete. Think about the most common tasks a user would need to complete while using your app and base your mobile testing tasks around those. For our Airbnb and TripAdvisor study, we decided to use 3 tasks for each app and tested both mobile apps together in one study to save time. Task order was randomized to reduce bias and we used screenshots from the live apps for testing.
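To illustrate what randomizing task order per participant means, here is a minimal Python sketch. It is not how Chalkmark implements randomization; the task labels, the `task_order_for` helper and the idea of seeding by a participant number are all assumptions made for the example.

```python
import random

# Hypothetical short labels for the 6 tasks (3 per app) in a study like this one.
TASKS = [
    "Airbnb task 1",
    "Airbnb task 2",
    "Airbnb task 3",
    "TripAdvisor task 1",
    "TripAdvisor task 2",
    "TripAdvisor task 3",
]


def task_order_for(participant_id: int, tasks: list[str]) -> list[str]:
    """Return a shuffled copy of the tasks for one participant.

    Seeding with the participant id (an assumption for this sketch) makes
    each participant's order reproducible without touching the global RNG.
    """
    rng = random.Random(participant_id)
    order = list(tasks)  # copy so the original list is left untouched
    rng.shuffle(order)
    return order


if __name__ == "__main__":
    for pid in range(1, 4):
        print(pid, task_order_for(pid, TASKS))
```

Because every participant sees the same tasks in a different sequence, any effect of one task on the next is spread across the whole set rather than always landing on the same task.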

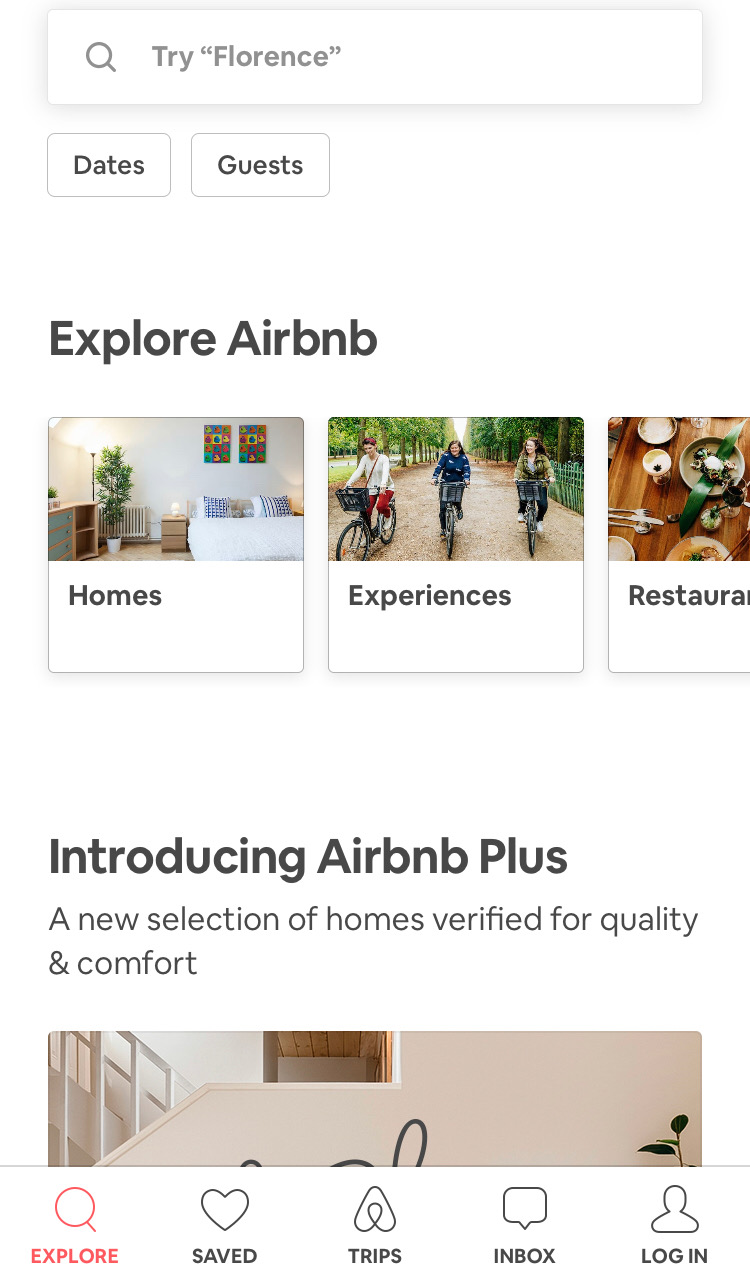

For Airbnb, we focused our mobile testing efforts on the three main areas of their service offering: Homes, Experiences and Restaurants. We wanted to see if people understood the images and labels used and also if there were any potential issues with the way Airbnb presents these three options as horizontally scrollable tiles where the third one is only partially shown in that initial glance.

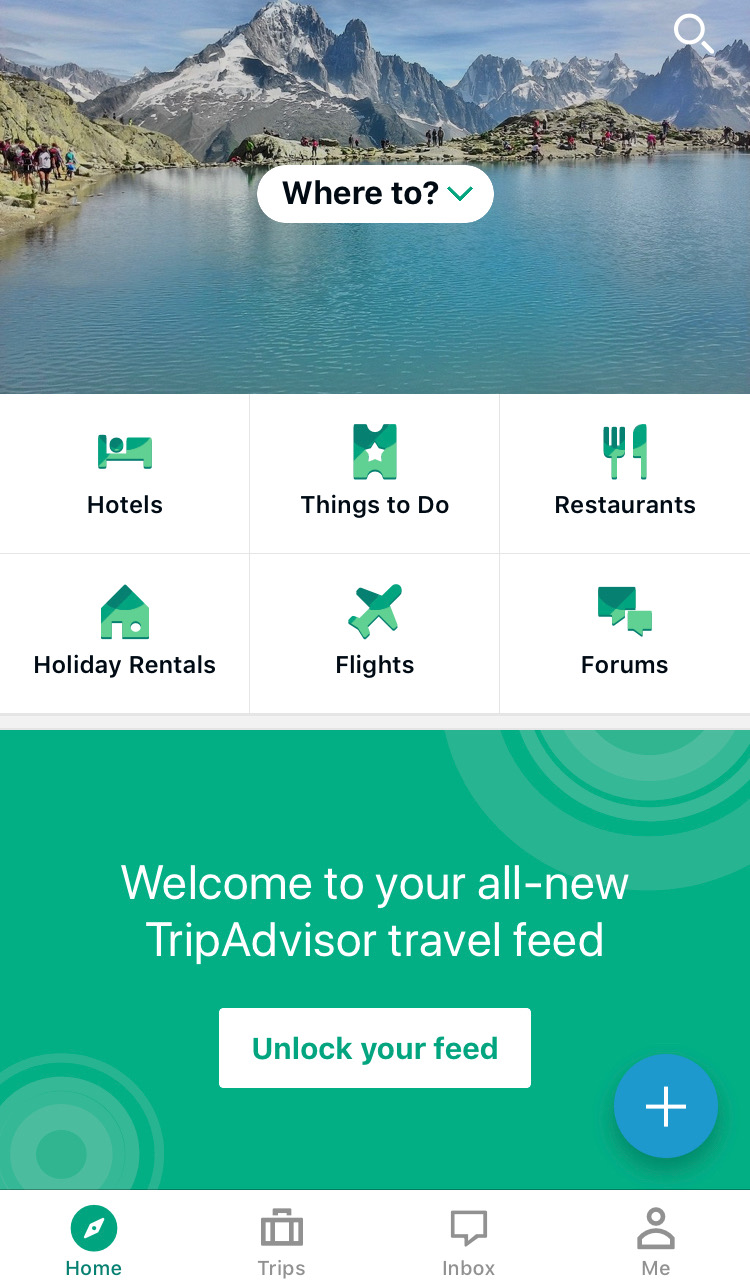

For TripAdvisor, we were curious to see if the image-only icons on the sticky global navigation menu that appears when the page is scrolled made sense to users. We chose three of these icons to test: Holiday Rentals, Things To Do and Forums.

Our Chalkmark study had a total of 6 tasks — 3 for each app — and we tested both mobile apps together to save time.

Our tasks for this study were:

1. You’ll be spending the holidays with your family in Montreal this year and a friend has recommended you book yourself into an axe throwing workshop during your trip. Where would you go to do this? (Airbnb)

2. You’ve heard that Airbnb has a premium range of places to stay that have been checked by their team to ensure they’re amazing. Where would you go to find out more? (Airbnb)

3. You’re staying with your parents in New York for the week and would like to surprise them by taking them out to dinner but you’re not sure where to take them. Where would you go to look for inspiration? (Airbnb)

4. You’re heading to New Zealand next month and have so many questions about what it’s like! You’d love to ask the online community of locals and other travellers about their experiences. Where would you go to do this? (TripAdvisor)

5. You’re planning a trip to France and would prefer to enjoy Paris from a privately owned apartment instead of a hotel. Where would you go to find out what your options are? (TripAdvisor)

6. You’re currently on a working holiday in Melbourne and you find yourself with an unexpected day off. You’re looking for ideas for things to do. Where would you go to find something like this? (TripAdvisor)

All images used for testing were the size of a single phone screen because we wanted to see if participants could find their way without needing to scroll. As with everything else, you don’t have to do it this way: you could make the image longer and test a larger section of your design or you could focus on a smaller section. As a testing tool, Chalkmark is flexible and scalable.

We also put a quickly mocked up frame around each image that loosely resembled a smart phone because without it, the image looked like part of it had been cropped out which could have been very distracting for participants! This frame also provided context that we were testing a mobile app.

Making sense of Chalkmark results data

Chalkmark makes it really easy to make sense of your research through clickmaps and some really handy task results data. These 2 powerful analysis features provide a well-rounded and easy to digest picture of where those valuable first clicks landed so that you can evolve your design quickly and confidently.

A clickmap is a visualization of where your participants clicked on your testing image during the study. It has different views showing heatmaps and actual click locations so you can see exactly where they fell. Clickmaps help you to understand if your participants were on the right track or, if they weren’t, where they went instead.

The task results tab in Chalkmark shows how successful your participants were and how long it took them to complete the task. To utilize the task results functionality, all you have to do is set the correct clickable areas on the images you tested with: just click and drag and give each correct area a meaningful name that will then appear alongside the rest of the task results. You can do this during the build process or anytime after the study has been completed. This is very useful if you happen to forget something or are waiting on someone else to get back to you while you set up the test!
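The idea behind correct areas and task results can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Chalkmark's implementation or export format: the `Area` and `Click` classes, the pixel coordinates and the `summarize` function are all hypothetical. Each first click is checked against the named rectangles, and the success rate and median time are computed from the result.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Area:
    """A named rectangular 'correct area' drawn on the test image (hypothetical)."""
    name: str
    left: float
    top: float
    width: float
    height: float

    def contains(self, x: float, y: float) -> bool:
        return (self.left <= x <= self.left + self.width
                and self.top <= y <= self.top + self.height)


@dataclass
class Click:
    """One participant's first click and the time taken to make it (hypothetical)."""
    x: float
    y: float
    seconds: float


def summarize(clicks: list[Click], correct_areas: list[Area]) -> dict:
    """Return the success rate, median time and per-area hit counts for one task."""
    if not clicks:
        raise ValueError("at least one click is required")
    hits = {area.name: 0 for area in correct_areas}
    successes = 0
    for click in clicks:
        for area in correct_areas:
            if area.contains(click.x, click.y):
                hits[area.name] += 1
                successes += 1
                break  # count each click against at most one area
    return {
        "success_rate": successes / len(clicks),
        "median_seconds": median(c.seconds for c in clicks),
        "hits_by_area": hits,
    }


if __name__ == "__main__":
    # Made-up coordinates and timings, purely for illustration.
    areas = [Area("Experiences tile", left=120, top=300, width=100, height=140)]
    clicks = [
        Click(150, 350, 4.2),
        Click(40, 40, 9.0),
        Click(200, 420, 5.1),
        Click(130, 310, 3.8),
    ]
    print(summarize(clicks, areas))
```

Because the areas are only consulted when summarizing, the same clicks can be re-scored after the study if a correct area is added or renamed later, which mirrors being able to set correct areas after the study has finished.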

For our Airbnb and TripAdvisor study, we set the correct areas on the navigational elements (the tiles, the icons etc) and excluded search. While searching for something isn’t necessarily incorrect, we wanted to see if people could find their way by navigating. For Airbnb, we discovered that 83% of our participants were able to correctly identify where they would need to go to book themselves into an axe throwing workshop. With a median task completion time of 4.89 seconds, this task also had the quickest completion time in the entire study. These findings show that the label and image being used for the ‘Experiences’ section of the app appears to be working quite well.

We also found that 80% of participants were able to find where they’d need to go to access Airbnb Plus. Participants had two options and could go via the ‘Homes’ tile (33%) or through the ‘Introducing Airbnb Plus’ image (47%) further down. Of the remaining participants, 10% clicked on the ‘Introducing Airbnb Plus’ heading, however at the time of testing, this area was not clickable. It’s not a huge deal because these participants were on the right track and would have likely found the right spot to click fairly quickly anyway. It’s just something to consider around user expectations and perhaps making that heading clickable might be worth exploring further.

83% of our participants were able to figure out where to go to find a great restaurant on the Airbnb app which is awesome! An additional 7% would have searched for it which isn’t wrong, but remember, we were testing those navigational tiles. It’s interesting to note that most people selected the tiles — likely indicating they felt they were given enough information to complete the task without needing to search.
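With a sample of 30, it can help to translate the Airbnb percentages above back into approximate head counts. The short Python sketch below does that arithmetic. It assumes all 30 recruited participants completed every task, which the write-up does not state explicitly; the labels and the `to_count` helper are made up for the example.

```python
PARTICIPANTS = 30  # recruited for this study; assumed to have completed every task

# Percentages as reported in the results for the three Airbnb tasks.
reported = {
    "Experiences (axe throwing)": 83,
    "Airbnb Plus via 'Homes' tile": 33,
    "Airbnb Plus via 'Introducing Airbnb Plus' image": 47,
    "Airbnb Plus heading (not clickable)": 10,
    "Restaurants": 83,
    "Restaurants via search": 7,
}


def to_count(percent: float, n: int = PARTICIPANTS) -> int:
    """Convert a rounded percentage back to the nearest whole number of people."""
    return round(percent * n / 100)


if __name__ == "__main__":
    for label, pct in reported.items():
        print(f"{label}: {pct}% is about {to_count(pct)} of {PARTICIPANTS}")
    # The two correct routes to Airbnb Plus add up to the overall success figure.
    print(to_count(33) + to_count(47), "of", PARTICIPANTS, "found Airbnb Plus")
```

With 30 people, each participant accounts for roughly 3.3 percentage points, so a gap of a few points between two results may be a difference of a single click.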

For our TripAdvisor tasks, we uncovered some very interesting and actionable insights. We found that 63% of participants were able to correctly identify the ‘Forums’ icon as the place to go for advice from other community members. While 63% is a good result, it does indicate some room for improvement and the possibility that the ‘Forums’ icon might not be resonating with users as well as it could be. For the remaining participants, 10% clicked on ‘Where to?’ which prompts the user to search for specific locations while 7% clicked on the more general search option that would allow them to search all the content on the app.

63% of participants were able to correctly identify the ‘Holiday Rentals’ icon on the TripAdvisor app when looking for a privately owned apartment rather than a hotel to enjoy Paris from, while 20% of participants appear to have been tripped up by the ‘Hotel’ icon itself.

With 1 in 5 people in this study potentially not being able to distinguish between or determine the meaning behind each of the 2 icons, this is something that might merit further exploration. In another one of the TripAdvisor app’s tasks in this study, 43% of participants were unable to correctly identify the ‘Things To Do’ icon as a place to find inspiration for activities.

Where to from here?

If this were your project, you might look at running a quick study to see what people think each of the 6 icons represents. You could slip it into some existing moderated research you had planned or you might run a quick image card sort to see what your users would expect each icon to relate to. Running a study testing all 6 at the same time would allow you to gain insights into how users perceive the icons quickly and efficiently.

Overall, both of these apps tested very well in this study and with a few minor tweaks and iterations that are part of any design process, they could be even better!

Now that you’ve seen an example of mobile testing in Chalkmark, why not try it out for yourself with your app? It’s fast and easy to run and we have lots of great resources to help you on your way including sample studies that allow you to interactively explore both the participant’s and the researcher’s perspective.

Further reading

- Create and analyze a first-click test for free

- View a first-click test as a participant

- View first-click test results as a researcher

- Read our first-click testing 101 guide

- Read more case studies and research stories to see first-click testing in action

Originally published on 29 March 2019

Introducing Reframer v2 beta

Please note: This tool is a work in progress and isn’t yet available to all Optimal Workshop customers. If you’d like to opt in to the beta, pop down to the bottom of this article to find out more.

The ability to collect robust and trustworthy qualitative analysis is a must for any team who conducts user research. But so often, the journey to getting those juicy insights is time-consuming and messy. With so many artefacts – usually spread across multiple platforms and mediums – trying to unearth the insights you set out to get can feel overwhelming.

Since launching Reframer in 2019, we’ve had some great feedback from our users and the community. This feedback has led to the development of the beta version of Reframer v2 – in which we’ve expanded the note taking and tagging capabilities, as well as building a more powerful and flexible analysis functionality: affinity mapping.

What is Reframer v2 beta? 🤨

Simply put, Reframer v2 is a workflow that houses your data and insights all in one place. Yes, that’s right! No more context switching between various platforms or tabs. It’s an end-to-end qualitative analysis workflow that allows you to capture, code, group and visualize your data.

We’ve put a lot of focus into making sure that the analysis side of things is easy to learn and understand, regardless of your experience level. It’s also more flexible and better suited to qualitative research with data sets both big and small.

What’s the difference between Reframer and v2 beta? 😶🌫️

The main difference is the analysis workflow. Reframer’s tag-based theme builder has been replaced with an affinity map-based workflow in v2 beta.

The rest of the workflow remains mostly the same, though there are a couple of key differences.

User interface and set up 📲

While the activities within the set up and capture phase remain the same, we’ve updated the user interface to better reflect the qualitative research workflow.

All set up related actions (study overview, tasks, tags, segments, and study members) now live together under one tab – ‘Setup’.

You’ll find your sessions and all the observation data you’ve collected in the ‘Sessions’ tab.

Guest notetakers ✍️

For now, we’ve disabled the ability to invite guest notetakers who don’t hold an Optimal Workshop license. That’s not to say this won’t be reintroduced at some stage in the future, though. And of course, your team members who do have a license will be able to collaborate, take notes and analyze data.

Say hello to affinity mapping 📍🗺️

The biggest (and the best) difference between Reframer and v2 beta is the analysis workflow. In Reframer, themes are created by combining filters and tags. In Reframer v2 beta, themes are created by grouping observations in the affinity map.

Affinity mapping is a flexible and visual way to quickly group, organize and make sense of qualitative data. It’s a popular method amongst research practitioners of all experience levels, though it’s usually conducted in a standalone tool outside of where the raw data is captured, organized, tagged and stored.

Reframer v2 beta makes affinity mapping more powerful and user-friendly than ever – giving you the ability to search and filter your data, and have your observations, tags, and themes all connected and stored in one place.

What exactly does ‘beta’ mean in this case? 🙂⃤

It means that Reframer v2 is still very much a work in progress and isn’t yet available to all Optimal Workshop users. We’re continuing to develop new functionality that will complete the qualitative data analysis workflow and, if you’re part of the beta, you can expect to see new features and changes being rolled out in the coming months.

There may be a few bugs along the way, and we know the current name doesn’t exactly roll off the tongue, so stand by for a rebrand of the tool name once it’s ready for general consumption!

We need your help! 🆘

Want to help us make Reframer v2 beta really, really great? We’d love that. We here at Optimal Workshop rely on your thoughts, opinions and feedback to build and update our tools so they benefit those who matter most: you.

If you’d like to opt into the beta, sign up here.

And if you’d like to get down into the nitty gritty about the what, why and how of Reframer v2 beta, check out our Help Center articles here.

7 common mistakes when doing user research interviews

Want to do great user research? Maybe you already have tonnes of quantitative research done through testing, surveys and checking. Data galore! Now you really want to get under the skin of your users and understand the why behind their decisions. Getting human-centric with products can mean creating better performing, stronger and more intuitive products that provide an awesome user experience (UX). An in-depth understanding of your users and how they tick can mean the difference between designing products that just work and products that intuitively speak your users’ language, make them happy and engaged, and keep them coming back.

This is where qualitative research comes into play. Understanding how your users tick becomes clearer through user interviews. Interviewing users will provide human insights that make all the difference, the nuance that pulls your product or interface out of the fray and into the light.

How do you interview confidently? Whether this is your first foray into the world of user interviewing or you want to step up your game, there are a few common pitfalls along the way. We cover 7 of the most common mistakes, and how to avoid them, on your way to interview greatness!

How do you conduct a user research interview?

There are several ways of doing qualitative user research. Here we will talk about in-person user interviews. Great user interviewing is a skill in itself, and it relies on great prep, quality participants and excellent analysis of the results. But don’t be put off: all of this can be learned, and with the right environment and tools it can be simple to implement. Want to find out more in detail about how to conduct an interview? Take a look here.

Even old hands aren’t all gifted interviewing experts, and it’s okay if you lack expertise. In fact, totally nailing interview technique is almost impossible thanks to a ton of different factors. It’s your job to keep what you can under control and record the interview well in the moment for later analysis, keeping safe all those lovely human-centric insights you unearth.

Here are seven practical user research interview mistakes you could be making, and how to fix them:

1. Not having enough participants

It can be intimidating doing any sort of user research, particularly when you need to find participants. You want a random selection, not just those down the hall in the next office (though sometimes they can be great), and a pool of participants large enough to make the data meaningful and the insights impactful.

Not to worry, there are ways to find a giant pool of reliable interview participants. Either dive into existing users that you are familiar with (and they with you), or get in touch with us to recruit a small or large sample of participants.

2. Not knowing enough about your interview participants

Interviews are two-way streets, so if you’re hoping to encourage anyone to be open and honest in an interview setting you’ll need to do your homework on the person you’re interviewing. This may not always be applicable if you’re looking for a truly random sample of people. Understanding a little more about your participants should help the conversation flow, and when you do go off-script, it is natural and curiosity driven.

3. Not creating an open interview environment

Everything about your user interview environment affects the outcome of the interview. Your participants need to feel confident and comfortable. The space needs to remove as many distractions as possible. A comfortable workstation, a laptop that works, and even the air conditioning at a good temperature can all play a part in providing a relaxed environment, so that when it comes to the interview they are able to demonstrate and explain their behaviour or decisions on their own terms.

Of course, in this modern day, the availability of remote and virtual interviewing has changed the game slightly. Allowing your participants to be in their own environment can be beneficial. Be careful to take note of what you can see about their space. Is it crowded, dim, busy or noisy? If you don’t have full control over the environment be sure to note this in a factual way.

4. Not having a note-taker in the room

Good note-taking is a skill in its own right and it’s important to have someone skilled at it. Bringing a dedicated note-taker into the user interviews also frees you up to focus on your participant and your interviewing, allowing the conversation to flow and leaving the note-taker to focus on marking down all of the relevant points of interest.

5. Using a bad recording setup

Deciding to audio (and/or video) record the interview is a great option. When choosing this option, recording can be possibly the most important aspect of the interview setup process. Being able to focus on the interview without worrying about your recording equipment is key. Make sure that your recording equipment is high quality and in a central position to pick up everything you discuss - don’t trip at the first hurdle and be left with unusable data.

A dedicated note-taker can still be of value in the room: they can monitor the recording and note any environmental or contextual elements of the interview process, taking the stress of the recording setup and any adjustments off you.

Another option is Reframer. It’s a great recording tool that can free you up to focus on your participant and the interview. Reframer will audio record your interview, auto time-stamp and provide a standardized format for recording all of your interviews. Post analysis becomes simple and quick, and sharing the data and insights is even quicker.

6. Not taking the time to prepare your interview questions

Lack of preparation can be a fatal error for any user research, and user interviews are no different. Interviews are a qualitative research method, so your questions don’t need to be as strict as those in a quantitative questionnaire, for example. However, you will still need a standardised script to regulate your user interviews and make sure all of your participants are asked the same set of questions. Always leave plenty of room to go off script and get under the skin of why your participant interacts with your product in a particular way!

7. Not having a plan of action for organizing your data

Qualitative data is unstructured, which can make it hard to organize and analyze. Recording and storing all of your interviews on one platform makes them easier to review, because you can analyze the insights and conclusions together. Reframer can do all of this in one place, giving all of your organizational stakeholders access to the data.

Don’t miss anything in your interviews. You put the time, the effort and the investment into doing them, so make sure that they are recorded, available and analyzed in one place for the team to see, use and report against.

Wrap Up

User interviews can be intimidating. Organising them, prepping for them and even finding your participants can be hard. But user interviews needn’t be too much of a headache. With the Optimal Workshop platform, we take the pain out of the process with participant selection, recording, analyzing and reporting.

If you want a single platform to record, analyze and store your data, take a look at Optimal Workshop and Reframer. And get interviewing!

How to benchmark your information architecture

As an information architect, I’ve worked on loads of website redesigns. Interestingly, every single one of these projects has come about because the website has had navigation problems. But before you go ahead and change up your information architecture (IA), how do you figure out whether the new navigation is any better than the existing one? How do you know if it’s worth the hours of design to implement it?

In this article, I’ll walk you through how to benchmark a site navigation using tree testing.

The initial groundwork

When you start any project, you need to identify success metrics. How would the project sponsor (or your client if you’re in an agency) consider the project to be a success? What KPIs determine how the project is doing?

Put your stake in the ground, draw a line in the sand — or whatever metaphor you wish to use. This is how you objectively determine how far you’ve gone from where you started. At the same time, benchmarking is the perfect exercise to figure out the areas that need improvement. To do this, you’ll need to lay down the groundwork.

If you’re benchmarking your IA as part of a web redesign project, great! Hopefully, that means you’ve already gone through the exercise of determining who your users are and what it is that your users would be doing on the site. If not, it’s time to find out. User research is a crucial part of benchmarking. If you don’t know who your users are and why they’re on your site, how can you improve it?

Of course, everyone has a different approach to benchmarking information architecture. Different navigation problems merit different solutions. This is one that I’ve talked myself into for a global navigation project and it’s worked out for me. If you have a different approach, please share! I’m always open to new processes.

Without further preamble, here’s the quick rundown of my approach to assessing and benchmarking a site navigation:

- Conduct user research with the end goal to identify target users and user intent

- From user research, determine at most 8-10 primary user tasks to test with the identified target users

- Tree test the existing navigation with target users - using those user tasks

- Tree test a competitor navigation with target users - using the same user tasks

- Tree test a proposed navigation - using the same user tasks.

Step 1: Know who your users are

If it’s a new project, who is your target audience? Set up some kind of intercept or survey to find out who your users are. Find out what kind of people are coming to your site. Are they shopping for new cars or used cars? Are they patients or healthcare providers? If patients, then what kind of patients? Chronic care, acute care? If the project timeline doesn’t allow for this, discuss this with your project stakeholders. Ideally, they should have some idea of who their target audience is and you should at least be able to create proto user segments to direct your discovery.

If you have more than one user group, it’s best to identify the primary user group to focus efforts. If you have to design for everyone, you end up getting nowhere, satisfying no one. And guess what? This is not a novel idea.

“When you design for everyone, you design for no one.” — @aarron http://t.co/JIJ2c82d Ethan Marcotte (@beep) May 24, 2012

Your project stakeholder won’t like to hear this, but I would start with one user group and then iterate with additional user groups. Focus your efforts with one user group and do a good job. Then rinse and repeat with the remaining user groups. Your job is not done.

Determine what your users do

Interview or survey a couple of people who use your website and find out what they are doing on your site. What are they trying to do? Are they trying to find out information about services you provide? Are they trying to purchase things from your online store? How did they get there? Why did they choose your site over another website?

Identify priority user tasks

From your user interviews, can you identify 8-10 priority user tasks? These are the tasks you will use in a navigation test. What are the main reasons why users would be on your site? How would the navigation best serve them? If your navigation says nothing about your users’ tasks, then you have your work cut out for you.

Step 2: Tree test your existing navigation

How would you benchmark without some metrics? There are two kinds of metrics that we could collect: quantitative and qualitative. For quantitative, I’m assuming that you have some kind of analytics running on your site as well as event tracking. Track which navigation links are getting the most interaction. Be sure to use event tracking on primary, utility, and footer links alike, and name the events accordingly. Try to determine which links get the most interaction, on which pages, and follow where the users tend to end up.
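As a rough illustration of that quantitative pass, here is a minimal Python sketch that tallies navigation clicks from exported event data. The event rows, the group names and the `tally_nav_clicks` helper are all hypothetical; your analytics tool will have its own export format.

```python
from collections import Counter

# Hypothetical exported event rows: (nav_group, link_label, page_path).
events = [
    ("primary", "Services", "/"),
    ("primary", "Services", "/about"),
    ("footer", "Contact", "/"),
    ("utility", "Login", "/"),
    ("primary", "Pricing", "/"),
]


def tally_nav_clicks(rows):
    """Count clicks per (nav_group, link_label) pair."""
    return Counter((group, label) for group, label, _page in rows)


clicks = tally_nav_clicks(events)

# List links from most to least clicked.
for (group, label), count in clicks.most_common():
    print(f"{group:8} {label:10} {count}")
```

Naming each event with its navigation group up front is what makes this kind of grouping straightforward later.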

Of course, with quantitative data, you don’t have a really good understanding of the reasons behind user behavior. You can make assumptions, but those won’t get you very far. To get this kind of knowledge, you’ll need some qualitative data in the form of tree testing, also known as navigation testing.

I’ve only used Optimal Workshop’s tree testing tool, Treejack, so I can’t speak to the process with other services (I imagine that it would be similar). Here are the general steps; you can find a more detailed process in this Tree Testing 101 guide.

Create/import a sitemap for your existing site navigation.

For my recent project, I focused benchmarking on the primary navigation. Don’t combine different types of navigation testing in one — you can do that in a usability test. Here, we’ll just be testing the primary navigation. Search and utility links are secondary, so save those for another time.

Set up user tasks and questions.

Take the user tasks you’ve identified earlier and enter them into a tree test. From this point on, go with best practices when setting up your tree test.

- Limit to 8-10 tasks so that you don’t overwhelm your participants. Aim to keep your tree test to 15 minutes long or less so your participants don’t get exhausted, either.

- Prepare pre-study questions — These are a good way to gather data about your participants, reconfirming their priority and validating any assumptions you have about this user group.

- Prepare post-task questions — Use confidence and free-form feedback questions to feel out how confident the user is in completing each task.

For more tips on setting up your tree test, check out this Knowledge Base article.

Run your tree test!

- Do a dry run with someone who is not on your team so you can see if it makes sense.

- Do a moderated version with a test participant using screen-sharing. The test participant can think aloud, which gives you more insight into the findability of each task. Keep in mind that moderated sessions tend to run longer than unmoderated sessions, so your timing metrics will not be directly comparable.

- Execute, implement, and run!

Analyze your tree test results

Once you’ve finished testing, it’s time to look for patterns. Set up the baseline metrics using success rate, time spent, patterns in the pietrees — this is the fun stuff!

Focus on the tasks that did not fare as well, particularly the ones that had an overall score of 7 or below. This is an easy indicator that you should pay more attention to the labeling or even the choice you indicated as the correct answer.
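If you export your results, a baseline can be summarised in a few lines. The Python sketch below is only an illustration: the result rows, the task names and the `baseline` helper are hypothetical, and the overall scores are assumed to be copied from the tool's report rather than recalculated.

```python
from statistics import median

# Hypothetical per-participant results: (task, reached_correct_answer, seconds).
results = [
    ("Find opening hours", True, 12.0),
    ("Find opening hours", True, 18.0),
    ("Find opening hours", False, 40.0),
    ("Book an appointment", False, 35.0),
    ("Book an appointment", True, 25.0),
    ("Book an appointment", False, 50.0),
]

# Hypothetical overall scores (out of 10), copied from the tool's report.
overall_scores = {"Find opening hours": 8, "Book an appointment": 5}


def baseline(rows):
    """Return success rate and median time per task."""
    by_task = {}
    for task, success, seconds in rows:
        by_task.setdefault(task, []).append((success, seconds))
    summary = {}
    for task, attempts in by_task.items():
        successes = sum(1 for ok, _ in attempts if ok)
        summary[task] = {
            "success_rate": successes / len(attempts),
            "median_seconds": median(s for _, s in attempts),
        }
    return summary


summary = baseline(results)

# Flag tasks scoring 7 or below for a closer look.
needs_attention = [task for task, score in overall_scores.items() if score <= 7]
```

Keeping these numbers per task, rather than as one site-wide average, makes it easier to see which labels need work.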

What’s next?

From here, you can set up the same tree test using a competitor’s site tree and the same user tasks. This is helpful to test whether a competitor’s navigation structure is an improvement over your existing one. It also helps with discussions where a stakeholder is particularly married to a certain navigation scheme and you’re asked to answer: which one is better? Having the results from this test helps you answer the question objectively.

- here are the reasons why a user is on your site

- here is what they’re trying to do

- here is what happens when they try to find this on your site

- here is what happens when they try to find the same thing on your competitor’s

When you have a proposed sitemap, test it with the same tasks and compare the results against your baseline to see whether the changes you made actually improved findability. You can also repeat this test over time to track how the navigation is performing.
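One simple way to line up two tree tests is to look at the change in success rate per task. This Python sketch assumes you already have a success rate for each task from both tests; the figures and the `compare` helper are made up for illustration.

```python
# Hypothetical success rates per task (0.0 to 1.0) from two tree tests.
existing = {"Find opening hours": 0.60, "Book an appointment": 0.35}
proposed = {"Find opening hours": 0.75, "Book an appointment": 0.30}


def compare(before, after):
    """Return the change in success rate, in percentage points, per shared task."""
    return {
        task: round((after[task] - before[task]) * 100, 1)
        for task in before
        if task in after
    }


deltas = compare(existing, proposed)

for task, change in deltas.items():
    print(f"{task}: {change:+.1f} points")
```

With a small number of participants, a difference of a few points may just be noise, so treat small changes with caution.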

A few more things to note

You could daisy-chain one tree test after another to test an existing nav and a competitor’s. Just keep in mind that you may need to limit the number of user tasks per tree test so that you don’t overwhelm the participant.

Further reading

- "How to get the most out of tree testing" - This presentation from Steve Byrne contains some key things you need to keep in mind when planning and running a tree test.

- "Tree testing in the design process - Part 1: The research phase" - This blog by Dave O'Brien discusses how tree testing fits in when you're designing a website.

- "Treejack takes a trip with American Airlines" - This article shows how Treejack is used in a real-life scenario.
