Optimal Blog

Articles and Podcasts on Customer Service, AI and Automation, Product, and more

In our Value of UX Research report, nearly 70% of participants identified analysis and synthesis as the area where AI could make the biggest impact.

At Optimal, we're all about cutting the busywork so you can spend more time on meaningful insights and action. That’s why we’ve built automated Insights, powered by AI, to instantly surface key themes from your survey responses.

No extra tools. No manual review. Just faster insights to help you make quicker, data-backed decisions.

What You’ll Get with Automated Insights

- Instant insight discovery

Spot patterns instantly across hundreds of responses without reading every single one. Get insights served up with zero manual digging or theme-hunting.

- Insights grounded in real participant responses

We show the numbers behind every key takeaway, including percentage and participant count, so you know exactly what’s driving each insight. And when participants say it best, we pull out their quotes to bring the insights to life.

- Zoom in for full context

Want to know more? Easily drill down to the exact participants behind each insight for open text responses, so you can verify findings, understand nuances, and make informed decisions with confidence.

- Segment-specific insights

Apply any segment to your data and instantly uncover what matters most to that group. Whether you’re exploring by persona, demographic, or behavior, the themes adapt accordingly.

- Available across the board

From survey questions to pre-study, post-study, and post-task questions, you’ll automatically get Insights across all question types, including open text, matrix, ranking, and more.

Automate the Busywork, Focus on the Breakthroughs

Automated Insights are just one part of our ever-growing AI toolkit at Optimal. We're making it easier (and faster) to go from raw data to real impact. Take our AI Simplify tool, which helps you write better survey questions, effortlessly: our AI assistant suggests clearer, more effective wording to help you engage participants and get higher-quality data.

Ready to level up your UX research? Log into your account to get started with these newest capabilities or sign up for a free trial to experience them for yourself.


Understanding top tasks

All the way back in 2014, the web passed a pretty significant milestone: 1 billion websites. Of course, fewer than 200 million of these are actually active as of 2019, but there’s an important underlying point. People love to create. If the current digital age that we live in has taught us anything, it’s that it’s never been easier to get information and ideas out into the world.

Understandably, this ability has been used – and often misused. Overloaded, convoluted websites are par for the course, with a common tactic for website renewal being to simply update them with a new coat of paint while ignoring the swirling pile of outdated and poorly organized content below.

So what are you supposed to do when trying to address this problem on your own website or digital project? Well, there’s a fairly robust technique called top tasks management. Here, we’ll go over exactly what it is and how you can use it.

Getting to grips with top tasks

Ideally, all websites would be given regular, comprehensive reviews. Old content could be revisited and analyzed to see whether it’s still actually serving a purpose. If not, it could be reworked or just removed entirely. Based on research, content creators could add new content to address user needs. Of course, this is just the ideal. The reality is that there’s never really enough time or resources to manage the growing mass of digital content in this way. The solution is to home in on what your users actually use your website for and tailor the experience accordingly by looking at top tasks.

What are top tasks? They're basically a small set of tasks (typically around 5, but up to 10 is OK too) that are most important to your users. The thinking goes that if you get these core tasks right, your website will be serving the majority of your users and you’ll be more likely to retain them. Ignore top tasks (and any sort of task analysis), and you’ll likely find users leaving your website to find something else that better fits their needs.

The counter to top tasks is tiny tasks. These are everything on a website that’s not all that important for the people actually using it. Commonly, tiny tasks are driven more by the organization’s needs than those of the users. Typically, the more important a task is to a user, the less information there is to support it. On the other hand, the less important a task is to a user, the more information there is. Tiny tasks stem very much from ‘organization first’ thinking, wherein user needs are placed lower on the list of considerations.

According to Gerry McGovern (who penned an excellent write-up of top tasks on A List Apart), the top tasks model says: “Focus on what really matters (the top tasks) and defocus on what matters less (the tiny tasks).”

How to identify top tasks

Figuring out your top tasks is an important step in clearing away the fog and identifying what actually matters to your users. We’ll call this stage of the process task discovery, and these are the steps:

- Gather: Work with your organization to gather a list of all customer tasks

- Refine: Take this list of tasks to a smaller group of stakeholders and work it down into a shortlist

- User feedback: Go out to your users and get a representative sample to vote on them

- Finalize: Assemble a table of tasks ranked by votes, with the most-voted task at the top and the least-voted task at the bottom

We’ll go into detail on each of the above steps, explaining the best way to handle each one. Keep in mind that this process isn’t something you’ll be able to complete in a week – it’s more likely a 6- to 8-week project, depending on the size of your website, how large your user base is, and how willing your organization is to help out.

Step 1: Gather – Figure out the long list of tasks

The first part of the task process is to get out into the wider organization and discover what your users are actually trying to accomplish on your website or by using your products. It’s all about getting into the minds of your users – trying to see the world through their eyes, effectively.

If you’re struggling to think of places where you might find customer tasks, here are some of the best sources:

- Analytics: Take a deep dive into the analytics of your website or product to find out how people are using them. For websites, you’ll want to look at pages with high traffic and common downloads or interactions. The same applies to products – although the data you have access to will depend on the analytics systems in place.

- Customer support teams: Your own internal support teams can be a great source of user tasks. Support teams commonly spend all day speaking to users, and as a result, are able to build up a cohesive understanding of the types of tasks users commonly attempt.

- Sales teams: Similarly, sales teams are another good source of task data. Sales teams typically deal with people before they become your users, but a part of their job is to understand the problems they’re trying to solve and how your website or product can help.

- Direct customer feedback: Check for surveys your organization has run in the past to see whether any task data already exists.

- Social media: Head to Twitter, Facebook and LinkedIn to see what people are talking about with regard to your industry. What tasks are being mentioned?

It’s important to note that you need to cast a wide net when gathering task data. You can’t just rely on analytics data. Why? Well, downloads and page visits only reflect the content you already have, not what your users might actually be looking for.

As for site search, Gerry McGovern explains why it doesn’t tell the entire story either: “When we worked on the BBC intranet, we found they had a feature called ‘Top Searches’ on their homepage. The problem was that once they published the top searches list, these terms no longer needed to be searched for, so in time a new list of top searches emerged! Similarly, top tasks tend to get bookmarked, so they don’t show up as much in search. And the better the navigation, the more likely the site search is to reflect tiny tasks.”

At the end of the initial task-gathering stage you should be left with around 300 to 500 tasks. Of course, this can scale up or down depending on the size of the website or product.

Step 2: Refine – Create your shortlist

Now that you’ve got your long list of tasks, it’s time to trim them back until you’ve got a shortlist of 100 or fewer. Keep in mind that working through your long list of tasks is going to take some time, so plan for this process to take at least 4 weeks (but likely more).

It’s important to involve stakeholders from across the organization during the shortlist process. Bring in people from support, sales, product, marketing and leadership areas of the organization. In addition to helping you to create a more concise and usable list, the shortlist process helps your stakeholders to think about areas of overlap and where they may need to work together.

When working your list down to something more usable, try to consolidate and simplify. Stay away from product names as well as internal organization and industry jargon. With your tasks, you essentially want to focus on the underlying thing that a user is trying to do. If you were focusing on tasks for a bank, opt for “Transactions” instead of “Digital mobile payments”. Similarly, bring together tasks where possible. “Customer support”, “Help and support” and “Support center” can all be merged.

At a more granular level, it also helps to avoid lengthy tasks. Stick to around 7 to 8 words and try to avoid verbs, using them only when there’s really no other option. You’ll find that your task list becomes quite hard to navigate when tasks begin with “look”, “find” and “get”. Finally, stay away from specific audiences and demographics. You want to keep your tasks universal.
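As a rough illustration of the consolidation and wording rules above, here is a minimal Python sketch that merges known duplicate labels and flags tasks that run past 8 words or open with “look”, “find” or “get”. The merge map `MERGE_INTO`, the helper names `consolidate` and `lint`, and the exact word limit are assumptions for illustration only; in a real project the merges come out of your stakeholder discussions, not a lookup table.

```python
import re

# Hypothetical merge map: lowercased label variants -> the single task they become.
MERGE_INTO = {
    "customer support": "Customer support",
    "help and support": "Customer support",
    "support center": "Customer support",
}

# Leading verbs the guidance suggests avoiding, and an assumed word limit.
VERB_PREFIXES = {"look", "find", "get"}
MAX_WORDS = 8


def normalize(label: str) -> str:
    """Lowercase a label and collapse runs of whitespace for comparison."""
    return re.sub(r"\s+", " ", label).strip().lower()


def consolidate(labels: list[str]) -> list[str]:
    """Merge known variants and drop duplicates, keeping first-seen order."""
    merged: dict[str, str] = {}
    for label in labels:
        canonical = MERGE_INTO.get(normalize(label), label.strip())
        merged.setdefault(normalize(canonical), canonical)
    return list(merged.values())


def lint(label: str) -> list[str]:
    """Return warnings for a single task label (empty list if it looks fine)."""
    words = normalize(label).split()
    warnings = []
    if len(words) > MAX_WORDS:
        warnings.append(f"too long ({len(words)} words)")
    if words and words[0] in VERB_PREFIXES:
        warnings.append(f"starts with verb '{words[0]}'")
    return warnings


shortlist = consolidate(
    ["Customer support", "Help and support", "Support center", "Find a branch near me"]
)
for task in shortlist:
    print(task, lint(task))
```

The three support labels collapse into one task, while “Find a branch near me” survives but gets flagged, prompting a rewrite such as “Branch locations”.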

Step 3: User feedback – Get users to vote

With your shortlist created, it’s time to take it to your users. Using a survey tool like Questions, add each of your shortlisted tasks and have users pick the 5 tasks that matter most to them, ranking them on a scale from 1 to 5, with 5 being the most important and 1 being the least important.
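If each participant scores their chosen tasks from 1 to 5, one simple way to turn the responses into a ranking is to sum the scores per task. The sketch below assumes that scoring scheme and a hypothetical response format (one dictionary of task to score per participant); your survey tool may export data differently, and other weighting schemes are possible.

```python
from collections import Counter


def tally(responses: list[dict[str, int]]) -> list[tuple[str, int]]:
    """Sum each task's 1-5 scores across participants and rank highest first."""
    totals = Counter()
    for response in responses:
        if len(response) > 5:
            raise ValueError("each participant should score at most 5 tasks")
        for task, score in response.items():
            if not 1 <= score <= 5:
                raise ValueError(f"score for {task!r} must be between 1 and 5")
            totals[task] += score
    # Highest total first; ties broken alphabetically so the order is stable.
    return sorted(totals.items(), key=lambda item: (-item[1], item[0]))


# Two hypothetical participants, each scoring their top 5 tasks (5 = most important).
responses = [
    {"Transactions": 5, "Branch locations": 4, "Interest rates": 3,
     "Customer support": 2, "Opening hours": 1},
    {"Interest rates": 5, "Transactions": 4, "Customer support": 3,
     "Opening hours": 2, "Branch locations": 1},
]

for rank, (task, points) in enumerate(tally(responses), start=1):
    print(rank, task, points)
```

Summing scores rewards tasks that participants rank near the top; simply counting how often each task is selected is a plainer alternative if you don’t want to weight by rank.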

If you’re thinking that your users will never take the time to work through such a long list, consider that the very length of the list means they’ll seek out the tasks that matter to them and ignore the ones that don’t.

Step 4: Finalize – Analyze your results

Now for the task analysis side of the project. What you want from the user survey is a league table of your entire shortlist of tasks. We’re going to use the example from Cisco’s top tasks project, which Gerry McGovern (who actually ran the project) documented over at A List Apart. The entire article is worth a read, as it covers the process of running a top tasks project for a large organization.

Here’s what a league table of the top 20 tasks looks like from Cisco:

Here’s the breakdown of the vote for Cisco’s tasks:

- 3 tasks got the first 25 percent of the vote

- 6 tasks got 25-50 percent of the vote

- 14 tasks got 50-75 percent of the vote

- 44 tasks got 75-100 percent of the vote
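To produce this kind of breakdown from your own league table, you can walk down the tasks in vote order and keep a running total. This sketch assumes the table is a list of `(task, votes)` pairs sorted highest first, and it counts each task in the quarter where its share of the vote begins; other boundary rules are equally defensible and would shift tasks that straddle a 25 percent mark. The task names and vote counts are made up for illustration.

```python
def quarter_breakdown(league: list[tuple[str, int]]) -> list[int]:
    """Count how many tasks fall into each quarter of the total vote.

    `league` must be sorted by votes, highest first. Each task is counted in
    the quarter where its share of the vote begins.
    """
    total = sum(votes for _, votes in league)
    if total <= 0:
        raise ValueError("league table has no votes")
    counts = [0, 0, 0, 0]
    running = 0  # votes received by all higher-ranked tasks
    for _, votes in league:
        # Integer arithmetic avoids floating-point edge cases at 25/50/75%.
        quarter = min(4 * running // total, 3)
        counts[quarter] += 1
        running += votes
    return counts


# Hypothetical league table (task, votes), already sorted highest first.
league = [("Transactions", 30), ("Interest rates", 25), ("Branch locations", 20),
          ("Customer support", 10), ("Opening hours", 8), ("Careers", 7)]
print(quarter_breakdown(league))
```

On a real shortlist of around 100 tasks, this is where the long tail becomes visible: a handful of tasks in the first quarter and dozens in the last.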

While the pattern may seem surprising, it’s actually not unusual. As Gerry explains: “We have done this process over 400 times and the same patterns emerge every single time.”

Final thoughts

Top tasks management is really a practice that needs to be repeated on a semi-regular basis. The approach benefits organizations in a multitude of ways, bringing different teams and people together to figure out why your users are coming to your website and how best to give them what they actually need from you.

As we explained at the beginning of this article, top tasks is really about clearing away the fog and understanding what really matters. Instead of spreading yourself thin across a host of tiny tasks, home in on the top tasks that actually matter to your users.

Understanding how to improve your website

The top tasks approach is an effective way of giving you a clear idea of what you should be focusing on when designing or redesigning your website, but this should really just be one aspect of the work you do.

Utilizing a host of other UX research methods can give you a much more comprehensive idea of what’s working and what’s not. With card sorting, for example, you can learn how your users think the content on your website should be arranged. Then, with this data in hand, you can use tree testing to assemble draft structures of your website and test how people navigate their way through it. You can keep iterating on these structures to ensure you’ve created the most user-friendly navigation.

Take a look at the Intro to UX page to learn more about card sorting and tree testing, as well as the other user research methods you can use to make solid improvements to your website. If you’d rather just start putting methods into practice using user research tools, take our UX platform for a spin for free here.

Introducing our improved header information architecture

Note: We’re gradually rolling out this feature, so it may not appear for you straight away.

We’ve made a few changes to our navigation header in-app to make it easier and more intuitive to use. In this blog post, we’ll explore the changes you’re going to see, why we made these changes and take a look at some of the testing that took place behind the scenes.

Let’s dive in!

What’s changed?

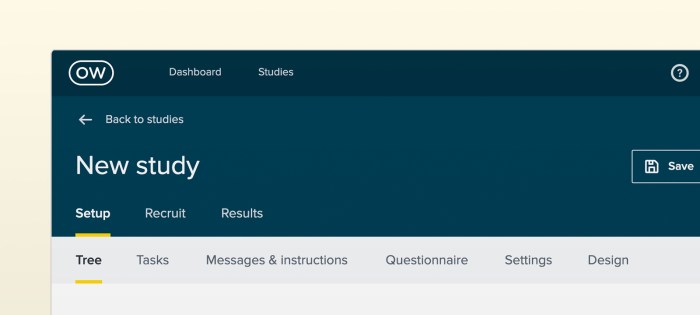

We’ve shuffled most tabs in the header around, but you’ll see the most obvious change when you create a study. You’ll now land directly on the ‘New Study’ screen, instead of being dropped in ‘Settings’ and then having to find your way to ‘Create Study’.

We’ve also made some changes to the ‘Create’, ‘Recruit’ and ‘Results’ tabs. These are now set in the header so you can easily navigate between creating, recruiting and reviewing results, instead of having to go back to ‘Settings’ to manage recruitment.

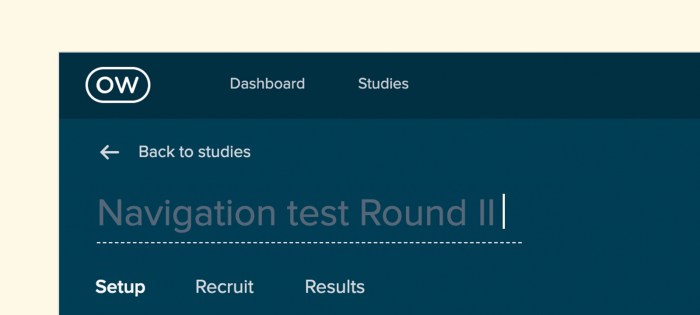

When you click ‘Back to studies’, you’ll now be taken back to the folder you’re currently working in. Previously you’d be taken back to the ‘All studies’ dashboard; now there’s no more scrolling through the dashboard to find the folder you’re looking for. You can also rename your study in the header now, instead of having to navigate back to ‘Setup’.

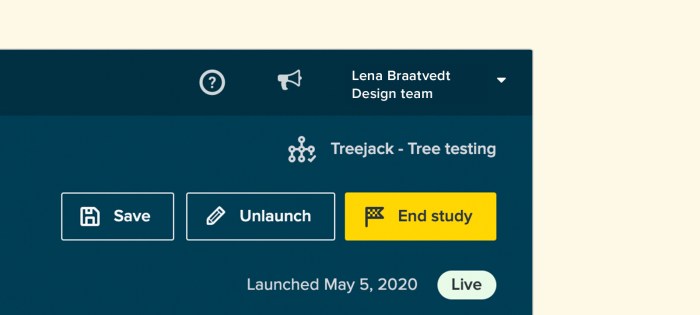

Finally, you can now end your study by clicking the ‘End study’ button in the header. We’d discovered that some people had trouble ending their studies in the past; now you can just click the button with the tiny checkered flag.

Why we made these changes

Our goal is to create the world’s best user research platform – and a big part of that is ensuring that our tools are easy to use. Through testing, we found that our old navigation header had usability, consistency and design issues. Specifically, usability testing and tree testing showed that simplifying our information architecture (IA) would drastically improve the user experience and flow.

We asked the question: “How might we improve the flow of our tool without affecting customers that currently use our tools?”

This led us down a research rabbit hole, but we came to the conclusion that we’d need to redesign the navigation header across all of our tools and adjust the IA to match the user journey. In other words, we needed to redesign the navigation to match how people were using our tools.

What we discovered during our user testing

User research and testing inform all of the changes that we make to our tools, and this project was no different.

We ran 3 tree tests using Treejack, testing 3 different IAs with 97 participants (about 30 per test). We also ran a first-click test using Chalkmark to validate what we were doing in Treejack.

The 3 IAs we tested were:

- The same as the prototype (Create, Design, Launch, Recruit, Results)

- Removing Launch and splitting up children branches (Create, Design, Recruit, Results)

- Moving Design back into Create (Create, Recruit, Results)

Note: One caveat of the results is that we used the phrase ‘Set up’ in many questions, which would bias the second test in favor of any question where ‘Setup’ is the correct answer.

We found that the third tree performed the best during our test. On average, it required a little more backtracking and was less direct than the first 2 tests, but it had a higher chance of completion simply because there were fewer options available.

Wrap up

So that’s our new in-app header navigation – we hope you like it. Got a question? Feedback? Click the yellow Intercom circle in the bottom right-hand corner to get in touch with our support team.

Happy testing!

Pinpointing the findability of topics on your website with tree testing

Think about the last time you were visiting a friend or family member’s house and needed to find something. Like a spoon, for example. You start in the kitchen because that’s where we’ve all been trained to find things like spoons. But where do you look next? Some may look in the drawer near the dishwasher, while others may look to the drawer that’s by the cabinet that holds dishes and bowls. Point is, people use context clues and other points of reference (like familiarity and habits) to find things that they’re looking for.

The same goes for navigating a webpage.

However, unlike the spoon example, there’s much more variation in website structure than kitchen layout. Websites vary from industry to industry, ranging in purpose from ecommerce to digital portfolios and everything in between. So when someone lands on your website to find something or complete a task, is it obvious where they should go to get things done?

Your gut might tell you that it is. The people on your design team might tell you that it is too. But what really matters is what happens with actual users. Whenever you’re building a new website or optimizing an existing one, it’s important that you build it with quality user insights. That starts with user experience (UX) research.

And before you ask if we mean qualitative or quantitative research – the answer is both. Let’s explore a particular user research method that’s essential for gathering the information needed to build intuitive site navigation. It’s called tree testing.

How tree testing evaluates findability of topics on a website

For anyone unfamiliar with tree testing (sometimes referred to as ‘reverse card sorting’ or ‘card-based classification’), it’s a series of tests that help you understand where and why people get lost in your content.

It works by taking a text-only version of your website’s structure and stripping away the influence of navigation aids and design elements. Participants are shown this text-only hierarchy of your website or app and are asked to indicate where they would find specific items or topics.

By removing everything but the labels and how they’re organized, you can work out just how effective your website structure is at enabling people to find what they need. If test participants consistently struggle to locate specific items, you’ll know there are issues with your structure. It can also help you visualize what paths participants take to get to specific site information.
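Tree testing tools typically report how many participants found the right place and how many got there without backtracking. The sketch below computes both for a single task using the spoon example from earlier. The `Attempt` structure, the idea of identifying each node by its full path from the top of the tree, and the rule that a direct path only ever moves one level deeper are all assumptions made for illustration; they are not how Treejack stores or calculates its results.

```python
from dataclasses import dataclass

# A node is identified by its full path from the top of the tree (an assumption).
Node = tuple[str, ...]


@dataclass
class Attempt:
    """One participant's attempt at one task (hypothetical data shape)."""
    steps: list[Node]  # every node the participant opened, in order
    answer: Node       # the node they finally chose


def is_direct(attempt: Attempt) -> bool:
    """True if each step went exactly one level deeper, i.e. no backtracking."""
    for prev, nxt in zip(attempt.steps, attempt.steps[1:]):
        if len(nxt) != len(prev) + 1 or nxt[: len(prev)] != prev:
            return False
    return True


def summarize(attempts: list[Attempt], correct: set[Node]) -> dict[str, float]:
    """Success and directness for one task, as fractions of all attempts."""
    if not attempts:
        raise ValueError("no attempts to summarize")
    n = len(attempts)
    successes = sum(a.answer in correct for a in attempts)
    direct = sum(is_direct(a) for a in attempts)
    return {"success": successes / n, "directness": direct / n}


# Assume the spoons live in the drawer by the dishwasher.
kitchen = ("Kitchen",)
dishwasher = ("Kitchen", "Drawer by dishwasher")
cabinet = ("Kitchen", "Drawer by cabinet")

attempts = [
    Attempt([kitchen, dishwasher], dishwasher),                    # direct success
    Attempt([kitchen, cabinet, kitchen, dishwasher], dishwasher),  # backtracked, success
    Attempt([kitchen, cabinet], cabinet),                          # direct, wrong drawer
]
print(summarize(attempts, correct={dishwasher}))
```

Looking at the two numbers together is what makes them useful: a high success rate with low directness suggests people eventually find the item but hesitate between branches along the way.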

Another point worth mentioning is that tree testing not only uncovers site navigation flaws, but it reveals word choice and microcopy that may not be clear to users. To reference back to the initial analogy, one person might say “where would I find your spoons,” while another may ask, “where do you keep your utensils?” So, tree testing can also be helpful for understanding which words work best in your site structure.

When should I conduct a tree test?

While it’s best to conduct a tree test early in the research phase of a project, there’s never a wrong time (unless that time is never). And if you have an existing website, this is a good time to establish a baseline for your existing site structure. The results you gather here can help to highlight any issues with your site’s structure and provide the data needed to compare against any improvements.

If you’re starting from scratch with a new website, you can run tree tests on different versions of your proposed site structure and then compare the results to determine which makes the most sense to your users.

How do I conduct a tree test?

Running a tree test is simple. You can use an online tool (like Treejack) to collect all of the necessary quantitative data you need to ensure successful site navigation.

But what about the qualitative side of it all? We’re so glad you asked.

How to add qualitative data to your tree test

Running tree tests is a great way to gather quantitative data about your site navigation and topic findability, but it's not so good at providing you with qualitative insights, or why people are looking for information the way that they are.

Traditionally, you could get your gift cards ready and set up an in-person study where you watch people perform the tasks laid out in your tree test, and subsequently ask why they searched for things the way they did. But that takes a lot of time, coordination, and compensation. Alternatively, you could set up a remote usability test using a platform designed to record users as they complete your tree test.

This way, you’ll get the quantitative results you need to make decisions, backed by qualitative insights from real people. You’ll be able to set up your test to get answers to questions like:

- “Why did people go to X category to find information on X?”

- “Why did it take so long to find X?”

- "Why did people take that path to find X?"

Combine quantitative and qualitative research solutions to paint the whole picture

Teams that solely rely on quantitative data are only getting half the story. By pairing tree testing tools, like Optimal Workshop’s Treejack, with UserTesting’s Human Insight Platform, researchers are able to not only see how people search for information on a website but get to the bottom of why. When used together, quantitative and qualitative data reveal the most valuable insights for tree testing.

5 common mistakes we have all made with screening questions

This is a guest post from our friends over at Askable. Check out their blog.

Writing screening questions is an everyday part of life as a UXer or researcher of any kind, really. And at first glance, they seem straightforward enough. Draft up some questions that help to either qualify or disqualify people from taking part in your research, whether that’s a survey, an interview or something in between.

At Askable, we have seen thousands and thousands of screening questions. Some horrible, some amazing, and everything in between.

So here we go – 5 of the most common mistakes made when writing screening questions – oh and how to avoid them.

- Using closed-ended questions too often

What’s the quickest way of knowing if someone went on a holiday in the last 6 months? You ask them, right? “Have you been on a holiday in the last 6 months – Yes or No?” Duh.

But actually, a question worded in this way is signposting the answer you’re looking for, which may lead to false answers! And also, the answer doesn’t give you any extra information about that person’s travel habits, etc.

So, perhaps a better way to ask the question would be: “When was the last time you went on a holiday?”, with multiple-choice answers. This also gives you added info, such as whether it was a month ago or 5 months ago.

- Using open-ended questions at the wrong time

Open-ended screening questions can be great, but only for certain info. Avoid using them when you have strict criteria; instead, use them to get inside your applicant’s head a bit more. An example would be to ask, as a follow-up to the example above, “Tell me about where you went on your last holiday.”

Open-ended questions are also fine when the answers could vary wildly. A good example is “What is your occupation?” There are simply way too many possible responses to offer as multiple choice.

- Using industry jargon

How many people in the general public know what EV stands for? It’s Electric Vehicle by the way.

Or how about the term ‘Financial Services’? Are we talking about a bank or payments company or an accountant?

Work off the lowest common denominator, assume the applicant doesn’t know anything about your industry. Because often, they don’t or they think of it differently to you. When we live and breathe a topic, it’s all too easy to forget that others do not.

- Too many screening questions

We often write too many screening questions for a number of reasons. Sometimes we do it because we forget that screening questions are just that – to screen. Not to survey! Don’t start adding questions in there that are actually part of your research.

Other times it can be because our criteria are just way too narrow. Whatever the reason, a good rule of thumb is to never have more than 15, and the fewer the better.

- Not trusting the majority

We have learned this time and time again at Askable – most people are good and honest! We even have a saying now for it – “default to honesty”.

Don’t get overly concerned that your screening questions give too much away. Of course, keep them vague, but don’t go crazy. In our experience, 99% of people won’t take advantage of you. So serve the 99 and not the 1.
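To make the tips on closed-ended questions and question count concrete, here is a minimal Python sketch of a screener built from multiple-choice questions, where the qualifying answers sit among neutral options rather than being signposted by a yes/no question. The `Question` structure and the helper names `check_screener` and `qualifies` are hypothetical, and the 15-question cap simply mirrors the rule of thumb above.

```python
from dataclasses import dataclass, field


@dataclass
class Question:
    """A multiple-choice screening question (hypothetical structure)."""
    text: str
    options: list[str]
    qualifying: set[str] = field(default_factory=set)  # empty = no criterion


def check_screener(questions: list[Question], max_questions: int = 15) -> None:
    """Raise ValueError if the screener breaks the rules of thumb above."""
    if len(questions) > max_questions:
        raise ValueError(f"{len(questions)} questions; aim for {max_questions} or fewer")
    for q in questions:
        unknown = q.qualifying - set(q.options)
        if unknown:
            raise ValueError(f"{q.text!r}: qualifying answers not among options: {unknown}")


def qualifies(questions: list[Question], answers: dict[str, str]) -> bool:
    """True if every question that has criteria received a qualifying answer."""
    return all(
        answers.get(q.text) in q.qualifying for q in questions if q.qualifying
    )


holiday = Question(
    text="When was the last time you went on a holiday?",
    options=["In the last month", "1 to 6 months ago", "7 to 12 months ago",
             "More than a year ago", "I have never been on a holiday"],
    qualifying={"In the last month", "1 to 6 months ago"},
)
check_screener([holiday])
print(qualifies([holiday], {holiday.text: "1 to 6 months ago"}))
print(qualifies([holiday], {holiday.text: "More than a year ago"}))
```

Because the applicant sees five plausible answers rather than a yes/no, there’s no obvious “right” choice to pick, and you still learn roughly when they last travelled.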

Wrap Up

Think about these next time you are writing up some screening questions, setting up your research or trying to figure out who it is you really want to talk with. Do this and you will be on your way to some seriously awesome and accurate insights!

5 reasons to consider unmoderated user testing

In-person user testing is an important part of any research project, helping you to see first-hand how your users interact with your prototypes and products – but what are you supposed to do when it’s not a viable option?

The recent outbreak of coronavirus is forcing user researchers and designers to rethink traditional testing approaches, especially in-person testing. So what’s the solution? Enter unmoderated user testing. This approach circumvents the need to meet your participants face-to-face as it’s done entirely over the internet. As you can probably guess, this also means there are a few considerable benefits.

Here, we'll take a look at 5 reasons to consider this testing approach. But first, let's explore what unmoderated user testing is.

What is unmoderated user testing?

In the most basic sense, unmoderated user testing removes the ‘moderated’ part of the equation. Instead of having a facilitator guide participants through the test, participants complete the testing activity by themselves and in their own time. For the most part, everything else stays the same.

The key differences are that:

- You’re unable to ask follow-up questions

- You can’t use low-fidelity prototypes

- You can’t support participants (beyond the initial instructions you send them).

However, there are a number of upsides to unmoderated user testing, which we’ll cover below.

1. You can access participants from all over the globe

There’s a good chance that your organization’s customers don’t exist solely in your city, or even just in your country, so why limit yourself to testing local individuals? Moderated user testing requires you to either bring in people who can visit your office or for you to actually travel to another physical location and host testing sessions there.

With unmoderated user testing, you can use a variety of participant recruitment services to access large groups of participants from all over the globe. Making these services even more useful is the fact that many allow you to recruit the exact participants you need. For example, drivers of Toyota hybrid vehicles who live in Calgary.

2. Unmoderated user testing is cheaper

Have a think for a moment about all of the typical costs that go along with a hosted user testing session. There’s the cost of a location if you’re traveling to another city, the remuneration for the people you’re testing and the cost of equipment (that you may not typically have access to). Sure, moderated testing can be made much more affordable if you’re hosting a session in your own office and you have all of the required gear, but that’s not the case for everyone doing research.

Unmoderated user testing really only requires you to choose the tool with which you want to run your user test (variable cost), set up your study and then send out the link to your participants.

3. It’s easier to manage

Unmoderated user testing means you can set aside the difficult task of managing participants in person, from scheduling through to finding notetakers and people to help you with the recording equipment. As we noted in the above section about cost, all you have to do is select the tool and then set up and run your study.

4. Automatic analysis

Running in-person, qualitative usability testing sessions can deliver some truly useful insights. There’s really nothing like sitting down in front of a participant and seeing how they interact with the product you’re working on, hearing their frustrations and learning about how they work. But any insights you gain from these sessions you’ll have to derive yourself. There’s no magic button that can generate useful qualitative analysis for you.

With unmoderated user testing, and especially with the right set of tools, you can run your tests and then have analysis generated automatically from your data. Take our IA tool Treejack as just one example. The functionality built into the tool means you can send out text-based versions of your website structure and then see how people make their way through the website to find what they’re looking for. At the end of your test, Treejack will present you with an array of useful, detailed visualizations like this one:

5. There’s less chance of bias

Ever heard of the observer effect? It’s a theory that basically states that the observation of a phenomenon will inevitably change that phenomenon, commonly due to the instruments used in the measurement. The observer effect and other biases often come into play during moderated research sessions specifically as a result of having a moderator in the room – typically with their own biases. Removing the moderator from the equation means you’ll get more reliable data from your study.

And the best place to get started?

Unmoderated user research requires unmoderated testing tools. With health concerns like coronavirus and influenza leading to reduced travel and in turn making in-person testing more difficult, there’s never been a better time to start using unmoderated testing tools. If you haven’t already, take our full set of 5 tools for a spin for free (no credit card required).

Say hello to our new look

Today we’re launching a new look for Optimal Workshop. While it’s a new direction for us visually, there’s also a little more to this story than meets the eye. This new identity represents a total rethink about how we present ourselves to the world, from our tools like OptimalSort and Reframer to the way we present updates just like this one.

This brand update was a 7-month effort from concept through to execution, involving talents from both inside and outside the walls of our Wellington Office. Here, we’ll share a little about what made us decide to make this change and where you’re going to see the biggest changes.

So, without further ado…

Why we made this change

- We’d experienced a lot of change and grown at a rapid pace – Our previous brand had grown organically for 12 years. But we’ve matured, and we need a brand identity that will continue to grow with us.

- We needed a brand that’s accessible to all – We place accessibility at the heart of our product decisions, and we wanted the same for our brand. Our previous design guidelines often made this a struggle.

- We needed a brand identity that clearly communicates what we do – Our brand needs to be clear, flexible and robust. A brand system that’s simple to configure means it’s easier to create new assets and ensures every bit of the design is usable, beautiful and communicates our value clearly.

What’s new

Here’s a quick round-up of some of the changes you can expect to see around the place – from the big things like our company logo right through to the smaller tweaks we’ve applied across the website.

Our tools

When you log back into your account, you’ll find that everything works exactly the way that it did before – we’ve just applied a fresh coat of paint. Of course, if you do happen to come across any bugs or quirks, get in touch with our support team by clicking the little blue chat icon in the bottom right corner of the screen.

Our logo

The most noticeable change is front and center with our logo. We’ve evolved it to outline what’s important, and we like to think that you can interpret it in a few different ways:

- A cycle of learning

- Circling the answer/highlighting the solution

- Putting “Optimal Workshop” at the centre

Our bold new logo

We’ve also designed our new logo to be quite flexible. We’re able to swap out the colors as well as the “O” to celebrate special occasions, people and events.

Our colors

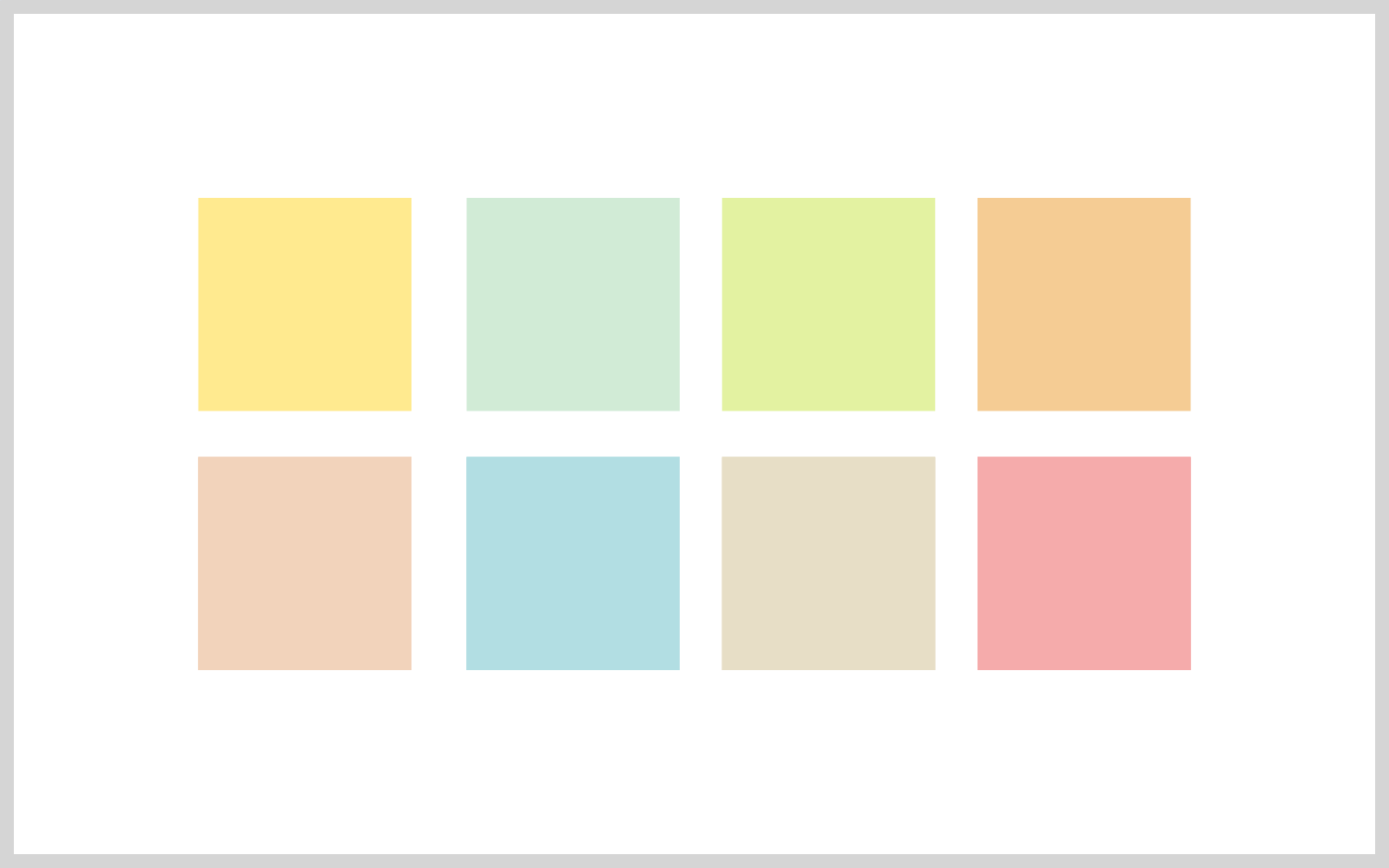

We’ve also refreshed our color palette. We still love green and blue, but we’ve introduced yellow as well as a few other options. Here are the highlights (no pun intended):

Our new brand color is yellow. The color of Post-it notes, smiley face emojis and bright ideas. We hope it represents things like warmth, approachability, insight, discovery and enlightenment.

Our new color palette ranges from yellow through to green and blue. We use tints of yellow to allow flexibility and accessibility when designing, while the dark navy is versatile and reliable. We place an emphasis on whites and grey tints for freshness and lightness.

We also use accent colors to add more variety and life. We’ll let you find these on your own as you experience the new palette on our website.


Our typeface

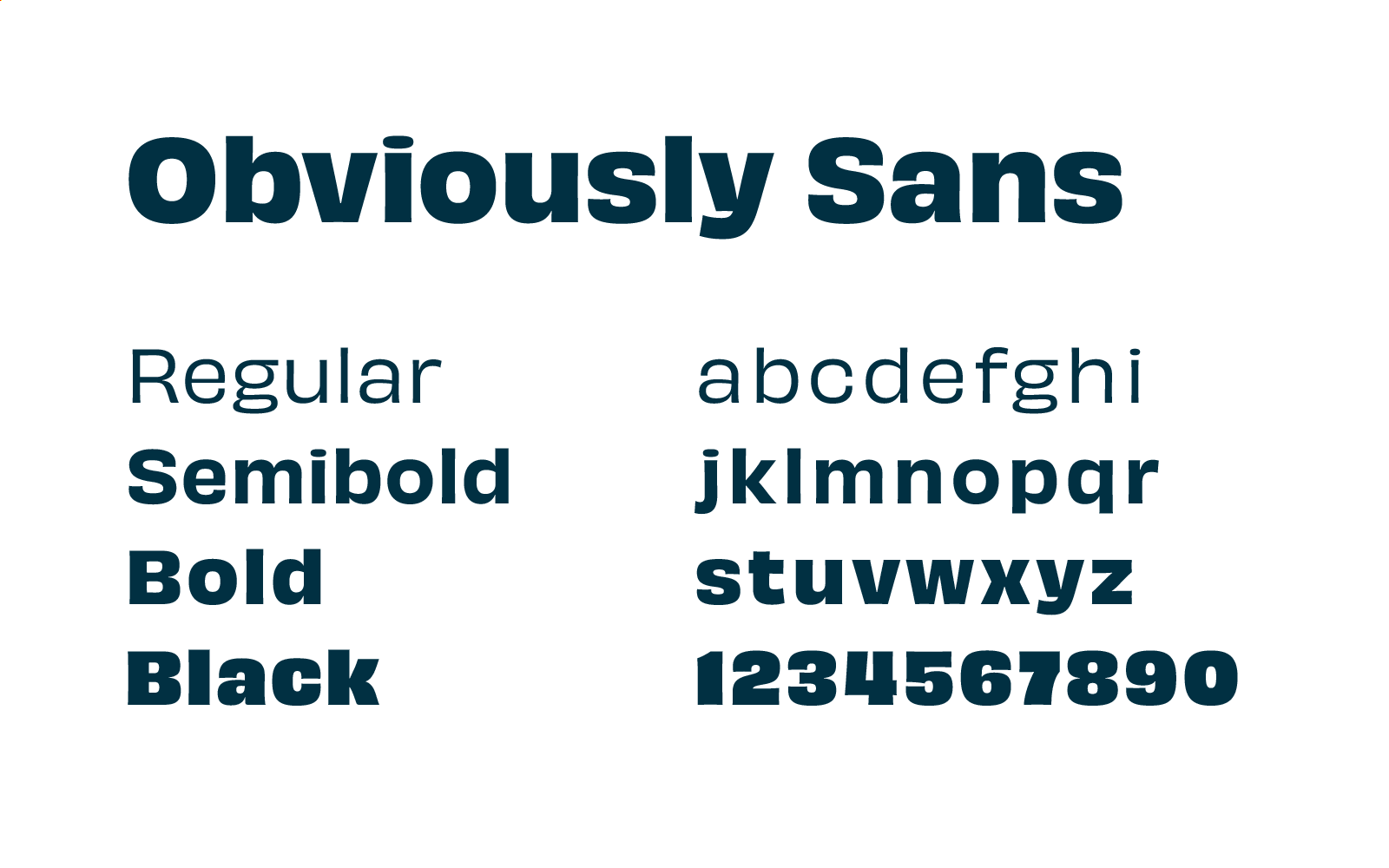

One of the biggest improvements we’ve made to the accessibility of the website comes in the form of our new typeface. Obviously Sans is our new heading typeface – it has a “steady” style, but it’s also quite interesting structurally.

You’ll see Obviously Sans throughout the website, app and blog. It’ll also make its way into our emails and other comms!

Highlighting what matters

The act of highlighting something is universally understood. We all use highlighters and we all know what it means when we highlight something. Highlighting to find patterns is what research is all about.

We often highlight to:

- Discover themes

- Uncover insights

- Simplify complexity

- Emphasize meaning

Here’s what the highlighting looks like in practice:

Wrap-up

So, that’s our brand refresh. We’re still the same Optimal Workshop, just with a new look that better represents who we are and what we do.

We’re trying to become an ever-more important part of the work that user experience professionals carry out every day. Whether that means hosting user interviews, validating their ideas, testing and probing their information architectures or running surveys, we want to be a part of it.

We believe in better experiences for all and putting people at the heart of decisions. The team at Optimal Workshop genuinely enjoy working together to build something that will leave the world in a better state than we found it.

Join us on the journey – there’s more to come.
