Summary: User researcher Ashlea McKay runs through some of her top tips for carrying out advanced analysis in tree testing tool Treejack.

Tree testing your information architecture (IA) with Treejack is a fantastic way to find out how easy it is for people to find information on your website and pinpoint exactly where they’re getting lost. A quick glance at the results visualization features within the tool will give you an excellent starting point. However, your Treejack data holds a much deeper story that you may not be aware of or may be having trouble pinning down. It’s great to be able to identify a sticking point that’s holding your IA back, but you also want to see where it fits into the rest of the story: not just where people are getting lost in the woods, but why.

Thankfully, this is something that is super quick and easy to find — you just have to know where to look. To help you gain a fuller picture of your tree testing data, I’ve pulled together this handy guide of my top tips for running advanced analysis in Treejack.

Setting yourself up for success in the Participants tab

Treejack results are exciting and it can be all too easy to breeze past the Participants tab to get to those juicy insights as quickly as possible, but stopping for a moment to take a look is worth it. You need to ensure that everyone who has been included in your study results belongs there. Take some time to flick through each participant one by one and see if there’s anyone you’d need to exclude.

Keep an eye out for any of the following potential red flags:

- People who skipped all or most of their tasks directly: their individual tasks will be labeled as ‘Direct Skipped’ and this means they selected the skip task button without attempting to complete the task at all.

- People who completed all their tasks too quickly: those who were much faster than the median completion time listed in the Overview tab may have rushed their way through the activity and may not have given it much thought.

- People who took a very long time to complete the study: it’s possible they left it open on their computer while they did other things unrelated to your study. If they didn’t complete the whole tree test in one sitting, they may not have been as focused on it.
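If you have your participant data in a spreadsheet or export, you can screen for the same red flags before reviewing responses one by one. The sketch below is a minimal illustration in Python, not a Treejack feature. The `Participant` record, its field names and the threshold values (`skip_share`, `fast_factor`, `slow_factor`) are all assumptions to tune for your own study.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Participant:
    # Hypothetical record: field names are illustrative, not a Treejack export format.
    name: str
    tasks_total: int
    tasks_direct_skipped: int
    seconds_taken: float


def red_flags(participants, skip_share=0.5, fast_factor=0.33, slow_factor=3.0):
    """Return {participant name: [reasons]} for responses worth a manual look.

    All three thresholds are assumed defaults, not recommended values.
    """
    typical = median(p.seconds_taken for p in participants)
    flagged = {}
    for p in participants:
        reasons = []
        if p.tasks_direct_skipped / p.tasks_total > skip_share:
            reasons.append("skipped all or most tasks directly")
        if p.seconds_taken < typical * fast_factor:
            reasons.append("much faster than the median")
        if p.seconds_taken > typical * slow_factor:
            reasons.append("much slower than the median")
        if reasons:
            flagged[p.name] = reasons
    return flagged


# Made-up sample data for illustration only.
people = [
    Participant("P1", 10, 0, 300),
    Participant("P2", 10, 9, 280),
    Participant("P3", 10, 0, 40),
    Participant("P4", 10, 1, 2400),
    Participant("P5", 10, 0, 320),
]
for name, reasons in red_flags(people).items():
    print(name, "->", ", ".join(reasons))
```

A flag here is only a prompt to take a closer look at that participant, not an automatic reason to exclude them.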

Treejack also automatically excludes incomplete responses and marks them as ‘abandoned’, but you have full control over who is and isn’t included and you might like to reintroduce some of these results if you feel they’re useful. For example, you might like to bring back someone who completed 9 out of a total of 10 tasks before abandoning it as this might mean that they were interrupted or may have accidentally closed their browser tab or window before reaching the end.

You can add, remove or filter participant data from your overall results pool at any time during your analysis, but deciding who does and doesn’t belong at the very beginning will save you a lot of time and effort, a lesson I certainly learned the hard way.

Once you’re happy with the responses that will be included in your results, you’re good to go. If you made any changes, all you have to do is reload your results, which you can do from the Participants tab, and all your data on the other tabs will be updated to reflect your new participant pool.

Getting the most out of your pietrees

Pietrees are the heart and soul of Treejack. They bring all the data Treejack collected on your participants’ journeys for a single task during your study together into one interactive and holistic view. After gaining an overall feel for your results by reviewing the task by task statistics under the Task Results tab, pietrees are your next stop in advanced analysis in Treejack.

How big does a pietree grow?

Start by reviewing the overall size of the pietree. Is it big and scattered with small circles representing each node (also called a ‘pie’ or a ‘branch’)? Or is it small with large circular nodes? Or is it somewhere in between? The overall size of the pietree can provide insight into how long and complex your participants’ pathways to their nominated correct answer were.

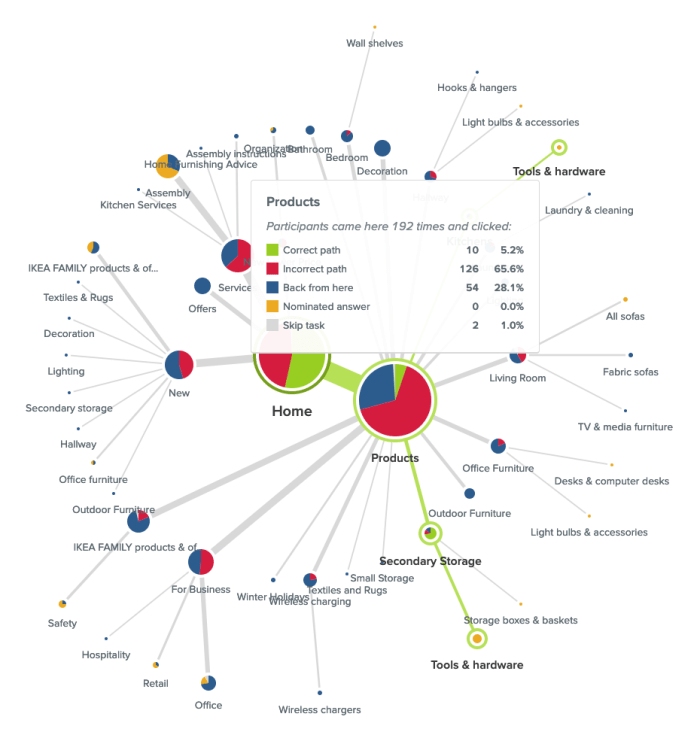

Smaller pietrees with bigger circular nodes, like the one shown in the example below from a study I ran in 2018 testing IKEA’s US website, happen when participants follow more direct pathways to their destination, meaning they didn’t stray from the path that you set as correct when you built the study.

Example of a smaller and more direct pietree taken from a study I ran on IKEA’s US website in 2018.

This is a good thing! You want your participants to be able to reach their goal quickly and directly without clicking off into other areas. When they can’t, and you end up with a much larger and more scattered pietree, the trail of breadcrumbs they leave behind will show you exactly where you’re going wrong, which is also a good thing! Larger and more scattered pietrees happen when participants follow indirect and winding pathways, and sometimes you’ll come across a pietree like the one shown below where just about every second and third level node has been clicked on.

This can indicate that people felt quite lost in general while trying to complete their task, because bigger pietrees tend to show large numbers of people clicking into the wrong nodes and immediately turning back. This is shown with red (incorrect path) and blue (back from here) color coding on the nodes of the tree, and you can view exactly how many people did this, along with the rest of that node’s activity, by hovering over each one (see image below).

In this case people were looking for an electric screwdriver and while ‘Products’ was the right location for that content, there was something about the labels underneath it that made 28.1% of its total visitors think they were in the wrong place and turn back. It could be that the labels need a bit of work or more likely that the placement of that content might not be right — ‘Secondary Storage’ and ‘Kitchens’ (hidden by the hover window in the image above) aren’t exactly the most intuitive locations for a power tool.

Labels that might unintentionally misdirect your users

When analyzing your pietree, keep an eye out for any labels that might be leading your users astray. Were there large numbers of people starting on or visiting the same incorrect node of your IA? In the example shown below, participants were attempting to replace lost furniture assembly instructions. The pietree for this task shows that the 2 very similar labels of ‘Assembly instructions’ (correct location) and ‘Assembly’ (incorrect location) were likely tripping people up: almost half the participants in the study were in the right place (‘Services’), but took a wrong turn and ultimately chose the wrong destination node.

There’s no node like home

Have a look at your pietree to see the number of times ‘Home’ was clicked. If that number is more than twice the number of participants, this can be a big indicator that people were lost in your IA tree overall. I remember running an intranet benchmarking tree test with around 80 participants where ‘Home’ had been clicked a whopping 648 times and the pietrees were very large and scattered. When people are feeling really lost in an IA, they’ll often click on ‘Home’ as a way to clear the slate and start their journey over again. The Paths tab, which we’re going to talk about next, will allow you to dig deeper into findings like this in your own studies.
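To make that rule of thumb concrete, here is a small Python sketch of the arithmetic using the numbers from the intranet study. The function names are made up for illustration, and since the participant count was only "around 80", treat the result as approximate.

```python
def home_clicks_per_participant(home_clicks, participants):
    """Average number of times 'Home' was clicked per participant."""
    return home_clicks / participants


def looks_lost(home_clicks, participants, threshold=2.0):
    """Apply the rule of thumb: more than twice as many 'Home' clicks as participants."""
    return home_clicks_per_participant(home_clicks, participants) > threshold


ratio = home_clicks_per_participant(648, 80)
print(f"{ratio:.1f} Home clicks per participant")  # 8.1 Home clicks per participant
print(looks_lost(648, 80))  # True
```

At roughly 8 ‘Home’ clicks per person, that study sat at about four times the threshold.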

Breaking down individual participant journeys in the Paths tab

While the pietrees bring all your participants’ task journeys together into one visualization, the Paths tab separates them out so you can see exactly what each individual got up to during each task in your study.

How many people took the scenic route?

As we discussed earlier, you want your IA to support your users and enable them to follow the most direct pathway to the content that will help them achieve their goal. The paths table will help show you if your IA is there yet or if it needs more work. Path types are color coded by directness and use arrows to show which direction participants were traveling in at each point of their journey, so you can see where in the IA they were moving forward and where they were turning back. You can also filter by path type by checking or unchecking the boxes next to the colors and their text labels at the top of the table.

Here’s what those types mean:

- Direct success: Participants went directly to their nominated response without backtracking and chose the correct option. Awesome!

- Indirect success: Participants clicked into a few different areas of the IA tree and turned around and went back while trying to complete their task, but still reached the correct location in the end.

- Direct failure: Participants went directly to their nominated response without backtracking but unfortunately did not find the correct location.

- Indirect failure: Participants clicked into a few different areas of the IA tree and some backtracking occurred, but they still weren’t able to find the correct location.

- Direct skip: Participants instantly skipped the task without clicking on any of your IA tree nodes.

- Indirect skip: Participants attempted to complete the task but ultimately gave up after clicking into at least one of your IA tree’s nodes.
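If it helps to see the logic behind these six labels, the Python sketch below classifies a single journey. It only illustrates the definitions above and is not how Treejack itself works. It assumes each click is recorded as a tuple giving the node’s full location in the tree, that any step which doesn’t move deeper counts as backtracking, and that the last click is the nominated answer. The tree fragment in the examples is illustrative.

```python
def classify_path(clicks, correct_nodes, skipped=False):
    """Label one participant's journey for one task.

    clicks: nodes in the order clicked, each a tuple giving its full
            location in the tree, e.g. ("Services", "Assembly instructions").
    correct_nodes: set of tuples marked as correct answers for the task.
    skipped: True if the participant used the skip button.
    """
    if skipped:
        return "Indirect skip" if clicks else "Direct skip"
    # Assumption: any step that does not move deeper into the tree is backtracking.
    backtracked = any(len(nxt) <= len(cur) for cur, nxt in zip(clicks, clicks[1:]))
    directness = "Indirect" if backtracked else "Direct"
    # Assumption: the final click is the participant's nominated answer.
    outcome = "success" if clicks and clicks[-1] in correct_nodes else "failure"
    return f"{directness} {outcome}"


correct = {("Services", "Assembly instructions")}

print(classify_path([("Services",), ("Services", "Assembly instructions")], correct))
# Direct success
print(classify_path([("Products",), ("Services",), ("Services", "Assembly instructions")], correct))
# Indirect success
print(classify_path([("Services",), ("Services", "Assembly")], correct))
# Direct failure
print(classify_path([("Products",)], correct, skipped=True))
# Indirect skip
```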

It’s also important to note that while some tasks may appear to be successful on the surface — e.g., your participants correctly identified the location of that content — if they took a convoluted path to get to that correct answer, something isn’t quite right with your tree and it still needs work. Success isn’t always the end of the story and failed tasks aren’t the only ones you should be checking for lengthy paths. Look at the lengths of all your paths to gain a full picture of how your participants experienced your IA.

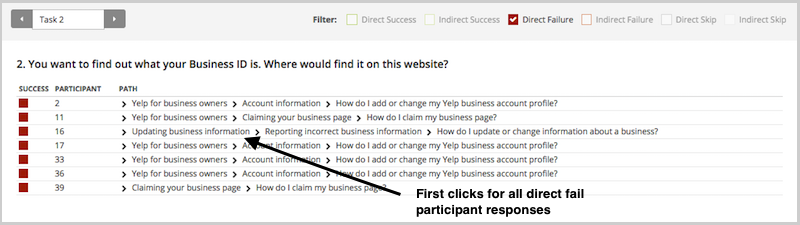

Take a closer look at the failed attempts

If you’re seeing large numbers of people failing tasks — either directly or indirectly — it’s worth taking a closer look at the paths table to find out exactly what they did and where they went. Did multiple people select the same wrong node? When people clicked into the wrong node, did they immediately turn back or did they keep going further down? And if they kept going, which label or labels made them think they were on the right track?

In a Sephora study example, there was a task where no one was successful in finding the correct answer. On that task, 22% of participants (7 people) started their journey on the wrong first node of ‘Help & FAQs’, and not one of those participants turned back beyond that Level 1 starting point (i.e., clicked on ‘Home’ to try another path). Some did backtrack during their journey, but only as far back as the ‘Help & FAQs’ node they started on, indicating that it was likely the label that made them think they were on the right track. We’ll also take a closer look at the importance of accurate first clicks later on in this guide.

How many people skipped the task and where?

Treejack allows participants to skip tasks either before attempting a task or during one. The paths table will show you which node the skip occurred at, how many other nodes were clicked before they threw in the towel and how close (or not) they were to successfully completing their task. People skipping tasks in the real world affects conversion rates and more, but if you can find out where it’s happening in the IA during a tree test, you can improve it and better support your users and in turn meet your business goals.

Coming back to that Sephora study, when participants were looking to book an in-store beauty consultation, Participant 14 (see below image) was in the right area of the IA a total of 5 times during their journey (‘About Sephora’ and ‘Ways to Shop’). Each time they were just 2-3 clicks away from finding the right location for that content, but ultimately ended up skipping the task. It’s possible that the labels on the next layer down didn’t give this participant what they needed to feel confident they were still on the right track.

Finding out if participants started out on the right foot in the First clicks tab

Borrowing a little functionality from Chalkmark, the First clicks tab in Treejack will help you understand whether your participants started their journey on the right foot, because that first click matters! Research has shown that people are 2-3 times as likely to successfully complete their task if they get their first click right.

This is a really cool feature to have in Treejack because Chalkmark is image based, and when you’re tree testing you don’t always have something visual to test. Besides, a huge part of getting the bones of an IA right is deliberately keeping the test free of visual distractions! Having this functionality in Treejack means you can start finding out if people are on the right track from a much earlier stage in your project, saving you a lot of time and messy guesswork.

Under the First clicks tab you will find a table with 2 columns. The first column shows which nodes of your tree were clicked first and the percentage of your participants that did that, and the second column shows the percentage of participants that visited that node during the task overall. The first column will tell you how many participants got their first click right (the correct first click nodes are shown in bold text) and the second will tell you how many found their way there at some point during their journey overall, including those who went there first.
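As a rough illustration of how those 2 columns relate to the underlying journeys, the Python sketch below builds the same kind of table from a handful of made-up paths. The node labels and the `first_click_table` function are invented for the example and are not part of Treejack.

```python
from collections import Counter


def first_click_table(paths):
    """Return {node: (% clicked first, % visited at any point)}.

    paths: one list of node labels per participant, in click order.
    Only nodes that were someone's first click get a row.
    """
    total = len(paths)
    first = Counter(path[0] for path in paths if path)
    # set(path) so each participant counts once per node, however often they returned.
    visited = Counter(node for path in paths for node in set(path))
    return {
        node: (round(100 * first[node] / total), round(100 * visited[node] / total))
        for node in first
    }


# Made-up journeys for four participants.
paths = [
    ["Help", "Delivery"],
    ["About", "Help", "Delivery"],
    ["About", "Careers"],
    ["Help", "Delivery"],
]
print(first_click_table(paths))
# {'Help': (50, 75), 'About': (50, 50)}
```

Here ‘Help’ was the first click for half the participants but was visited by three quarters of them, so one person found their way there after starting somewhere else.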

Have a look at how many participants got their first click right and how many didn’t. For those who didn’t, where did they go instead?

Also look at how the percentage of correct first clicks compares to the percentage of participants who visited that node at any point. Is the number in the second column the same or is it bigger? How much bigger? Are people missing the first click but still making it there in the end? Not the greatest experience, but better than nothing! Besides, that task’s paths table and pietree will help you pinpoint the exact location of the issues so you can fix them.

When considering first-click data in your own Treejack study, just like you would with the pietrees, use the data under the Task Results tab as a starting point to identify which tasks you’d like to take a closer look at. For example, in that Sephora study I mentioned, Task 5 showed some room for improvement. Participants were tasked with finding out if Sephora ships to PO boxes and only 44% of participants were able to do this, as shown in the image below.

Looking at the first click table for this task (below), we can see that only 53% of participants overall started on the right first click which was ‘Help & FAQs’ (as shown in bold text).

Almost half the participants who completed this task started off on the wrong foot and a quarter overall clicked on ‘About Sephora’ first. We also know that 69% of participants visited that correct first node during the task which shows that some people were able to get back on track, but almost a third of participants still didn’t go anywhere near the correct location for that content. In this particular case, it’s possible that the correct first click of ‘Help & FAQs’ didn’t quite connect with participants as the place where postage options can be found.
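The figures in that paragraph all fall out of the 2 percentages in the first click table. Here is the arithmetic as a small Python sketch. The function name and labels are made up for illustration, and it relies on the second column including everyone who clicked the correct node first.

```python
def first_click_breakdown(first_click_pct, visited_pct):
    """Split participants by their relationship to the correct first-click node.

    first_click_pct: % who clicked the correct node first.
    visited_pct: % who visited it at any point (includes the first-clickers).
    """
    return {
        "wrong first click": 100 - first_click_pct,
        "recovered after a wrong first click": visited_pct - first_click_pct,
        "never visited the correct node": 100 - visited_pct,
    }


# Task 5: 53% clicked 'Help & FAQs' first, 69% visited it overall.
for label, pct in first_click_breakdown(53, 69).items():
    print(f"{label}: {pct}%")
# wrong first click: 47%
# recovered after a wrong first click: 16%
# never visited the correct node: 31%
```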

Discovering the end of the road in the Destinations tab

As we near the end of this advanced Treejack analysis guide, our last stop is the Destinations tab. Under here you’ll find a detailed matrix showing where your participants ended their journeys for each task across your entire study. It’s a great way to quickly see how accurate those final destinations were and if they weren’t, where people went instead. It’s also useful for tasks that have multiple correct answers because it can tell you which one was most popular with participants and potentially highlight opportunities to streamline your IA by removing unnecessary duplication.

Along the vertical axis of the grid you’ll find your entire IA tree expanded out and along the horizontal axis, you’ll see your tasks shown by number. For a refresher on which task is which, just hover over the task number on the very handy sticky horizontal axis. Where these 2 meet in the grid, the number of participants who selected that node of the tree for that task will be displayed. If there isn’t a number in the box — regardless of shading — no one selected that node as their nominated correct answer for that task.

The boxes corresponding to the correct nodes for each task are shaded in green. Numberless green boxes can tell you in one quick glance if people aren’t ending up where they should be and if you scroll up and down the table, you’ll be able to see where they went instead.

Red boxes with numbers indicate that more than 20% of people incorrectly chose that node, and the number shows how many did. Orange boxes with numbers do the same for nodes selected by between 10% and 20% of people. Finally, boxes with numbers and no shading indicate that fewer than 10% of people selected that node.
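The shading rules can be summed up in a few lines of Python. This is only a sketch of the thresholds described above, not Treejack’s own logic. How the exact 10% and 20% boundaries are handled is an assumption (both are treated as orange here), and the function name and participant count are made up.

```python
def cell_shading(count, participants, is_correct):
    """Return the shading for one cell of the Destinations grid."""
    if is_correct:
        return "green"  # correct nodes are green whether or not anyone chose them
    if count == 0:
        return "none"  # empty box: nobody chose this node for this task
    pct = 100 * count / participants
    if pct > 20:
        return "red"
    if pct >= 10:  # assumption: exactly 10% and exactly 20% both count as orange
        return "orange"
    return "none"


# Hypothetical study with 40 participants.
print(cell_shading(9, 40, is_correct=False))  # red (22.5%)
print(cell_shading(6, 40, is_correct=False))  # orange (15%)
print(cell_shading(3, 40, is_correct=False))  # none (7.5%)
print(cell_shading(0, 40, is_correct=True))   # green
```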

In the below example taken from that Sephora study we’ve been talking about in this guide, we can see that ‘Services’ was one of the correct answers for Task 4 and no one selected it.

The Destinations table is as long as the IA when it’s fully expanded and when we scroll all the way down through it (see below), we can see that there were a total of 3 correct answers for Task 4. For this task, 8 participants were successful and their responses were split across the 2 locations for the more specific ‘Beauty Services’ with the one under ‘Book a Reservation’ being the most popular and potentially best placed because it was chosen by 7 out of the 8 participants.

When viewed in isolation, each tab in Treejack offers a different and valuable perspective on your tree test data and when combined, they come together to build a much richer picture of your study results overall. The more you use Treejack, the better you’ll get at picking up on patterns and journey pathways in your data and you’ll be mastering that IA in no time at all!

Further reading

- Our handy Tree Testing 101 guide

- Website review: ASOS

- The information architecture of libraries part 2: Library of Congress Classification