AI is transforming how businesses approach product development. From AI-powered chatbots and recommendation engines to predictive analytics and generative models, AI-first products are reshaping user interactions with technology, which in turn impacts every phase of the product development lifecycle.

Whether you're skeptical about AI or enthusiastic about its potential, the fundamental truth about product development in an AI-driven future remains unchanged: a product is only as good as its user experience.

No matter how powerful the underlying AI, if users don't trust it, can't understand it, or struggle to use it, the product will fail. Good UX isn't simply an add-on for AI-first products; it's a fundamental requirement.

Why UX Is More Critical Than Ever

Unlike traditional software, where users typically follow structured, planned workflows, AI-first products introduce dynamic, unpredictable experiences. This creates several unique UX challenges:

- Users struggle to understand AI's decisions – Why did the AI generate this particular response? Can they trust it?

- AI doesn't always get it right – How does the product handle mistakes, errors, or bias?

- Users expect AI to "just work" like magic – If interactions feel confusing, people will abandon the product.

AI only succeeds when it's intuitive, accessible, and easy to use, which are the fundamental components of good user experience. That's why product teams need to embed strong UX research and design into AI development, right from the start.

Key UX Focus Areas for AI-First Products

To Trust Your AI, Users Need to Understand It

AI can feel like a black box: users often don't know how it works or why it makes certain decisions or recommendations. If people don't understand or trust your AI, they simply won't use it. The user experiences you build for an AI-first product must be grounded in transparency.

What does a transparent experience look like?

- Show users why AI makes certain decisions (e.g., "Recommended for you because…")

- Allow users to adjust AI settings to customize their experience

- Enable users to provide feedback when AI gets something wrong, and offer ways to correct it

A strong example: Spotify's AI recommendations explain why a song was suggested, so users can see the reasoning behind each recommendation.

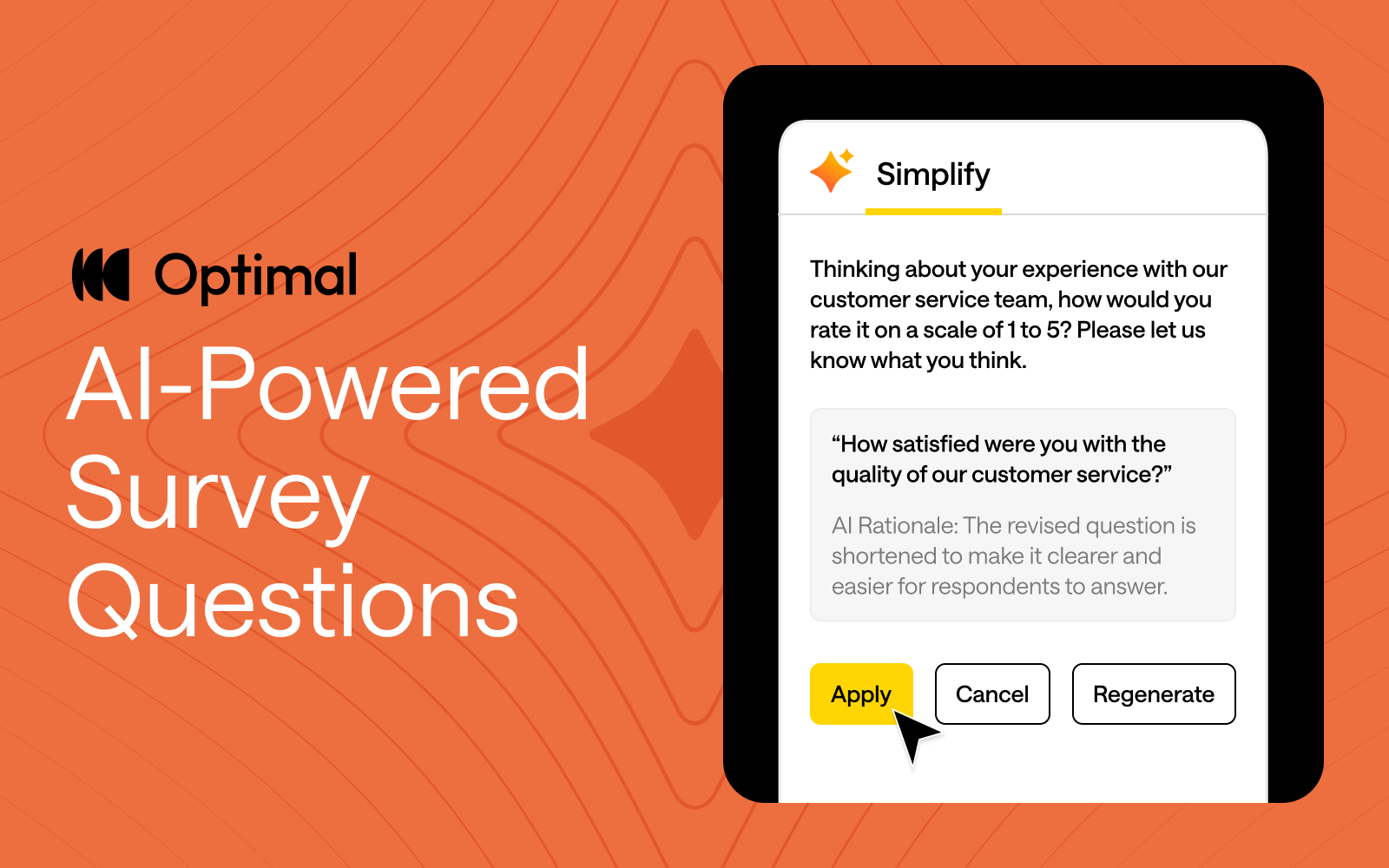
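For teams wondering what this might look like in practice, here is a minimal Python sketch of a recommendation that carries its own explanation and accepts user feedback. The `Recommendation` class, its fields, and the sample data are all hypothetical, chosen only for illustration; they are not taken from any real product's API.

```python
from dataclasses import dataclass, field


@dataclass
class Recommendation:
    """Hypothetical shape for a recommendation that can explain itself."""
    item: str
    reason: str  # user-facing text that completes "Recommended for you because..."
    feedback: list[str] = field(default_factory=list)

    def explain(self) -> str:
        # Surface the reason alongside the item instead of hiding it.
        return f"{self.item}: recommended for you because {self.reason}"

    def flag(self, note: str) -> None:
        # Record the user's correction so it can be reviewed or fed back later.
        self.feedback.append(note)


rec = Recommendation(
    item="Evening Jazz playlist",
    reason="you listened to three jazz albums this week",
)
print(rec.explain())
rec.flag("Not interested in jazz playlists")
```

The point of the sketch is that the explanation and the feedback channel live on the recommendation itself, so the interface never has to show a suggestion without a reason or a way to push back.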

AI Should Augment Human Expertise, Not Replace It

AI often goes hand-in-hand with automation, but full automation ignores one of AI's biggest limitations: it struggles to incorporate human nuance and intuition into its recommendations or answers. Even when an AI product aims to feel seamless and automated, users need clarity on what's happening when the AI makes mistakes.

How can you address this? Design for AI-Human Collaboration:

- Guide users on the best ways to interact with and extract value from your AI

- Provide the ability to refine results so users feel in control of the end output

- Offer a hybrid approach: allow users to combine AI-driven automation with manual/human inputs

Consider Google's Gemini AI, which lets users edit generated responses rather than forcing them to accept the AI's output as final. It's a thoughtful approach to human-AI collaboration.

Validate and Test AI UX Early and Often

Because AI-first products offer dynamic experiences that can behave unpredictably, traditional usability testing isn't sufficient. Product teams need to test AI interactions across multiple real-world scenarios before launch to ensure their product functions properly.

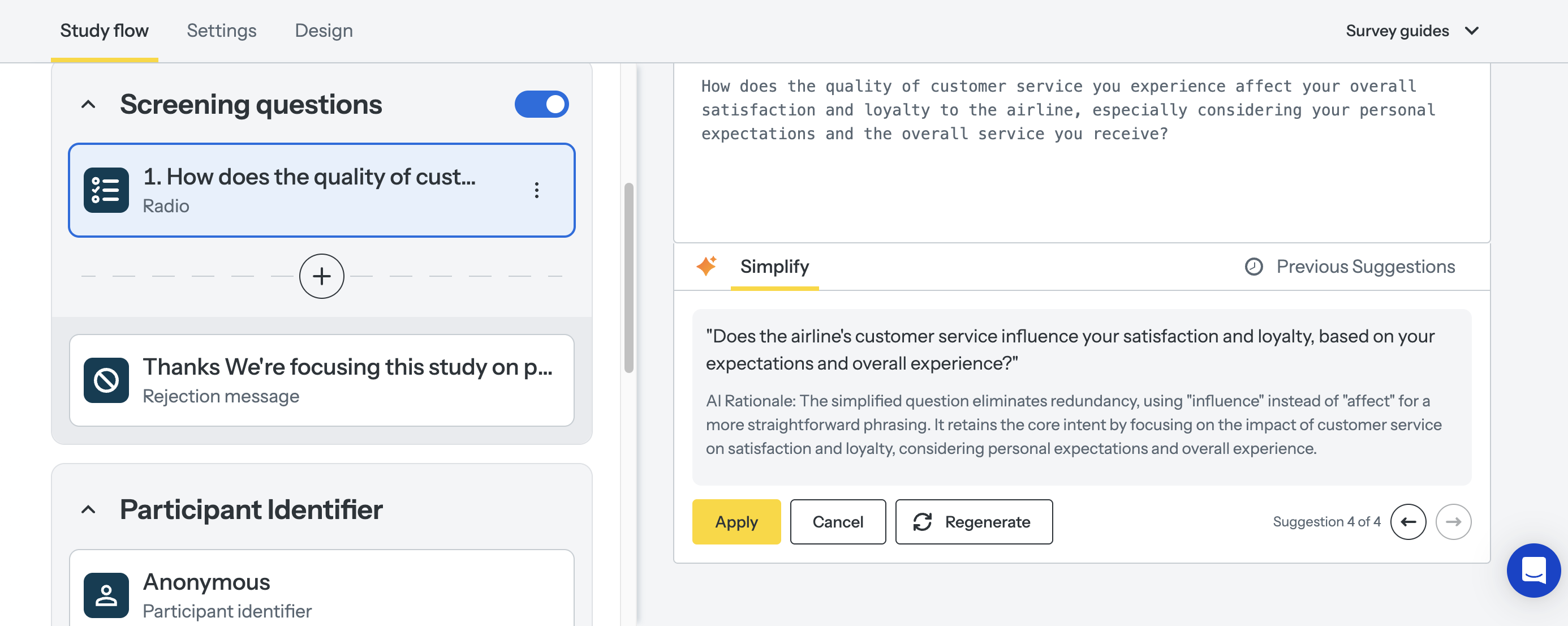

Run UX Research to Validate AI Models Throughout Development:

- Implement First Click Testing to verify users understand where to interact with AI

- Use Tree Testing to refine chatbot flows and decision trees

- Conduct longitudinal studies to observe how users interact with AI over time

One notable example: a leading tech company used Optimal to test its new AI product with 2,400 global participants. The results helped the team refine navigation and conversion points, which ultimately improved engagement and retention.
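To make the first-click idea above a little more concrete, here is a rough Python sketch of how results from such a test might be summarized: the share of participants whose first click landed on the intended AI entry point, plus a breakdown of where everyone else clicked. The function name, the element labels, and the sample data are all made up for illustration; this is not how any particular testing tool reports its results.

```python
from collections import Counter


def first_click_summary(clicks, correct_target):
    """Return (success_rate, breakdown) for a list of first-click targets.

    `clicks` holds one label per participant: the element they clicked first.
    """
    counts = Counter(clicks)
    success_rate = counts[correct_target] / len(clicks) if clicks else 0.0
    return success_rate, counts.most_common()


# Hypothetical results from five participants asked to "get help from the AI".
clicks = ["ask_ai_button", "search_bar", "ask_ai_button", "help_menu", "ask_ai_button"]
rate, breakdown = first_click_summary(clicks, "ask_ai_button")

print(f"First-click success: {rate:.0%}")
for target, count in breakdown:
    print(f"  {target}: {count}")
```

A low success rate paired with a cluster of clicks on one wrong element is often more useful than the rate alone, because it shows where users expected the AI to be.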

The Future of AI Products Relies on UX

The bottom line is that AI isn't replacing UX; it's making good UX even more essential. The more sophisticated the product, the more product teams need to invest in regular research, transparency, and usability testing to ensure they're building products people genuinely value and enjoy using.

Want to improve your AI product's UX? Start testing with Optimal today.