AI creates beautiful designs, but only humans can validate if they work

Let's talk about something that's fundamentally reshaping product development: AI-generated design. This isn't just a passing trend; it's transforming the design workflow as we know it.

Today's AI design tools aren't just creating mockups; they're generating entire design systems, producing variations at scale, and predicting user preferences before you've even finished your prompt. Instead of spending hours on iterations, designers are exploring dozens of directions in minutes.

This is where platforms like Lovable shine. Their vibe coding approach generates design directions from emotional and aesthetic inputs rather than from functional requirements alone. While this AI-powered innovation is impressive, it raises a critical question for everyone creating digital products: how do we ensure these AI-generated designs actually resonate with real people?

The Gap Between AI Efficiency and Human Connection

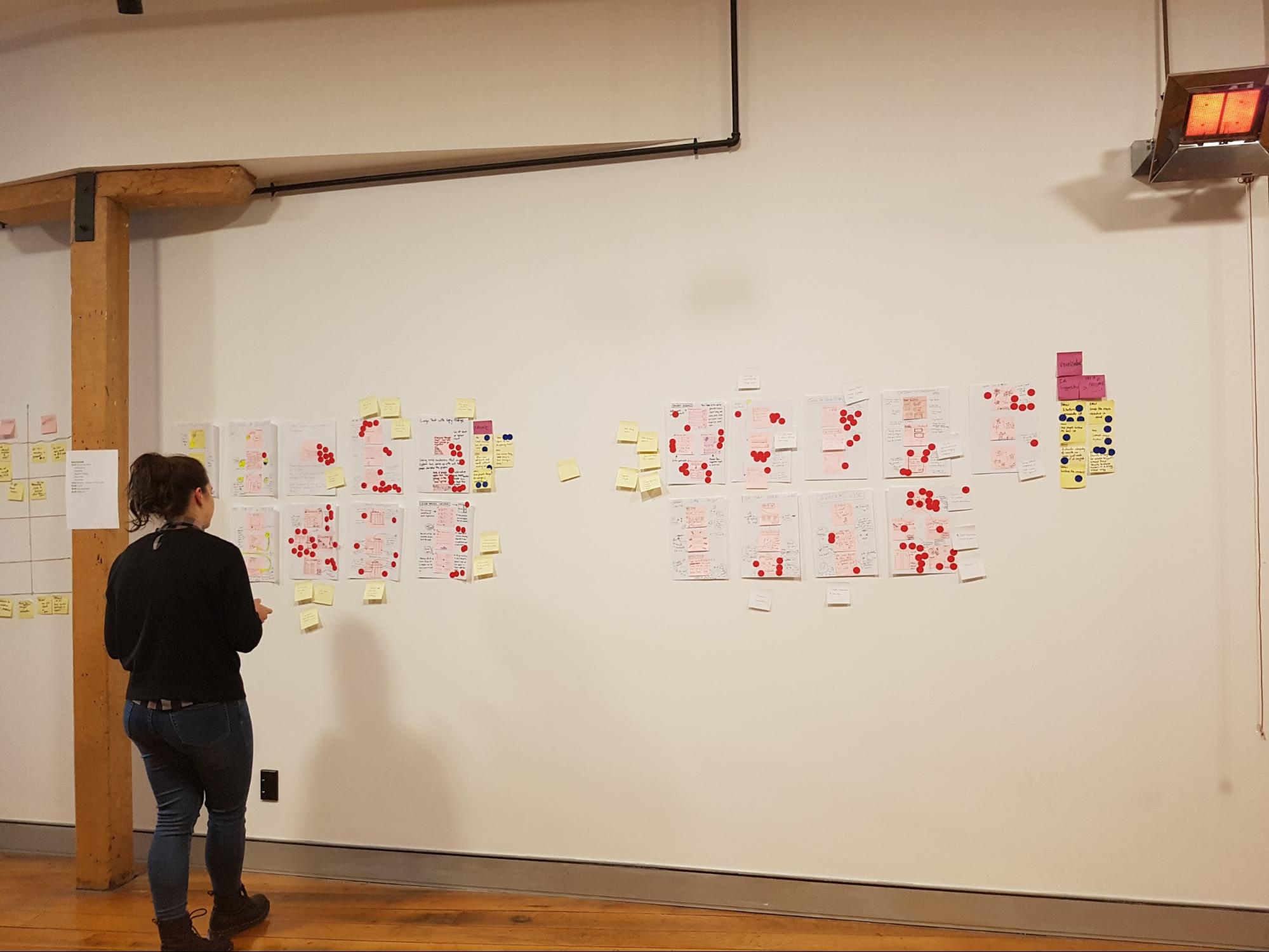

The design process has fundamentally shifted. Instead of building from scratch, designers are prompting and curating. Rather than crafting each pixel, they're directing AI to explore design spaces.

The whole interaction feels more experimental. Designers are using natural language to describe desired outcomes, and the AI responses feel like collaborative explorations rather than final deliverables.

This shift has major implications for product teams:

- Product managers, you need to balance AI efficiency with proven user validation methods so that designs solve actual user problems.

- UX designers, you're now curating and refining AI outputs. When AI generates interfaces, will real users understand how to use them?

- Visual designers, your expertise is evolving. You need to develop prompting skills while maintaining your critical eye for what actually works.

- UX researchers, you face an urgent need to validate AI-generated designs with real human feedback before implementation.

The Future of Design: AI Innovation + Human Validation

As AI design tools become more powerful, the teams that thrive will be those that balance technological innovation with human understanding. The winning approach isn't AI alone or human-only design; it's the thoughtful integration of both.

Why Human Validation Is Essential for AI-Generated Designs

AI is revolutionizing design creation, but it has inherent limitations that only human validation can address:

- AI Lacks Contextual Understanding: While AI can generate visually impressive designs, it doesn't truly understand the cultural nuances, emotional responses, or lived experiences of your users. Only human feedback can verify whether an AI-generated interface feels intuitive rather than merely looking good.

- The "Uncanny Valley" of AI Design: AI-generated designs sometimes create an "almost right but slightly off" feeling: technically correct, but missing the human touch. Real user testing helps identify these subtle disconnects, which might otherwise go unnoticed by design teams.

- AI Reinforces Patterns, Not Breakthroughs: AI models are trained on existing design patterns, so they excel at iteration but struggle with true innovation. Human validation helps identify when AI-generated designs feel derivative and when they create genuine emotional connections with users.

- Diverse User Needs Require Human Insight: Without explicit prompting, AI may not account for accessibility considerations, cultural sensitivities, or edge cases. Human validation helps ensure designs work for your entire audience, not just the statistical average.

The Multiplier Effect: Why AI + Human Validation Outperforms Either Approach Alone

The combination of AI-powered design and human validation creates a virtuous cycle that elevates both:

- From Rapid Iteration to Deeper Insights: AI lets teams put more design variations in front of users than ever before, so human testing yields richer comparative data. This breadth of exploration was impractical with human-only design processes.

- Continuous Learning Loop: Human validation of AI designs produces feedback that improves future AI prompts. Over time, this compounds: the designs your prompts produce become increasingly aligned with real user preferences.

- Scale + Depth: AI provides the scale to generate numerous options, while human validation provides the depth of understanding needed to select the right ones. Together, they cover both the breadth and the depth that effective design requires.

At Optimal, we're committed to helping you navigate this new landscape by providing the tools you need to ensure AI-generated designs truly resonate with the humans who will use them. Our human validation platform is the essential complement to AI's creative potential, turning promising designs into proven experiences.

Introducing the Optimal + Lovable Integration: Bridging AI Innovation with Human Validation

At Optimal, we've always believed in the power of human feedback to create truly effective designs. Now, with our new Lovable integration, we're making it easier than ever to validate AI-generated designs with real users.

Here's how our integrated approach works:

1. Generate Innovative Designs with Lovable

Lovable allows you to:

- Explore emotional dimensions of design through AI prompting

- Generate multiple design variations in minutes

- Create interfaces that feel aligned with your brand's emotional targets

2. Validate Those Designs with Optimal

Interactive Prototype Testing: Our integration lets you import Lovable designs directly as interactive prototypes, allowing users to click, navigate, and experience your AI-generated interfaces in a realistic environment. This reveals critical insights about how users naturally interact with your design.

Ready to Transform Your Design Process?

Try our Optimal + Lovable integration today and experience the power of combining AI innovation with human validation. Your first study is on us! See firsthand how real user feedback can elevate your AI-generated designs from interesting to truly effective.
