Optimal Blog

Articles and Podcasts on Customer Service, AI and Automation, Product, and more

In our Value of UX Research report, nearly 70% of participants identified analysis and synthesis as the area where AI could make the biggest impact.

At Optimal, we're all about cutting the busywork so you can spend more time on meaningful insights and action. That’s why we’ve built automated Insights, powered by AI, to instantly surface key themes from your survey responses.

No extra tools. No manual review. Just faster insights to help you make quicker, data-backed decisions.

What You’ll Get with Automated Insights

- Instant insight discovery

Spot patterns instantly across hundreds of responses without reading every single one. Get insights served up with zero manual digging or theme-hunting.

- Insights grounded in real participant responses

We show the numbers behind every key takeaway, including percentage and participant count, so you know exactly what’s driving each insight. And when participants say it best, we pull out their quotes to bring the insights to life.

- Zoom in for full context

Want to know more? Easily drill down to the exact participants behind each insight for open text responses, so you can verify, understand nuances, and make informed decisions with confidence.

- Segment-specific insights

Apply any segment to your data and instantly uncover what matters most to that group. Whether you’re exploring by persona, demographic, or behavior, the themes adapt accordingly.

- Available across the board

From survey questions to pre- and post-study, and post-task questions, you’ll automatically get Insights across all question types, including open text questions, matrix, ranking, and more.

Automate the Busywork, Focus on the Breakthroughs

Automated Insights are just one part of our ever-growing AI toolkit at Optimal. We're making it easier (and faster) to go from raw data to real impact, such as our AI Simplify tool to help you write better survey questions, effortlessly. Our AI assistant suggests clearer, more effective wording to help you engage participants and get higher-quality data.

Ready to level up your UX research? Log into your account to get started with these newest capabilities or sign up for a free trial to experience them for yourself.


13 time-saving tips and tools for conducting great user interviews

User interviews are a great research method you can use to gain qualitative data about your users, and understand what they think and feel. But they can be quite time consuming, which can sometimes put people off doing them altogether.

They can be a bit of a logistical nightmare to organize. You need to recruit participants, nail down a time and place, bring your gear, and come up with a Plan B if people don’t show up. All of this can take a fair bit of back-and-forth between your research team and other people, and it’s a real headache when you have a deadline to work to.

So, how can you reap the great rewards and insights that user interviews provide, while spending less time planning and organizing them? Here are 13 tips and tools to help get you started.

Preparation

1) Come up with a checklist

Checklists can be lifesavers, especially when your brain is running 100 miles an hour and you’re wondering if you’ve forgotten to even introduce yourself to your participant.

Whether you’re doing your research remotely or in person, it always helps to have a list of all the tasks you need to do so you can check them off one by one.

A great checklist should include:

- the items you need to bring to your sessions (notebooks, laptop, pens, water, and do NOT forget your laptop charger!)

- any links you need to send to your interviewee if speaking to them remotely (Google Hangouts, Webex, etc.)

- a reminder to get consent to record your interview session

- a reminder to hit the record button

Scripts are also useful for cutting down time. Instead of “umm-ing” and “ahh-ing” your way through your interview, you can have a general idea of what you’ll talk about. Scripts will likely change between each project, but having a loose template that you can chop and change pretty easily will help you save time in the future.

Some basic things you’ll want to include in your script:

- an introduction of yourself, and some ice-breaker questions to build a rapport with your participant

- your research goals and objectives — what/who you’re doing this research for and why

- how your research will be used

- the questions you’re going to ask

- tying up loose ends — answering questions from your participant and thanking them very much for their time.

2) Build up a network of participants to choose from

This is another tip that requires a bit of legwork at the start, but saves lots of hassle later on. If you build up a great network of people willing to take part in your research, recruiting can become much easier.

Perhaps you can set up a research panel that people can opt into through your website (something we’ve done here at Optimal Workshop that has been a huge help). If you’re working internally and need to interview users at your own company, you can do a similar thing. Reach out to managers or team leaders to get employees on board, get creative with incentives, or reward people publicly with thanks or cakes. There are loads of ideas.

3) Do your interviews remotely

Remote user research is great. It allows you to talk to all types of people anywhere in the world, without having to spend time and money on travel to get to them.

There are many different tools you can use to conduct your user interview remotely. Some easy-to-use, free ones are Google Hangouts and Skype. As a bonus, it’s likely your participants will already have one of these installed, saving them time and hassle. Just don’t forget to record your session.

Here are a few recording tools you can use:

- QuickTime

- iShowU HD

- Pamela for Skype

4) Rehearse, rehearse, rehearse

Make sure you’re not wasting any precious research time and rehearse your interview with a colleague or friend. This will help you figure out anything you’ve missed, or what could potentially go wrong that could cause you time delays and headaches on the day.

- Do your questions make sense, and are they the right kinds of questions?

- Test your responses — are you making sure you stay neutral so you don’t lead your participants along?

- Does your script flow naturally? Or does it sound too scripty?

- Are there any areas that technology could become a hindrance, and how can you make sure you avoid this?

5) Use scheduling tools to book sessions for you

Setting up meetings with colleagues can be difficult, but when you’re reaching out to participants who are volunteering their precious time it can be a nightmare.

Make it easier for all involved and use a scheduling tool to get rid of most of the hard work. Simply enter a few times that you’re free to host sessions, and your participants can select which ones work for them.

Here are a few tools to get you started:

- Calendly

- NeedtoMeet

- Boomerang Calendar

- ScheduleOnce

Don’t forget to automate the reminder emails to save yourself some time. Some of the above tools can sort that out for you!

In-session

6) Avoid talking about yourself — stick to your script!

When you’re trying to build a rapport with your participant, it’s easy to go overboard, get off track and waste precious research time. Avoid talking about yourself too much, and focus on asking about your participant, how they feel, and what they think. Make sure you keep your script handy so you know if you’re heading in the wrong direction.

7) Record interviews, transcribe later

In many user interview scenarios, you’ll have a notetaker to jot down key observations as your session goes on. But if you don’t have the luxury of a notetaker, you’ll likely be relying on yourself to take notes. This can be really distracting when you’re interviewing someone, and will also take up precious research time. Instead, record your interview and only note down timestamps when you come across a key observation.

8) Don’t interrupt

Ever had something to say and started to explain it to someone, only to get interrupted then lose your train of thought? This can happen to your participants if you’re not careful, which can mean delays with getting the information you need. Stay quiet, and give your participant a few seconds before asking what they’re thinking.

9) Don’t get interrupted

If you’re hosting your interview at your office, let your coworkers know so they don’t interrupt you. Hang a sign up on the door of your meeting room and make sure you close the door. If you’re going out of your office, pick a location that’s quiet and secluded like a meeting room at a library, or a quiet corner in a cafe.

10) Take photos of the environment

If you’re interviewing users in their own environment, there are many little details that can help you with your research. But you could spend ages taking note of all these details in your session. You can get a good idea of what your participant’s day is like by snapping some images of their workstations, tech they use, and the office as a whole. Use your phone and pop these into Evernote or Dropbox to analyze later.

Analysis

11) Use Reframer to analyze your data

Qualitative research produces very powerful data, but it also produces a lot of it. It can take you and your team hours, even days, to go through it all.

Use a qualitative research tool such as Reframer to tag your observations so you can easily build themes and find patterns in your data while saving hours of analysis. Tags might be related to a particular subject you’re discussing with a participant, a really valuable quote, or even certain problems your participants have encountered; it all depends on your project.

12) Make collaboration simple

Instead of spending hours writing up some of your findings on Post-it notes and sticking them up on a wall to discuss with your teammates, you can quickly and easily do this online with Trello or MURAL. This is definitely a big timesaver if you’ve got some team members who work remotely.

13) Make your findings easy to read

Presenting your findings to stakeholders can be difficult, and extremely time consuming if you need to explain it all in easy-to-understand terms. Save time and make it easier for your stakeholders by compiling your findings into an infographic, engaging data visualization, or slideshow presentation. Just make sure you bring all the stats you need to answer any questions from stakeholders.

For more actionable tips and tricks from UX professionals all over the world, check out our latest ebook. Download and print out templates and checklists, and become a pro for your next user interview.

Related reading

- "Individual interviews" - An article from Usability.gov explaining how, when, and why you should conduct user interviews with individuals.

- "Interviewing users" - A guide from Nielsen Norman Group showing us the situations in which user interviews are best suited, and the kind of information they can provide us.

- "Open-ended versus close-ended questions in user research" - Another article from Nielsen Norman Group explaining the differences in question types.

Understanding a museum’s digital audience

Ahead of her talk at UX New Zealand 2016, Lana Gibson from Lanalytics writes about a project she worked on with Te Papa.

Te Papa (a museum in Wellington, New Zealand) created audience personas based on user research, and I used these as a basis to create audience segments in Google Analytics to give us further insight into different groups. By regularly engaging with our audience using both qualitative and quantitative user insight methods, we’re starting to build up a three-dimensional picture of their needs and how Te Papa can serve them.

Personas based on user research

At Te Papa the digital team created six audience personas to inform their site redesign, based on user research:

- enthusiast

- tourist

- social

- educator

- volunteer

- Wellingtonian

These formed a good basis for understanding why people are using the site. For example, the educator persona wants fodder for lesson plans for her class: trustworthy, subject-based resources that will excite her students. The tourist persona wants practical information, such as what’s on and how to plan a visit. And they want to get this information quickly and get on with their visit.

We’ll follow the tourist persona through a couple more rounds of user research, to give an example of what you can find out by segmenting your audience.

Interpreting tourist needs with data

Te Papa holds information for the Tourist audience in the Visit and What’s on sections of the site. I created a segment in Google Analytics which filters the reports to show how people who visit pages within these two sections interact with the whole site. For example, the reports show the keywords they search for in Google before arriving on Te Papa, what they search for when on the site, and how many of them email us.

Deeper digging revealed that the Tourist audience makes up about half of our overall audience. Because the content is useful to everyone wanting to visit the museum, and not just tourists, we broadened the scope of this persona and called the segment ‘Museum visitor’.

Why segment by site category — what if the audience goes beyond these pages?

Google Analytics segments allow you to see all the pages that a particular audience visits, not just the ones you’ve filtered. For example, over 2,000 people who visited a page within the Visit and What’s on sections also visited the Kids and families section in July 2016. So, the audience segment allows us to expand our concept of our audiences.

You can segment by a lot of different behaviors. For example, you could segment visitors by keyword, isolating people who come to the site from Google after searching for ‘parking’ and ‘opening hours’ and seeing what they do afterwards. But segmenting by site category tests the information architecture of your site, which can be very useful if you’ve got it wrong!
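To make the idea of a section-based segment concrete, here is a minimal Python sketch. It does not use the Google Analytics API; the visitor IDs, page paths, and URL prefixes are all made up for illustration, and the helper names are hypothetical. It builds a segment from anyone who viewed a page under two sections, then counts which sections of the whole site those same visitors reached.

```python
from collections import defaultdict

# Hypothetical page views as (visitor_id, page_path) pairs. Illustrative data only.
page_views = [
    ("v1", "/visit/opening-hours"),
    ("v1", "/kids-and-families/activities"),
    ("v2", "/collections/art"),
    ("v3", "/whats-on/exhibitions"),
    ("v3", "/visit/parking"),
    ("v4", "/kids-and-families/activities"),
]

# Assumed URL prefixes for the two sections that define the segment.
SEGMENT_SECTIONS = ("/visit/", "/whats-on/")


def build_segment(views, prefixes):
    """Return the visitors who viewed at least one page under the given prefixes."""
    return {visitor for visitor, path in views if path.startswith(prefixes)}


def section_counts(views, segment):
    """Count distinct segment visitors for every top-level section they viewed."""
    visitors_by_section = defaultdict(set)
    for visitor, path in views:
        if visitor in segment:
            section = path.strip("/").split("/")[0]
            visitors_by_section[section].add(visitor)
    return {section: len(ids) for section, ids in visitors_by_section.items()}


segment = build_segment(page_views, SEGMENT_SECTIONS)
counts = section_counts(page_views, segment)
print(sorted(segment))
print(counts)
```

The key point is that the segment is defined by a filter on two sections, but the report covers every page those visitors saw, which is how sections outside the filter (here, the made-up "kids-and-families" path) show up in the results.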

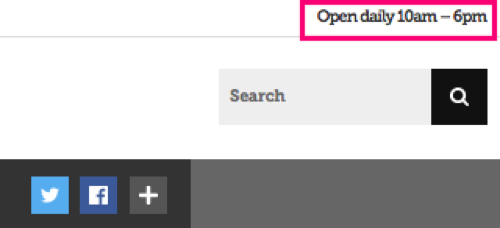

Visit persona wants opening hours information

What did we learn from these personas? One example is that the most searched term on the site for the Visit persona was ‘opening hours’. To help fix this, the team put the opening hours on every page of the redesigned site.

This resulted in a 90% drop in searches that include ‘hours’ (May 2016 compared with May 2015).

Developing personas with Matariki

After the re-design the team ran a project to increase the reach and engagement of the Te Papa Matariki audience. You can read more about this in "Using data to help people celebrate Matariki". Te Papa holds Matariki events in the museum, such as the Kaumātua kapa haka, and this event in particular enhanced and challenged our ideas about this audience.

Experiencing Kaumātua kapa haka performances online

The Kaumātua kapa haka is the biggest Matariki event held at Te Papa, and this year we had 4,000 unique page views to the two Kaumātua kapa haka event pages. Traffic spiked over the event weekend, particularly from Facebook and mobile devices. Because these pages sit in the What’s on section, we assumed the traffic was from people who were planning to come to the event. But further analysis indicates that people were visiting for the live streaming of the event, as we included embedded YouTube videos on these pages.

The popularity of the videos suggests that we’re taking events held within the museum walls out to people on the move, or in the comfort of their own homes. Based on this insight we’re looking into live streaming more events.

We’ve taken Te Papa personas through three iterations, based on user research, analytics, then a practical application of these to the Matariki festival. Each user research method has limitations, but by regularly using qualitative and quantitative methods we’re engaging with a three dimensional view of our audience that’s constantly evolving. Each user research piece builds that view, and allows us to plan projects and site changes with greater clarity about what our users need. It means we can plan projects that will have real and measurable impact, and allow people to engage with Te Papa in useful and meaningful ways.

Want to hear more? Come to UX New Zealand!

If you'd like to hear more about how Lana and Ruth redesigned the Te Papa website, plus a bunch of other cool UX-related talks, head along to UX New Zealand 2016 hosted by Optimal Workshop. The conference runs from 12-14 October, 2016, including a day of fantastic workshops, and you can get your tickets here. Got some questions you'd like to ask Lana before the conference? You can Tweet her on @lanalytics00!

Card descriptions: Testing the effect of contextual information in card sorts

The key purpose of running a card sort is to learn something new about how people conceptualize and organize the information that’s found on your website. The insights you gain from running a card sort can then help you develop a site structure with content labels or headings that best represent the way your users think about this information. Card sorts are in essence a simple technique, however it’s the details of the sort that can determine the quality of your results.

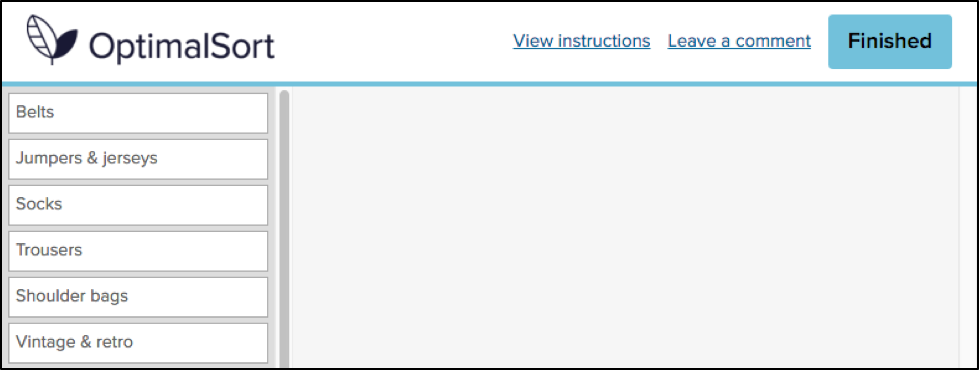

Adding context to cards in OptimalSort – descriptions, links and images

In most cases, each item in a card sort has only a short label, but there are instances where you may wish to add additional context to the items in your sort. Currently, the cards tab in OptimalSort allows you to include a tooltip description, a link within the tooltip description or to format the card as an image (with or without a label).

We generally don’t recommend using tooltip descriptions and links, unless you have a specific reason to do so. It’s likely that they’ll provide your participants with more information than they would normally have when navigating your website, which may in turn influence your results by leading participants to a particular solution.

Legitimate reasons that you may want to use descriptions and links include situations where it’s not possible or practical to translate complex or technical labels (for example, medical, financial, legal or scientific terms) into plain language, or if you’re using a card sort to understand your participants’ preferences or priorities.

If you do decide to include descriptions in your sort, it’s important that you follow the same guidelines that you would otherwise follow for writing card labels. They should be easy for your participants to understand and you should avoid obvious patterns, for example repeating words and phrases, or including details that refer to the current structure of the website.

A quick survey of how card descriptions are used in OptimalSort

I was curious to find out how often people were including descriptions in their card sorts, so I asked our development team to look into this data. It turns out that around 15% of cards created in OptimalSort have at least some text entered in the description field. In order to dig into the data a bit further, both Ania and I reviewed a random sample of recent sorts and noted how descriptions were being used in each case.

We found that out of the descriptions that we reviewed, 40% (6% of the total cards) had text that should not have impacted the sort results. Most often, these cards simply had the card label repeated in the description (to be honest, we’re not entirely sure why so many descriptions are being used this way! But it’s now in our roadmap to stop this from happening — stay tuned!). Approximately 20% (3% of the total cards) used descriptions to add context without obviously leading participants, however another 40% of cards have descriptions that may well lead to biased results. On occasion, this included linking to the current content or using what we assumed to be the current top level heading within the description.
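The percentages above are shares of two different totals, so it can help to see the conversion spelled out. The short Python sketch below takes the figures quoted in the article (15% of cards have a description; 40%, 20%, and 40% of reviewed descriptions fall into each group) and converts each group to a share of all cards. It assumes the reviewed sample is representative of all described cards, and the function name is purely illustrative.

```python
# Share of all OptimalSort cards with text in the description field (from the article).
DESCRIBED_SHARE = 0.15

# Share of the reviewed descriptions in each group (from the article).
review_breakdown = {
    "no likely impact on results": 0.40,
    "context without obvious leading": 0.20,
    "potentially leading": 0.40,
}


def share_of_all_cards(share_of_described, described_share=DESCRIBED_SHARE):
    """Convert a share of described cards into a share of all cards."""
    return share_of_described * described_share


for label, share in review_breakdown.items():
    print(f"{label}: {share_of_all_cards(share):.0%} of all cards")
```

Under that assumption, the first two groups reproduce the 6% and 3% quoted above, and the potentially leading group would also come to roughly 6% of all cards.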

Testing the effect of card descriptions on sort results

So, how much influence could potentially leading card descriptions have on the results of a card sort? I decided to put it to the test by running a series of card sorts to compare the effect of different descriptions. As I also wanted to test the effect of linking card descriptions to existing content, I had to base the sort on a live website. In addition, I wanted to make sure that the card labels and descriptions were easily comprehensible by a general audience, but not so familiar that participants were highly likely to sort the cards in a similar manner.

I selected the government immigration website New Zealand Now as my test case. This site, which provides information for prospective and new immigrants to New Zealand, fit the above criteria and was likely unfamiliar to potential participants.

Navigating the New Zealand Now website

When I reviewed the New Zealand Now site, I found that the top level navigation labels were clear and easy to understand for me personally. Of course, this is especially important when much of your target audience is likely to be non-native English speaking! On the whole, the second level headings were also well-labeled, which meant that they should translate to cards that participants were able to group relatively easily.

There were, however, a few headings such as “High quality” and “Life experiences”, both found under “Study in New Zealand”, which become less clear when removed from the context of their current location in the site structure. These headings would be particularly useful to include in the test sorts, as I predicted that participants would be more likely to rely on card descriptions in the cases where the card label was ambiguous.

I selected 30 headings to use as card labels from under the sections “Choose New Zealand”, “Move to New Zealand”, “Live in New Zealand”, “Work in New Zealand” and “Study in New Zealand” and tweaked the language slightly, so that the labels were more generic.

I then created four separate sorts in OptimalSort:

- Round 1: No description: Each card showed a heading only (this functioned as the control sort)

- Round 2: Site section in description: Each card showed a heading with the site section in the description

- Round 3: Short description: Each card showed a heading with a short description (these were taken from the New Zealand Now topic landing pages)

- Round 4: Link in description: Each card showed a heading with a link to the current content page on the New Zealand Now website

For each sort, I recruited 30 participants. Each participant could only take part in one of the sorts.

What the results showed

An interesting initial finding was that when we queried the participants following the sort, only around 40% said they noticed the tooltip descriptions and even fewer participants stated that they had used them as an aid to help complete the sort.

Participant recognition of descriptions

Of course, what people say they do does not always reflect what they do in practice! To measure the effect that different descriptions had on the results of this sort, I compared how frequently cards were sorted with other cards from their respective site sections across the different rounds.

Let’s take a look at the “Study in New Zealand” section that was mentioned above. Out of the five cards in this section, “Where & what to study”, “Everyday student life” and “After you graduate” were sorted pretty consistently, regardless of whether a description was provided or not. The following charts show the average frequency with which each card was sorted with other cards from this section. For example, in the control round, “Where & what to study” was sorted with “After you graduate” 76% of the time and with “Everyday student life” 70% of the time, but was sorted with “Life experiences” or “High quality” each only 10% of the time. This meant that the average sort frequency for this card was 42%.
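The average sort frequency in that example is a plain mean of the pairwise percentages. As a minimal Python sketch (the function and variable names are illustrative, and the figures are the control-round values quoted above for “Where & what to study”):

```python
def average_sort_frequency(pair_frequencies):
    """Mean of the percentages at which a card was grouped with each section sibling."""
    return sum(pair_frequencies.values()) / len(pair_frequencies)


# Control-round figures for "Where & what to study", as quoted in the article.
control_round = {
    "After you graduate": 76,
    "Everyday student life": 70,
    "Life experiences": 10,
    "High quality": 10,
}

avg = average_sort_frequency(control_round)
print(f"{avg:.1f}%")  # 41.5%, which the article reports as 42%
```

Repeating this calculation for each card in each round gives the values that can be compared across the four sorts.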


On the other hand, the cards “High quality” and “Life experiences” were sorted much less frequently with other cards in this section, with the exception of the second sort, which included the site section in the description.

These results suggest that including the existing site section in the card description did influence how participants sorted these cards, confirming our prediction! Interestingly, this round had the fewest participants who stated that they used the descriptions to help them complete the sort (only 10%, compared to 40% in round 3 and 20% in round 4).

Also of note is that adding a link to the existing content did not seem to increase the likelihood that cards were sorted more frequently with other cards from the same section. Reasons for this could include that participants did not want to navigate to another website (due to time-consciousness in completing the task, or concern that they’d lose their place in the sort) or simply that it can be difficult to open a link from the tooltip pop-up.

What we can take away from these results

This quick investigation into the impact of descriptions illustrates some of the intricacies around using additional context in your card sorts, and why this should always be done with careful consideration. It’s interesting that we correctly predicted some of these results, but that in this case, other uses of the description had little effect at all. And the results serve as a good reminder that participants can often be influenced by factors that they don’t even recognise themselves!

If you do decide to use card descriptions in your card sorts, here are some guidelines that we recommend you follow:

- Avoid repeating words and phrases, as participants may sort cards by pattern-matching rather than based on the actual content

- Avoid alluding to a predetermined structure, such as including references to the current site structure

- If it’s important that participants use the descriptions to complete the sort, you should mention this in your task instructions. It may also be worth asking them a post-survey question to validate if they used them or not

We’d love to hear your thoughts on how we tested the effects of card descriptions and the results that we got. Would you have done anything differently?

Have you ever completed a card sort only to realize later that you’d inadvertently biased your results? Or have you used descriptions in your card sorts to meet a genuine need? Do you think there’s a case to make descriptions more obvious than just a tooltip, so that when they are used legitimately, most participants don’t miss this information?

Let us know by leaving a comment!

Understanding UI design and its principles

Wireframes. Mockups. HTML. Fonts. Elements. Users. If you’re familiar with user interface design, these terms will be your bread and butter.

An integral part of any website or application, user interface design is also arguably one of the most important. This is because your design is what your users see and interact with. If your site or app functions poorly and looks terrible, that’s what your users are going to remember.

But aren’t UX design and UI design the same thing? Or is there just an extremely blurred line between the two? What’s involved with UI design and, more importantly, what makes good design?

What is UI design exactly?

If you’re wondering how to test UI on your website, it’s a good idea to first learn some of the differences between UX and UI design. Although UI design and UX design look similar when written down, they’re actually two totally separate things. However, they should most definitely complement each other.

UX design, according to Nielsen Norman Group, “encompasses all aspects of the end-user's interaction with the company, its services, and its products.” Meanwhile, UI design focuses more on a user’s interaction, the overall design, look and feel of a system. The two still sound similar, right?

For those of you still trying to wrap your head around the difference, Nielsen Norman Group has a great example up on its site that helps to explain it:

"As an example, consider a website with movie reviews. Even if the UI for finding a film is perfect, the UX will be poor for a user who wants information about a small independent release if the underlying database only contains movies from the major studios.”

This just goes to show the complementary relationship between the two and why it’s so important.

User interface design was popularized in the early 1970s, partly thanks to Fuji Xerox’s ‘Xerox Alto Workstation’, an early personal computer dubbed “the origin of the PC”. This machine used various icons, multiple windows, a mouse, and e-mail, which meant that some sort of design and design principles were needed to create consistency for the future. It was here that human-centred UI was born. UI design also covers graphical user interface design (GUI design). A GUI is the software or interface that works as the medium between a user and the computer.

It uses a number of graphical elements, such as screen cursors, menus, and icons so that users can easily navigate a system. This is also something that has stemmed from Fuji Xerox back in the late 1970s and early 1980s.

Since then, UI has developed quickly and so have its design principles. When the Xerox Alto Workstation was first created, Fuji Xerox came up with eight of its own design principles. These were:

- Metaphorically digitize the desk environment

- Operating on display instead of entering on keyboard

- What you see is what you get

- Universal but fewer commands

- Same operation for the same job at different places

- Operating computers as easily as possible

- No need to transfer to different jobs

- System customized as desired by users

Over time, these principles have evolved and now you’ll likely find many more added to this list. Here are just a few of the most important ones identified in “Characteristics of graphical and web user interfaces” by Wilbert Galitz.

UI design principles:

Principle #1: Clarity

Usability.gov says that the “best interfaces are almost invisible to the user”.

Everything in the system, from visual elements, functions, and text, needs to be clear and simple. This includes layout as well as the words used, so stay away from jargon and complex terms or analogies that users won’t understand.

Aesthetic appeal also fits into this principle. Ensure colors and graphics are used in a simple manner, and elements are grouped in a way that makes sense.

Principle #2: Consistency

The system should have the same or similar functions, uses and look throughout it for consistency. For example, the same color scheme should be used throughout an app, or the terminology on a website should be consistent throughout. Users should also have an idea of what to expect when they use your system. As an example, picture a retail shopping app. You’d expect that any other retail shopping app out there will have similar basic functions: a place to log in or create an account, account settings, a way to navigate and browse stock, a way to purchase stock at the press of a button. However, this doesn’t mean copying another app or website exactly; there should just be consistency so users know what to expect when they encounter your system.

Apple even states an “app should respect its users and avoid forcing them to learn new ways to do things for no other reason than to be different”.

Principle #3: Flexibility and customizability

Is there more than one way people can access your system and its functions? Can people perform tasks in a number of different ways, too?

Providing your users with a flexible system means people are more in control of what they’re doing. Galitz mentions this can also be done through allowing system customization.

Don’t forget use on other kinds of devices, too. In a time when Google is using mobile-friendliness as a ranking signal, and research from Ericsson shows smartphones accounted for 75% of all mobile phone sales in Q4 2015, you know that being flexible is important.

Examples of good UI design

For a list of some of the best user interface examples, check out last year’s Webby Awards category for Best Interface Design. The 2016 category winner was the Reuters TV Web App, while the People’s Choice winner was AssessYourRisk.org.

As an aside, this is the second year that the Webby Awards has included this category, which just goes to show how important it is to have good UI design!

While you don’t want your site or application to look exactly the same as these winners, you still want yours to function well and be aesthetically pleasing.

To help you get there, there are a number of UI design tools and UI software available. Here’s a list of some of the many out there:

- UXPin - An online UI design tool that allows you to create wireframes, mockups, and prototypes all on one platform.

- InVision - A prototyping and collaboration tool. More in-depth than Balsamiq, and it allows you to go from mockup to high-fidelity in minutes.

- Balsamiq - A simple mockups tool for wireframing, which allows users to test out ideas in the early stage of interface design.

- Atomic - An interface design tool that allows you to design in your browser and collaborate with others on your projects.

Have you got any favorite UI design examples, or tips for beautiful design? We’d love to see them — comment below and let us know!

Further reading

- “UI, UX: Who does what? A designer’s guide to the tech industry” - An article from Fastcodesign that provides a breakdown of some of the design roles in the tech industry.

- “7 rules for creating gorgeous UI (Part 1)” - A great guide from Erik D. Kennedy for creating good UI design, with some fantastic tips to help you get started.

- “10 usability heuristics for user interface design” - An article from Nielsen Norman Group’s Principal Jakob Nielsen explaining some of the key principles to keep in mind when designing a user interface.

A quick analysis of feedback collected with OptimalSort

Card sorting is an invaluable tool for understanding how people organize information in their minds, making websites more intuitive and content easier to navigate. It’s a useful method outside of information architecture and UX research, too. It can be a useful prioritization technique, or used in a more traditional sense. For example, it’s handy in psychology, sociology or anthropology to inform research and deepen our understanding of how people conceptualize information.

The introduction of remote card sorting has provided many advantages, making it easier than ever to conduct your own research. Tools such as our very own OptimalSort allow you to quickly and easily gather findings from a large number of participants from all around the world. Not having to organize moderated, face-to-face sessions gives researchers more time to focus on their work, and easier access to larger data sets.

One of the main disadvantages of remote card sorting is that it eliminates the opportunity to dive deeper into the choices made by your participants. Human conversation is a great thing, and when conducting a remote card sort with users who could potentially be on the other side of the world, opportunities for our participants to provide direct feedback and voice their opinions are severely limited.

Your survey design may not be perfect. The labels you provide your participants may be incorrect, confusing or redundant. Your users may have their own ideas of how you could improve your products or services beyond what you are trying to capture in your card sort. People may be more willing to provide their feedback than you realize, and limiting their insights to a simple card sort may not capture all that they have to offer.

So, how can you run an unmoderated, remote card sort, but do your best to mitigate this potential loss of insight?

A quick look into the data

In an effort to evaluate the usefulness of the existing “Leave a comment” feature in OptimalSort, I recently asked our development team to pull out some data.

You might be asking, “There’s a comment box in OptimalSort?” If you’ve never noticed this feature, I can’t exactly blame you. It’s relatively hidden away as an unassuming hyperlink in the top right corner of your card sort.

Comments left by your participants can be viewed in the “Participants” tab in your results section, and are indicated by a grey speech bubble.

The history of the button is unknown even to long-time Optimal Workshop team members. The purpose of the button is also unspecified. “Why would anyone leave a comment while participating in a card sort?”, I found myself wondering.

As it turns out, 133,303 comments have been left by participants. This means 133,303 insights, opinions, critiques or frustrations. Additionally, these numbers only represent the participants who noticed the feature in the first place. Considering the current button can easily be missed when focusing on the task at hand, I can’t help but wonder how this number might change if we drew more attention to the feature.

Breaking down the comments

To avoid having to manually analyze and code 133,303 open text fields, I decided to only spend enough time to decipher any obvious patterns. Luckily for me, this didn’t take very long. After looking at only a hundred or so random entries, four distinct types of comments started to emerge.

- This card/group doesn’t make sense. Comments related to cards and groups dominate. This is a great thing, as it means that the majority of comments made by participants relate specifically to the task they are completing. For closed and hybrid sorts, comments frequently relate to the predefined categories available, and since the participants most likely to leave a comment are those experiencing issues, the majority of the feedback relates to issues with category names themselves. Many comments are related to card labels and offer suggestions for improving naming conventions, while many others draw attention to some terms being confusing, unclear or jargony. Comments on task length can also be found, along with reasons for why certain cards may be left ungrouped, e.g., “I’ve left behind items I think the site could do without”.

- Your organization is awesome for doing this/you’re doing it all wrong. A substantial number of participants used the comment box as an opportunity to voice their general feedback on the organization or company running the study. Some of the more positive comments include an appreciation for seeing private companies or public sector organizations conducting research with real users in an effort to improve their services. It’s also nice to see many comments related to general enjoyment in completing the task. On the other hand, some participants used the comment box as an opportunity to comment on what other areas of their services should be improved, or what features they would like to see implemented that may otherwise be missed in a card sort, e.g., “Increased, accurate search functionality is imperative in a new system”.

- This isn’t working for me. Taking a closer look at some of the comments reveals some useful feedback for us at Optimal Workshop, too. Some of the comments relate specifically to UI and usability issues. The majority of these issues are things we are already working to improve or have dealt with. However, for researchers, comments that relate to challenges in using the tool or completing the survey itself may help explain some instances of data variability.

- #YOLO, hello, ;) And of course, the unrelated. As you may expect, when you provide people with the opportunity to leave a comment online, you can expect just about anything in return.
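If you want a rough first pass over a large export of comments before reading them by hand, the four comment types above could be approximated with simple keyword matching. The sketch below is only an illustration: the category names, the keyword lists and the `tag_comment` and `summarize` helpers are all assumptions made for this example, not part of OptimalSort, and a keyword match is no substitute for properly coding the responses.

```python
from collections import Counter

# Hypothetical keyword lists; adjust them after reading a sample of your own comments.
CATEGORY_KEYWORDS = {
    "cards_and_groups": ["card", "group", "category", "label", "confusing", "jargon"],
    "organization_feedback": ["great", "thank", "company", "service", "feature", "search"],
    "tool_issues": ["drag", "button", "browser", "slow", "not working", "stuck"],
}


def tag_comment(comment: str) -> str:
    """Return the first category whose keywords appear in the comment."""
    text = comment.lower()
    for category, keywords in CATEGORY_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return category
    # Anything that matches no keyword lands in the catch-all bucket.
    return "unrelated"


def summarize(comments: list[str]) -> Counter:
    """Count how many comments fall into each category."""
    return Counter(tag_comment(comment) for comment in comments)
```

Running `summarize` over your exported comments gives a quick count per type, which can help you decide which group of comments to read in full first.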

How to make the most of your user insights in OptimalSort

If you’re running a card sort, chances are you already place a lot of value in the voice of your users. To capture any additional insights, make sure your participants are aware of the opportunity to share them. Here are two ways to give your participants a space to voice their feedback:

Adding more context to the “Leave a comment” feature

One way to encourage your participants to leave comments is to promote the use of this feature in your card sort instructions. OptimalSort gives you flexibility to customize your instructions every time you run a survey. By making your participants aware of the feature, or offering ideas around what kinds of comments you may be looking for, you not only make them more likely to use the feature, but also open yourself up to a whole range of additional feedback. An advantage of using this feature is that comments can be added in real time during a card sort, so any remarks can be made as soon as they arise.

Making use of post-survey questions

Adding targeted post-survey questions is the best way to ensure your participants are able to voice any thoughts or concerns that emerged during the activity. Here, you can ask specific questions that touch upon different aspects of your card sort, such as length, labels, categories or any other comments your participants may have. This can not only help you generate useful insights but also inform the design of your surveys in the future.

Make your remote card sorts more human

Card sorts are exploratory by nature. Avoid forcing your participants into choices that may not accurately reflect their thinking by giving them the space to voice their opinions. Providing opportunities to capture feedback opens up the conversation between you and your users, and can lead to surprising insights from unexpected places.

Further reading

- "Moderated card sorts vs online card sorts — why you need both" - An article on our blog that explains some of the differences between these two methods and how to use both for great results.

- "The hidden benefits of remote research" - Kathleen Asjes writes for UX Booth and explains when to use remote research and some of its many benefits.

- "Remote research: real users, real time, real research" - A book by Nate Bolt and Tony Tulathimutte on how to design and conduct remote research studies.

A short guide to personas

The word “persona” has many meanings. Sometimes the term refers to a part that an actor plays, other times it can mean a famous person, or even a character in a fictional play or book. But in the field of UX, persona has its own special meaning.

Before you get started with creating personas of your own, learn what they are and the process to create one. We'll even let you in on a great, little tip — how to use Chalkmark to refine and validate your personas.

What is a persona?

In the UX field, a persona is created using research and observations of your users, which are analyzed and then depicted in the form of a person’s profile. This individual is completely fictional, but is created based on the research you’ve conducted into your own users. It’s a form of segmentation, which Angus Jenkinson noted in his article “Beyond Segmentation” is a “better intellectual and practical tool for dealing with the interaction between the concept of the ‘individual’ and the concept of ‘group’”.

Typical user personas include very specific information in order to paint an in-depth and memorable picture for the people using them (e.g., designers and marketers).

The user personas you create don’t just represent a single individual either; they’ll actually represent a whole group. This allows you to condense your users into just a few segments, while giving you a much smaller set of groups to target.

There are many benefits of using personas. Here are just a few:

- You can understand your clients better by seeing their pain points, what they want, and what they need

- You can narrow your focus to a small number of groups that matter, rather than trying to design for everybody

- They’re useful for other teams too, from product management to design and marketing

- They can help you clarify your business or brand

- They can help you create a language for your brand

- You can market your products in a better, more targeted way

How do I create a persona?

There’s no right or wrong way to create a persona; the way you make them can depend on many things, such as your own internal resources, and the type of persona you want.

The average persona that you’ve probably seen before in textbooks, online or in templates isn’t always the best kind to use (picture the common and overused types like ‘Busy Barry’). In fact, the way user personas are constructed is a highly debated topic in the UX industry.

Creating good user personas

Good user personas are meaningful descriptions — not just a list of demographics and a fake name that allows researchers to simply make assumptions.

Indi Young, an independent consultant and founder of Adaptive Path, is an advocate of creating personas that aren’t just a list of demographics. In an article she penned on medium.com, Indi states: “To actually bring a description to life, to actually develop empathy, you need the deeper, underlying reasoning behind the preferences and statements-of-fact. You need the reasoning, reactions, and guiding principles.”

One issue that can stem from traditional types of personas is they can be based on stereotypes, or even reinforce them. Things like gender, age, ethnicity, culture, and location can all play a part in doing this.

In a study by Phil Turner and Susan Turner titled “Is stereotyping inevitable when designing with personas?” the authors noted: “Stereotyped user representations appear to constrain both design and use in many aspects of everyday life, and those who advocate universal design recognise that stereotyping is an obstacle to achieving design for all.”

So it makes sense to scrap the stereotypes and, in many instances, irrelevant demographic data. Instead, include information that accurately describes the persona’s struggles, goals, thoughts and feelings — all bits of meaningful data.

Creating user personas involves a lot of research and analyzing. Here are a few tips to get you started:

1) Do your research

When you’re creating personas for UX, it’s absolutely crucial you start with research; after all, you can’t just pull this information out of thin air by making assumptions! Ensure you use a mixture of both qualitative and quantitative research here in order to cast your net wide and get results that are really valuable. A great research method that falls into the realms of both qualitative and quantitative is user interviews.

When you conduct your interviews, drill down into the types of behaviors, attitudes and goals your users have. It’s also important to mention that you can’t just examine what your users are saying to you — you need to tap into what they’re thinking and how they behave too.

2) Analyze and organize your data into segments

Once you’ve conducted your research, it’s time to analyze it. Look for trends in your results — can you see any similarities among your participants? Can you begin to group some of your participants together based on shared goals, attitudes and behaviors?

After you have sorted your participants into groups, you can create your segments. These segments will become your draft personas. Try to limit the number of personas you create. Having too many can defeat the purpose of creating them in the first place.

Don’t forget the little things! Give your personas a memorable title or name and maybe even assign an image or photo — it all helps to create a “real” person that your team can focus on and remember.
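As a rough illustration of the grouping step in this section, participants who share the same goals and behaviors can be collected into draft segments. The sketch below assumes each interview has already been summarized into a small record; the field names (`goal`, `behavior`), the sample participants and the `group_into_segments` helper are all made up for this example.

```python
from collections import defaultdict

# Made-up interview summaries, for illustration only.
participants = [
    {"id": "P1", "goal": "save time", "behavior": "shops on mobile"},
    {"id": "P2", "goal": "save time", "behavior": "shops on mobile"},
    {"id": "P3", "goal": "compare prices", "behavior": "researches on desktop"},
]


def group_into_segments(records, keys=("goal", "behavior")):
    """Group participant ids by the values they share for the given keys."""
    segments = defaultdict(list)
    for record in records:
        # Participants with identical values for every key land in the same segment.
        segment_key = tuple(record[key] for key in keys)
        segments[segment_key].append(record["id"])
    return dict(segments)


draft_segments = group_into_segments(participants)
```

Each key in `draft_segments` is a candidate draft persona. Real interview data is rarely this tidy, so treat exact matching as a starting point and merge similar groups by hand to keep the number of personas small.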

3) Review and test

After you’ve finalized your personas, it’s time to review them. Take another look at the responses you received from your initial user interviews and see if they match the personas you created. It’s also important you spend some time reviewing your finalized personas to see if any of them are too similar or overlap with one another. If they do, you might want to jump back a step and segment your data again.

This is also a great time to test your personas. Conduct another set of user interviews and research to validate your personas.

User persona templates and examples

Creating your personas using data from your user interviews can be a fun task — but make sure you don’t go too crazy. Your personas need to be relevant, not overly complex and a true representation of your users.

A great way to ensure your personas don’t get too out of hand is to use a template. There are many of these available online in a number of different formats and of varying quality.

This example from UX Lady contains a number of helpful bits of information you should include, such as user experience goals, tech expertise and the types of devices used. The accompanying article also provides a fair bit of guidance on how to fill in your templates. While this template is good, skip the demographics portion and read Indi Young’s article and books for better quality persona creation.

Using Chalkmark to refine personas

Now it’s time to let you in on a little tip. Did you know Chalkmark can be used to refine and validate your personas?

One of the trickiest parts of creating personas is actually figuring out which ones are a true representation of your users — so this usually means lots of testing and refining to ensure you’re on the right track. Fortunately, Chalkmark makes the refinement and validation part pretty easy.

First, you need to have your personas finalized or at least drafted. Take your results from your persona software or template you filled in. Create a survey for each segment so that you can see if your participants’ perceptions of themselves match each of your personas.

Second, create your test. This is a pretty simple demo we made when we were testing our own personas a few years ago at Optimal Workshop. Keep in mind this was a while ago and not a true representation of our current personas — they’ve definitely changed over time! During this step, it’s also quite helpful to include some post-test questions to drill down into your participants’ profiles.

After that, send these tests out to your identified segments (e.g., if you had a retail clothing store, some of your segments might be women of a certain age, and men of a certain age. Each segment would receive its own test). Our test involved three segments: “the aware”, “the informed”, and “the experienced” — again, this has changed over time and you’ll find your personas will change too.

Finally, analyze the results. If you created separate tests for each segment, you will now have filtered data for each segment. This is the real meaty information you use to validate each persona. For example, our three persona tests all contained the questions: “What’s your experience with user research?” and “How much of your job description relates directly to user experience work?”

Above, you’ll see the results for Persona #2. This tells us that 34% of respondents identified that their job involves a lot of UX work (75-100%, in fact). In addition, 31% of this segment considered themselves “Confident” with remote user research, while a further 9% and 6% of this segment said they were “Experienced” and “Expert”.

These results all aligned with the persona we associated with that segment: “the informed”.

When you’re running your own tests, you’ll analyze the data in a very similar way. If the results from each of your segments’ Chalkmark tests don’t match up with the personas you created, it’s likely you need to adjust your personas. However, if each segment’s results happen to match up with your personas (like our example above), consider them validated!
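If you export the post-test answers for a segment, the comparison described above comes down to working out what share of the segment chose each answer and checking whether the answers you expect for a persona add up to a meaningful share. The sketch below is one hedged way to do that; the `answer_shares` and `matches_persona` helpers, the sample answers and the 40% threshold are all assumptions for illustration, not a Chalkmark feature or a recommended cut-off.

```python
from collections import Counter


def answer_shares(responses):
    """Return the percentage of respondents who chose each answer."""
    if not responses:
        return {}
    counts = Counter(responses)
    total = len(responses)
    return {answer: round(100 * count / total) for answer, count in counts.items()}


def matches_persona(shares, expected_answers, threshold=40):
    """Check whether the answers expected for a persona reach the threshold.

    The threshold is an arbitrary value chosen for this example.
    """
    combined = sum(shares.get(answer, 0) for answer in expected_answers)
    return combined >= threshold


# Made-up answers from one segment, for illustration only.
segment_answers = ["Confident", "Confident", "Novice", "Expert"]
shares = answer_shares(segment_answers)
aligned = matches_persona(shares, ["Confident", "Experienced", "Expert"])
```

If `aligned` comes back false for a segment, that is a prompt to revisit the persona, as described above, rather than a verdict on its own.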

For a bit more info on our very own Chalkmark persona test, check out this article.

Further reading

- "From personas to user stories" - an article by Roman Pichler on how to discover the right stories and integrate them into a sprint.

- "Personas make users memorable for product team members" - Aurora Bedford looks at how personas support user-centered design throughout a project’s lifecycle.

- "Developing successful personas" - Luke Wroblewski presents notes from a talk by Tamara Adlin who outlines a set of practical ways to align stakeholders, developers, and designers to use personas.

- "Describing personas" - Indi Young looks at some of the problems in accurately describing personas.
