Optimal Blog

Articles and Podcasts on Customer Service, AI and Automation, Product, and more

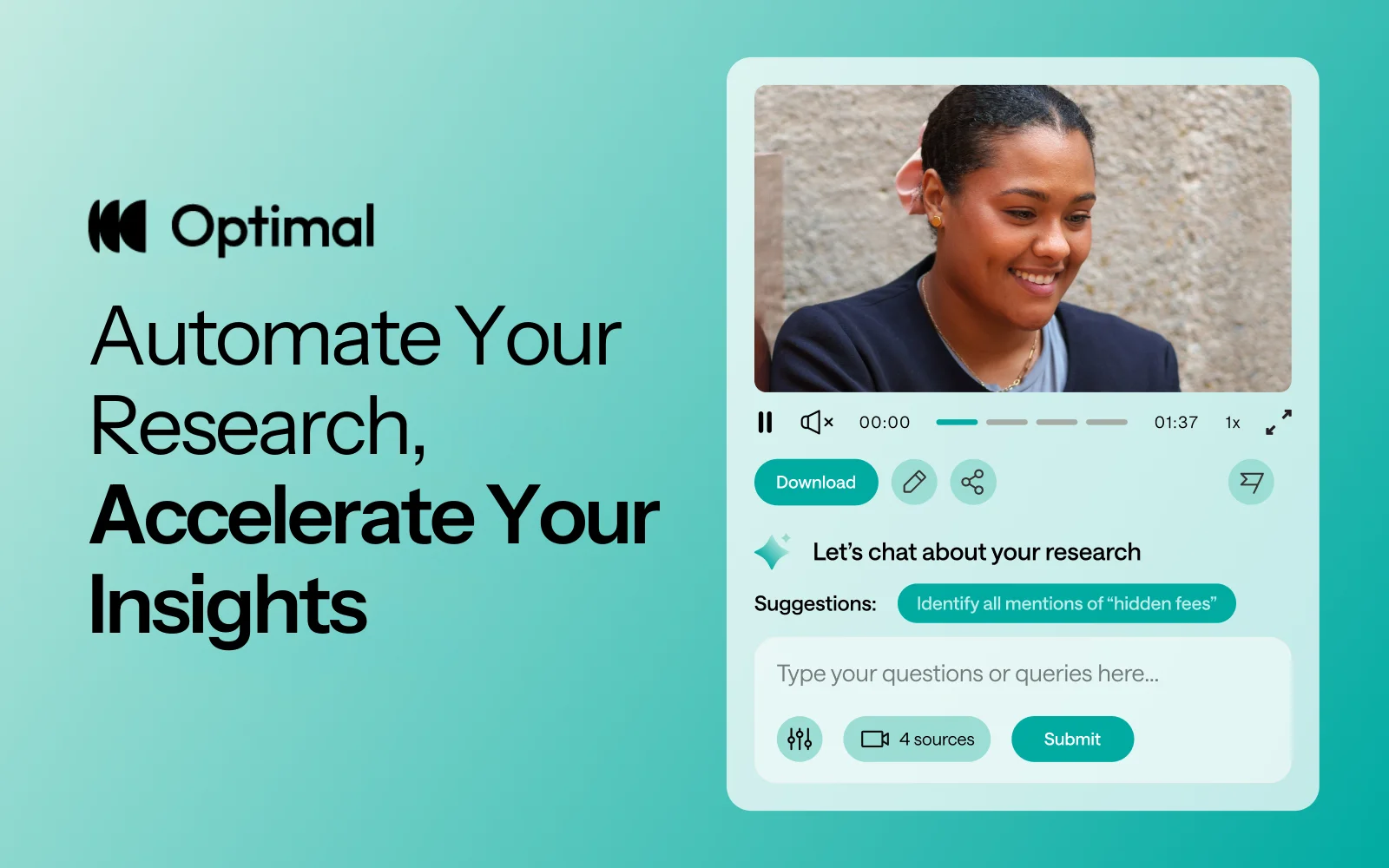

At Optimal, we know the reality of user research: you've just wrapped up a fantastic interview session, your head is buzzing with insights, and then... you're staring at hours of video footage that somehow needs to become actionable recommendations for your team.

User interviews and usability sessions are treasure troves of insight, but reviewing hours of raw footage is time-consuming and tedious, and it's easy to overlook important details. Too often, valuable user stories never make it past the recording stage.

That's why we’re excited to announce the launch of Interviews, a brand-new tool that saves you time with AI and automation, turns real user moments into actionable recommendations, and provides the evidence you need to shape decisions, bring stakeholders on board, and inspire action.

Interviews, Reimagined

We surveyed more than 100 researchers, designers, and product managers, conducted discovery interviews, tested prototypes, and ran feedback sessions to help guide the discovery and development of Optimal Interviews.

The result? What once took hours of video review now takes minutes. With Interviews, you get:

- Instant clarity: Upload your interviews and let AI automatically surface key themes, pain points, opportunities, and other key insights.

- Deeper exploration: Ask follow-up questions, or anything else, in AI chat. Every insight comes with supporting video evidence, so you can back up recommendations with real user feedback.

- Automatic highlight reels: Generate clips and compilations that spotlight the takeaways that matter.

- Real user voices: Turn insight into impact with user feedback clips and videos. Share insights and download clips to drive product and stakeholder decisions.

Groundbreaking AI at Your Service

This tool is powered by AI designed for researchers, product owners, and designers. This isn’t just transcription or summarization; it’s intelligence tailored to surface the insights that matter most. It’s like having a personal AI research assistant, accelerating analysis and automating your workflow without compromising quality. No more endless footage scrolling.

The AI behind Interviews, like all other AI in Optimal, is backed by Amazon Bedrock on AWS, so your AI insights are supported by industry-leading protection and compliance.

Evolving Optimal Interviews

A big thank you to our early access users! Your feedback helped us focus on making Optimal Interviews even better. Here's what's new:

- Speed and easy access to insights: More video clips, instant download, and bookmark options to make sharing findings faster than ever.

- Privacy: Disable video playback while still extracting insights from transcripts, and get PII redaction for English audio alongside transcripts and insights.

- Trust: Our enhanced, best-in-class AI chat experience lets teams explore patterns and themes confidently.

- Expanded study capability: You can now upload up to 20 videos per Interviews study.

What’s Next: The Future of Moderated Interviews in Optimal

This new tool is just the beginning. Our vision is to help you manage the entire moderated interview process inside Optimal, from recruitment to scheduling to analysis and sharing.

Here’s what’s coming:

- View your scheduled sessions directly within Optimal. Link up with your own calendar.

- Connect seamlessly with Zoom, Google Meet, or Teams.

Imagine running your full end-to-end interview workflow, all in one platform. That’s where we’re heading, and Interviews is our first step.

Ready to Explore?

Interviews is available now for our latest Optimal plans with study limits. Start transforming hours of footage into minutes of clarity and bring your users’ voices to the center of every decision. We can’t wait to see what you uncover.


Lunch n' Learn: Writing for, talking to, and designing with vulnerable users

Every month we have fun and informative “bite sized” presentations to add some inspiration to your lunch break. These virtual events allow us to partner with amazing speakers, community groups and organizations to share their insights and hot takes on a variety of topics impacting our industry.

Join us at the end of every month for Lunch n' Learn.

Ally Tutkaluk

A multi-faceted approach is key when creating digital products for users who may be in a vulnerable, sensitive, or distressed state. Adopting an approach to copy, design, and testing that considers the unique needs of your main user group not only enhances their experiences, but improves the product for everyone.

From user interviews, to copywriting, to IA decisions, to testing - Ally will cover tools and tips for how you can ensure vulnerable users’ needs are considered at every stage of the digital design process.

Speaker Bio

Ally has worked in digital experience in the higher education, FMCG, and not-for-profit industries for over 14 years, most recently at Australian healthcare charity Lives Lived Well. She’s passionate about working with users to create data-driven, meaningful and valuable digital content and navigation pathways. She lives in Brisbane and also teaches Design Thinking at the Queensland University of Technology.

Grab your lunch, invite your colleagues and we hope to see you at our next Lunch n’ Learn 🌮🍕🥪

Lunch n' Learn: Integrating Self-Leadership & Well-being into Our Design Practice

Every month we have fun and informative “bite sized” presentations to add some inspiration to your lunch break. These virtual events allow us to partner with amazing speakers, community groups and organizations to share their insights and hot takes on a variety of topics impacting our industry.

Join us at the end of every month for Lunch n' Learn.

Susanna Carman

The world is growing increasingly volatile and uncertain. Design practitioners working during these times are tasked with bringing skills and professional expertise to help solve complex customer and internal-facing challenges. However, many of us are operating in professional contexts that are resistant to change, struggle to understand what we do, or are unable to fully embrace the value we have to offer. Trying to do ‘good’ work in these conditions can be isolating, frustrating and anxiety producing. In order to sustain our capacity for impact, now is the time to invest in integrating our own well-being into our design practice.

Design Leadership & Learning specialist, Susanna Carman, returns to offer 60 minutes of sanctuary for those who would like to explore the questions:

- What does it take for us and our practice to BE well?

- What’s the relationship between well-being, self-leadership & impact?

Susanna will present and share restorative practices that deepen understanding, enhance capacity for self-care, and reframe the quality of impact we can have with others in our professional roles.

Speaker Bio

Susanna Carman is a Strategic Designer and research-practitioner who helps people solve complex problems, the types of problems that have to do with services, systems and human interactions. Specializing in design, leadership and learning, Susanna brings a high value toolkit and herself as Thinking Partner to design leadership and change practitioners who are tasked with delivering sustainable solutions amidst disruptive conditions.

Susanna holds a Masters of Design Futures degree from RMIT University, and has over a decade of combined experience delivering business performance, cultural alignment and leadership development outcomes to the education, health, community development and financial services sectors. She is also the founder and host of Transition Leadership Lab, a 9-week learning lab for design, leadership and change practitioners who already have a sophisticated set of tools and mindsets, but still feel these are insufficient to meet the challenge of leading change in a rapidly transforming world.

Grab your lunch, invite your colleagues and we hope to see you at our next Lunch n' Learn 🌮🍕🥪

Ruth Brown: When expertise becomes our Achilles heel

We all want to be experts in what we do. We train, we practice, and we keep learning. We even do 10,000 hours of something believing it will make us an expert.

But what if our ‘expertise’ actually comes with some downfalls? What if experts can be less creative and innovative than their less experienced counterparts? What if they lack flexibility and are more prone to error?

Ruth Brown, freelance Design Lead, recently spoke at UX New Zealand, the leading UX and IA conference in New Zealand hosted by Optimal Workshop, on how experts can cover their blind spots.

In her talk, Ruth discusses the paradox of expertise, how it shows up in design (and especially design research), and most importantly - what we can do about it.

Background on Ruth Brown

Ruth is a freelance researcher and design leader. She currently works in the design team at ANZ. In the past, she has been GM of Design Research at Xero and Head of User Experience at Trade Me.

Ruth loves people. She has spent much of her career understanding how people think, feel, and behave. She cares a lot about making things that make people’s lives better. Her first love was engineering until she realized that people were more interesting than maths. On a good day, she gets to do both.

Contact Details:

Email address: ruthbrownnz@gmail.com

LinkedIn URL: https://www.linkedin.com/in/ruth-brown-309a872/

When expertise becomes our Achilles heel 🦶🏼🗡

Ruth is an avid traveler and travel planner. She is incredibly organized but recounts a time when she made a mistake on her father’s travel documents. The error (an incorrect middle name on a plane ticket) cost her up to $1,000 and, perhaps worse, dealt a severe knock to her confidence as a self-styled travel agent!

What caused the mistake? Ruth, after recovering from the error, realized that she had auto-filled her father’s middle name field with her own, voiding the ticket. She had been the victim of two common ways that people with expertise fail:

- Trusting their tools too much

- Being over-confident – not needing to check their work

This combination is known as the Paradox of Expertise.

The Paradox of Expertise 👁️⃤

While experts are great at many things and we rely on them every day, they do have weaknesses. Ruth argues that the more we know about our weaknesses, the more we can avoid them.

Ruth touches on what’s happening inside the brain of experts, and what’s happening outside the brain (social).

- Inside influence (processing): As the brain develops and we gain experiences, it starts to organize information better. It creates schemas, which makes accessing and retrieving that information much easier and more automatic. Essentially, the more we repeat something, the more efficient the brain becomes in processing information. While this sounds like a good thing, it starts to become a burden when completely new information/ideas enter the brain. The brain struggles to order new information differently which means we default to what we know best, which isn’t necessarily the best at all.

- Outside influence (social): The Authority Bias is where experts are more likely to be believed than non-experts. Combined with this, experts usually have high confidence in what they’re talking about and can call upon neatly organized data to strengthen their argument. As a result, experts are continually reinforced with a sense of being right.

How does the Paradox of Expertise work against us? 🤨

In her talk, Ruth focuses on the three things that have the most impact on design and research experts.

1) Experts are bad at predicting the future

Hundreds of studies support the claim that experts are bad at predicting the future. One study by Philip Tetlock tested 284 experts (across multiple fields) and 27,450 predictions. It was found that after 20 years, the experts did “little better than dart-throwing chimpanzees”.

As designers and researchers, one of the most difficult things we get asked is to predict the future. We get asked questions like: Will people use this digital product? How will people use it? How much will they pay?

Since experts are so bad at predicting the future, how can we reduce the damage?

- Fix #1: Generalize: The Tetlock study found that people with a broader knowledge of a subject were much better predictors. Traits of good predictors included “knowing many small things”, “being skeptical of grand schemes”, and “stitching together diverse sources of information”.

- Fix #2: Form interdisciplinary teams: This is fairly common practice now, so we can take it further. Researchers should consider asking wider teams for recommendations when responding to research results. Rather than independently making recommendations based on your own narrow lens, bring in the wider team.

2) Experts make worse teachers

Despite knowing the principles, theory, and practice of their fields, experts are usually fairly average teachers. This is because experts tend to think in abstractions and concepts that have been built up over thousands of hours of experience. This leads experts to skip the explanation of foundational steps, i.e. explaining why the concepts themselves are important.

- Fix #3: Be bad at something: There’s nothing like stepping into the shoes of a rookie to have empathy for their experience. Doing so helps us to take stock of the job or task that we’re doing.

- Fix #4: It takes a team: Sometimes we need to realize that there are other people better suited to some tasks e.g. teaching! As an expert, the responsibility doesn’t have to fall to you to pass on knowledge – there are others who know enough to do it in place of you, and that’s okay. In fact, it may be better to have the entire village teach a junior, rather than one elder.

3) Experts are less innovative and open-minded

Ruth highlights the fact that experts find it hard to process new information, especially new information that challenges closely held beliefs or experiences. It is difficult to throw away existing arguments (or schemas) in favor of new, seemingly untested arguments.

- Fix #5: Stay curious: It’s easier said than done, but stay open to new arguments, information, and schemas. Remember to let your ego step down – don’t dig your heels in.

Why it matters 🤷

Understanding the Paradox of Expertise can help designers and researchers become more effective in their roles and avoid common pitfalls that hinder their work.

Ruth's insights into the inner workings of experts' brains shed light on the cognitive processes that can work against us. The development of schemas and the efficiency of information processing, while beneficial, can also lead to cognitive biases and resistance to new information. This insight reminds UX professionals to remain open-minded and adaptable when tackling design and research challenges.

The three key points Ruth emphasizes - the inability of experts to predict the future accurately, their challenges as teachers, and their resistance to innovation - have direct implications for the UX field. UX designers often face the daunting task of predicting user behavior and needs, and recognizing the limitations of expertise in this regard is crucial. Furthermore, the importance of embracing interdisciplinary teams and seeking diverse perspectives is underscored as a means to mitigate the shortcomings of expertise. Collaboration and humility in acknowledging that others may be better suited to certain tasks can lead to more well-rounded and innovative solutions.

Finally, Ruth's call to stay curious and open-minded is particularly relevant to UX professionals. In a rapidly evolving field, the ability to adapt to new information and perspectives is critical. By recognizing that expertise is not a fixed state but an ongoing practice, designers and researchers can continuously improve their work and deliver better user experiences.

What is UX New Zealand?

UX New Zealand is a leading UX and IA conference hosted by Optimal Workshop that brings together industry professionals for three days of thought leadership, meaningful networking and immersive workshops.

At UX New Zealand 2023, we featured some of the best and brightest in the fields of user experience, research and design. A raft of local and international speakers touched on the most important aspects of UX in today’s climate for service designers, marketers, UX writers and user researchers.

These speakers are some of the pioneers leading the way and pushing the standard for user experience today. Their experience and perspectives are invaluable for those working at the coalface of UX, and together, there’s a tonne of valuable insight on offer.

Lunch n' Learn: Using categorisation systems to choose your best IA approach

Every month we have fun and informative “bite sized” presentations to add some inspiration to your lunch break. These virtual events allow us to partner with amazing speakers, community groups and organizations to share their insights and hot takes on a variety of topics impacting our industry.

Join us at the end of every month for Lunch n' Learn.

Elle Geraghty

We all know that testing your IA is essential to create a functioning user experience.

But prior to testing, what is the best way to decide between multiple possible IA approaches?

For Elle Geraghty, the answer is a thorough understanding of potential categorisation options that can be matched to your business and user needs.

Speaker Bio

Elle Geraghty is a consultant content strategist from Sydney, Australia who specialises in large website redesign.

Over the past 15 years, she has worked with Qantas, Atlassian, the Australian Museum, Virgin Mobile Australia and all levels of the Australian government.

Elle also teaches and coaches content strategy and information architecture, and through all this work she has developed a great IA categorisation system which she will share with you today.


Ebony Kenney: “Ain’t None of Y’all Safe!”: Achieving Social Justice in digital spaces

Pressure on budgets, deadlines, and constantly shifting goalposts can mean that our projects sometimes become familiar, impersonal, and “blah”. How can we evolve from our obsession with status quo content engagement and instead, using our raised awareness, help to usher culture and society’s changing demands into our digital products?

Ebony Kenney, UX Analyst at Ripefruit Creative, Ripefruit Foundation, and a Federal Government Agency, recently spoke at UX New Zealand, the leading UX and IA conference in New Zealand hosted by Optimal Workshop, on how social justice can permeate our work as Usability professionals.

In her talk, Ebony takes us on a journey beyond the status quo to deliver digital products that are equitable and champion social justice.

Background on Ebony Kenney

Ebony L. Kenney is a Graphic Design, Market Research, and Usability veteran, excelling at the art of inquiry. She is said to be a “clear wifi signal” with her finger on the pulse, and insights to elevate any conversation. She draws from a scientific approach which lends itself to facilitating discussions that inspire thought and action. She holds a BA in English and MA in Design. She has most recently served as a User Experience Product Lead for applications, and Data Analyst for workforce morale efforts at a federal agency.

She is also the founder of Ripefruit Foundation, a non-profit effort dedicated to identifying and strengthening peripheral skills as they appear along the spectrum of neurodiversity, and Ripefruit Creative, a design agency dedicated to the realm of education and equity.

Contact Details:

Email address: ekenney11@gmail.com

LinkedIn URL: https://www.linkedin.com/in/ebonylkenney/

“Ain’t None of Y’all Safe!”: Achieving Social Justice in digital spaces 🌱

Ebony’s talk explores how Usability professionals often focus too much on the details, for example, the elements of a page, or a specific user path. As a result, it’s easy to forget the bigger picture - the potential for the project to make a difference, to be welcoming, and to be an accessible experience for a diverse set of users. Additionally, constraints on timelines, uninformed or unwilling product owners, and the endless loop of shifting requirements distract us from even a hint of a higher purpose. Throughout our projects, things that are important, like designing a nurturing, protective, and supportive environment that encourages engagement, start to get deprioritized.

Ebony challenges us to work in ways that ensure we don’t leave behind equity and social justice in our digital products.

Introducing a Safe Third Space ⚠️

Her talk introduces the concept of "third space," which is a hybrid space that combines different realities. Combining the concepts of “safe space” and “third space”, she arrives at the “safe third space”, which has embedded social justice and is the pinnacle of product design.

- Third Space: A hybrid space with concentric, adjacent, and overlapping realities. Third spaces can be geographical (e.g. a coffee shop with a bank attached), cultural (e.g. finding a connection between immigrants and first generations), and virtual (e.g. online experiences and social media).

- Safe Space: A space where a person can be honest and there are no consequences or perks.

- Safe Third Space: A space or environment online where people feel safe and can be themselves. Going beyond tolerance to actually making people feel like they belong.

What is Social Justice? ⚖️

Ebony asks us to think about social justice as rungs on a ladder, starting with “reality” and finishing on “justice” as we climb the ladder.

- Reality: Some get less than what’s needed, while others get more. Waste and disparity are created.

- Empathy: Once you’re in touch with pain points, compassion is sparked for another human’s condition.

- Equality: The assumption is that everyone benefits from the same support. This is considered to be “equal treatment”.

- Equity: Everyone gets the support they need, which produces equity.

- Justice: The cause(s) of the inequity have been addressed. The barriers have been removed or work to remove them has begun.

When discussing equity, Ebony highlights the difference between need-based and strength-based equity. Need-based equity identifies everyone's needs, while strength-based equity goes further by identifying the different strengths of the people involved.

Essentially, everyone has a unique lens through which they view and experience the world. Teams and organizations should value their employees' unique lenses and should encourage employees to feel comfortable speaking their minds. Additionally, teams and organizations should nurture safe spaces so that these views can be shared and therefore add value to a project or product achieving equity and social justice.

Why it matters 💥

When we think about our users, we should climb the social justice ladder and think about people on the “fringes” of our user base. In other words, don’t just cater to the most valuable user, or the most engaged user, as often happens when we start delivering projects. We should challenge status quo processes and assumptions in an attempt to better reflect society in our digital products.

Bringing social justice into the UX design process can be done by marrying the basic UX design process with the social justice ladder.

What does implementing Social Justice look like in practice? 👀

Ebony suggests a few ways that UX professionals can adopt social justice practices in our day-to-day work. These practices help to foster diverse thinking within project teams, which in turn helps us to get closer to achieving equity and social justice when designing digital products.

Social Justice on an Agile Team

- Call your own meetings with just the people necessary – don’t be afraid to coordinate meetings outside of the scrum master’s schedule.

- Recommend, don’t suggest – It’s a subtle difference, but “I recommend…” statements emphasize your own unique viewpoint.

- Use data to back up recommendations - if you don’t have it, find or generate it.

- Set the UX/CX commitment before the ceremonies begin.

- Choose your battles – don’t fight your product owner on every single thing.

Social Justice on the Screen

- Run completely through each user path (From Google, not URL). This helps us to catch blind spots.

- Make personas that make sense - ensure they can be implemented.

- Capture risk and look for trends in decision-making that could have business implications e.g. if you’re trying to convince your product owner to change something, it’s important to align it to the business goals or project vision.

- Look for bias in language and placement - share from your unique viewpoint.

- Watch an internet novice navigate your screen.

Be yourself, but develop yourself

- What is your strong point as a UX person?

- Are you codeswitching for safety reasons or expediency?

- Are you allowing “different”?

- Work on your question muscle – try to ask questions that build a foundation of understanding before making wild guesses or assumptions.

- Avoid burnout

In these ways, organizations and teams can keep social justice front and center when designing a digital product, rather than letting it slip by the wayside. If you can create safe spaces for your team to thrive and share unique points of view (or at least look for them), you are much more likely to design products that nurture engagement, create a welcoming environment, and ultimately meet the needs of diverse user groups.

Kate Keep and Brad Millen: How the relationship between Product Owners and Designers can impact human-centered design

Working in a multi-disciplined product team can be daunting, but how can those relationships be built, and what does that mean for your team, your stakeholders, and the users of the product?

Kate Keep, Product Owner, and Brad Millen, UX Designer, both work in the Digital team at the Accident Compensation Corporation (ACC). They recently spoke at UX New Zealand, the leading UX and IA conference in New Zealand hosted by Optimal Workshop, about their experience working on a large project within an organization that was new to continuous improvement and digital product delivery.

In their talk, Kate and Brad discuss how they were able to pull a team together around a common vision, and three key principles they found useful along the way.

Background on Kate

Kate is a Product Owner working in the Digital team at ACC, and her team currently look after ACC’s Injury Prevention websites. Kate is also a Photographer, which keeps her eye for detail sharp and her passion for excellence alive. She comes from a Contact Centre background which drives her dedication to continuously search for the optimal customer experience. Kate and the team are passionate about accessibility and building websites that are inclusive for all of Aotearoa.

Contact Details:

Email address: kate.keep@acc.co.nz

LinkedIn URL: Not provided

Background on Brad

Brad is a Digital UX Designer in the Digital team at ACC. Before launching into the world of UX, Brad studied game design which sparked his interest in the way people interact, engage and perceive products. This helped to inform his ethos that you’re always designing with others in mind.

How the relationship between Product Owners and Designers can impact human-centered design 👩🏻💻📓✍🏻💡

Brad and Kate preface their talk by acknowledging that they were both new to their roles and came from different career backgrounds when this project began, which presented a significant challenge. Kate was a Product Owner with no previous delivery experience, while Brad was a UX designer. To overcome these challenges, they needed to quickly figure out how to work together effectively.

Their talk focuses on three key principles that they believe are essential when building a digital product in a large, multi-disciplined team.

Building Trust-Based Relationships 🤝🏻

The first principle emphasizes the importance of building trust-based relationships. They highlight the need to understand each other's perspectives and work together towards a common vision for the customer. This can only be achieved by building a strong sense of trust with everyone on the team. They stress the value of open and honest communication - both within the team and with stakeholders.

Kate, as Product Owner, identified her role as being one of “setting the vision and getting the hell out of the way”. In this way, she avoided putting Brad and his team of designers in a state of paralysis by critiquing decisions all of the time. Additionally, she was clear from the outset with Brad that she needed “ruthless honesty” in order to build a strong relationship.

Cultivating Psychological Safety and a Flat Hierarchy 🧠

The second principle revolves around creating an environment of psychological safety. Kate explains that team members should feel comfortable challenging the status quo and working through disagreements without fear of ridicule. This type of safety improves communication and fast-tracks the project by allowing the team to raise issues without feeling they need to hide and wait for something to break.

They also advocate for a flat hierarchy where everyone has an equal say in decision-making. This approach empowers team members and encourages autonomy. It also means that decisions don’t need to wait for meetings, where juniors are scheduled to report issues or progress to seniors. Instead, all team members should feel comfortable walking up to a manager and, having built a relationship with them, flag what’s on their mind without having to wait.

This combination of psychological safety and flat hierarchy, coupled with building trust, means that the team dynamic is efficient and productive.

Continuous Focus on the Customer Voice 🔊

The third principle centers on keeping the customer's voice at the forefront of the product development process. Brad and Kate recommend regularly surfacing customer feedback and involving the entire team in understanding customer needs and goals. They also highlight the importance of making customer feedback tangible and visible to all team members and stakeholders.

Why it matters 💡

Kate and Brad’s talk sets a firm foundation for building positive and efficient team dynamics. The principles that they discuss champion empowerment and autonomy, which ultimately help multi-disciplined teams to gel when developing digital products. In practice, these principles set the stage for several key advantages.

They stress that building trust is key, not only for the immediate project team but for organizational stakeholders too. It’s just as crucial for the success of the product that all key stakeholders buy into the same way of thinking, i.e. trusting the expertise of the product design and development teams. Kate stresses that sometimes Product Owners need to absorb stakeholder pressure and take failures on the chin so that they can let design teams do what they do best.

That being said, Kate also realizes that sometimes difficult decisions need to be made when disagreements arise within the project team. This is when the value of building trust works both ways. In other words, Kate, as Product Owner, needed to make decisions in the best interest of the team to keep the project moving.

Psychological safety, in practice, means leading by example and providing a safe environment for people to be honest and feel comfortable enough to speak up when necessary. This can even mean being honest about what scares you. People tend to value this type of honesty, and it establishes common ground by encouraging team members (and key stakeholders) to be upfront with each other.

Finally, keeping the customer's voice front and center is important, not just as design best practice, but also as a way of keeping the project team grounded. Whenever the project experiences a bump in the road, or a breakdown in team communication, Kate and Brad suggest always coming back to the question, “What’s most important to the customer?”. Make user feedback accessible to everyone in the team. This means that the customer's voice can be present throughout the whole project, and everyone, including key stakeholders, never loses sight of the real-life application of the product. In this way, teams are consistently able to work with facts and insights rather than making assumptions that they think are best for the product.

