Optimal Blog

Articles and Podcasts on Customer Service, AI and Automation, Product, and more

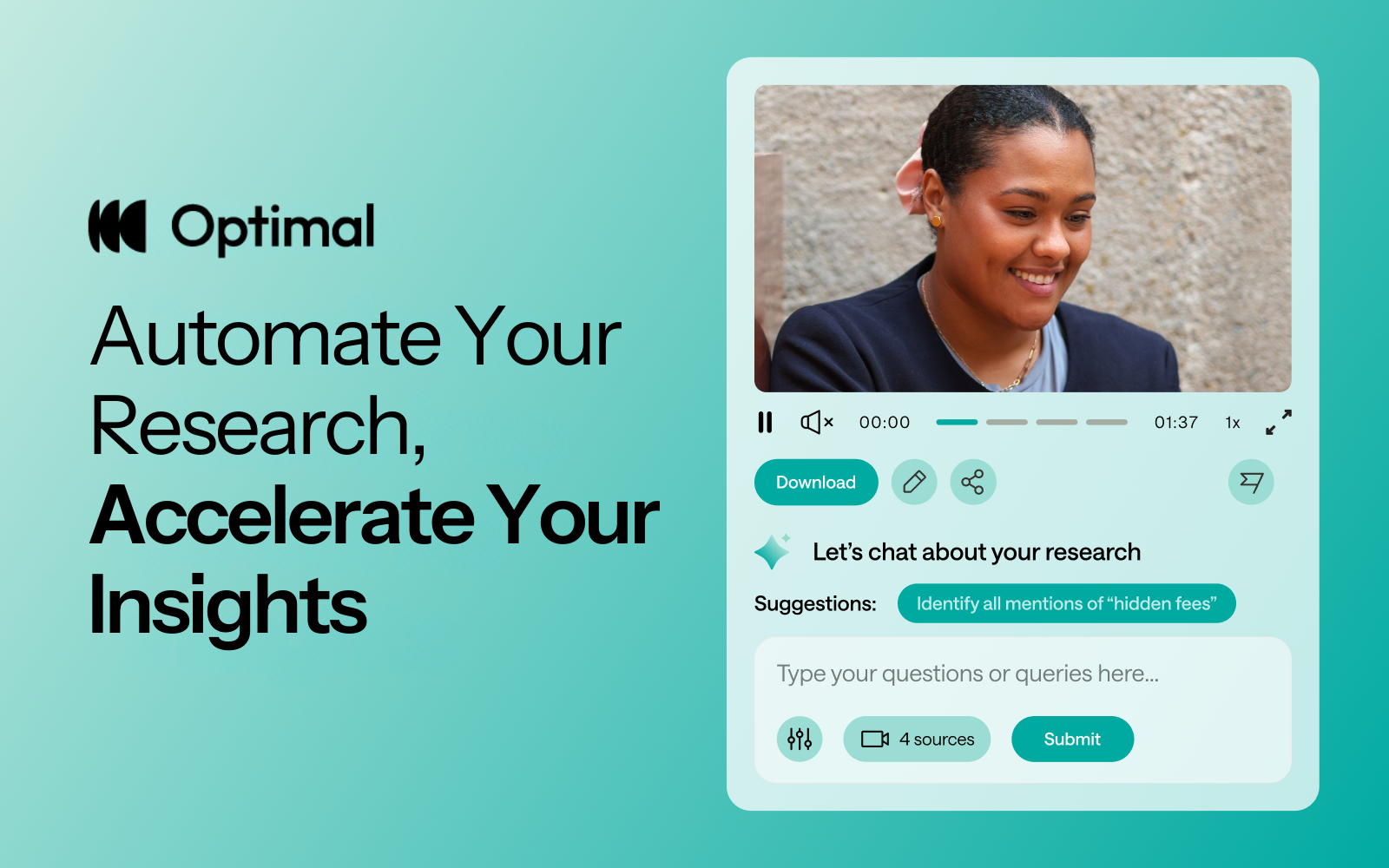

At Optimal, we know the reality of user research: you've just wrapped up a fantastic interview session, your head is buzzing with insights, and then... you're staring at hours of video footage that somehow needs to become actionable recommendations for your team.

User interviews and usability sessions are treasure troves of insight, but reviewing hours of raw footage is time-consuming and tedious, and it is easy to overlook important details. Too often, valuable user stories never make it past the recording stage.

That's why we’re excited to announce the launch of early access for Interviews, a brand-new tool that saves you time with AI and automation, turns real user moments into actionable recommendations, and provides the evidence you need to shape decisions, bring stakeholders on board, and inspire action.

Interviews, Reimagined

What once took hours of video review now takes minutes. With Interviews, you get:

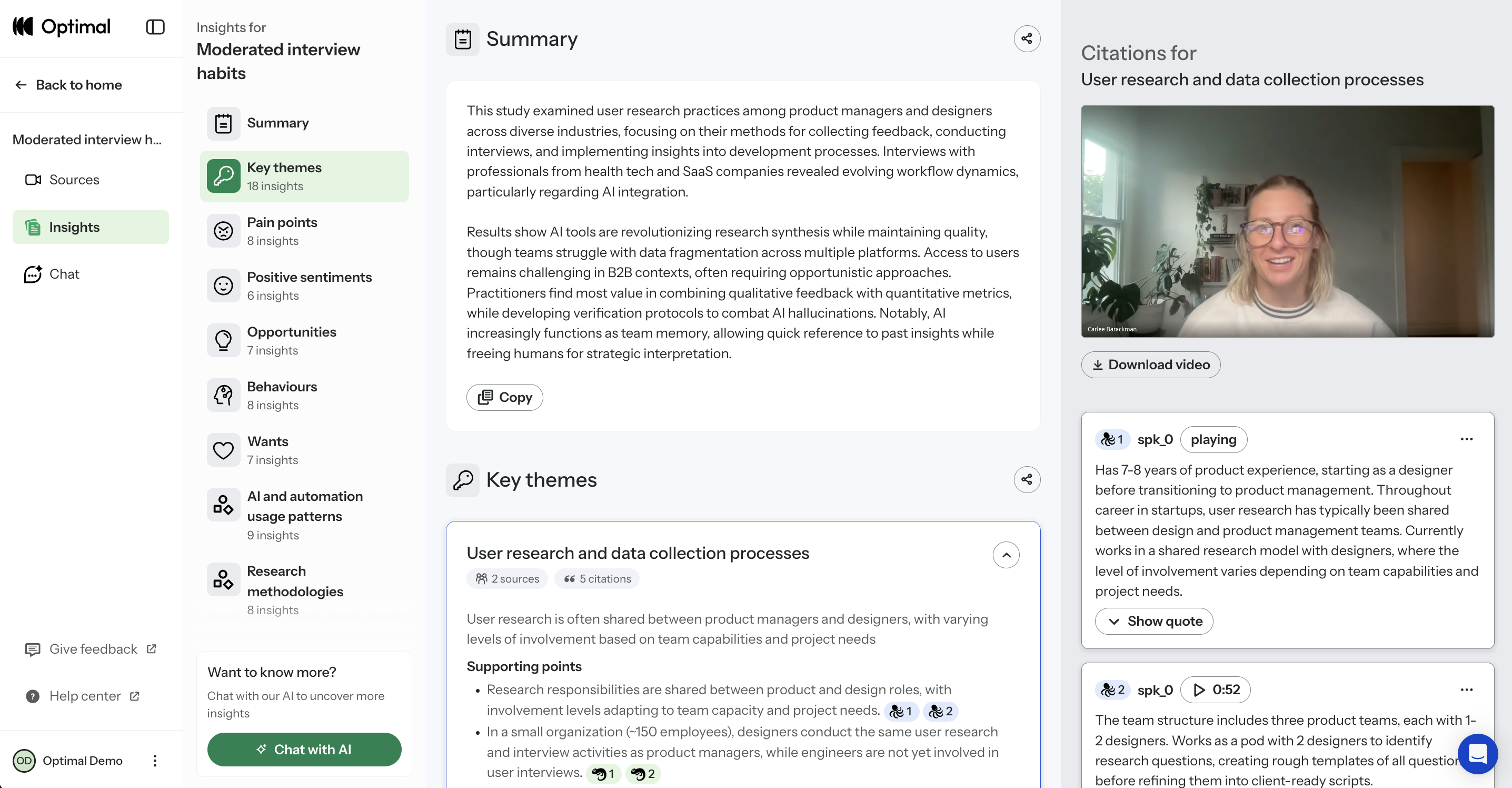

- Instant clarity: Upload your interviews and let AI automatically surface key themes, pain points, opportunities, and other key insights.

- Deeper exploration: Ask follow-up questions, or anything else, in AI chat. Every insight comes with supporting video evidence, so you can back up recommendations with real user feedback.

- Automatic highlight reels: Generate clips and compilations that spotlight the takeaways that matter.

- Real user voices: Turn insight into impact with user feedback clips and videos. Share insights and download clips to drive product and stakeholder decisions.

Groundbreaking AI at Your Service

This tool is powered by AI designed for researchers, product owners, and designers. This isn’t just transcription or summarization; it’s intelligence tailored to surface the insights that matter most. It’s like having a personal AI research assistant, accelerating analysis and automating your workflow without compromising quality. No more endless scrolling through footage.

The AI used for Interviews, like all other AI in Optimal, is backed by Amazon Bedrock on AWS, so your AI insights are supported by industry-leading protection and compliance.

What’s Next: The Future of Moderated Interviews in Optimal

This new tool is just the beginning. Soon, you’ll be able to manage the entire moderated interview process inside Optimal, from recruitment to scheduling to analysis and sharing.

Here’s what’s coming:

- Recruit users using Optimal’s managed recruitment services.

- View your scheduled sessions directly within Optimal. Link up with your own calendar.

- Connect seamlessly with Zoom, Google Meet, or Teams.

Imagine running your full end-to-end interview workflow, all in one platform. That’s where we’re heading, and Interviews is our first step.

Ready to Explore?

Interviews is available now for our latest Optimal plans with study limits. Start transforming hours of footage into minutes of clarity and bring your users’ voices to the center of every decision. We can’t wait to see what you uncover.

Want to learn more and see it in action? Join us for our upcoming webinar on Oct 21st at 12 PM PST.

Selling your design recommendations to clients and colleagues

If you’ve ever presented design findings or recommendations to clients or colleagues, then perhaps you’ve heard them say:

- “We don’t have the budget or resources for those improvements.”

- “The new executive project has higher priority.”

- “Let’s postpone that to Phase 2.”

As an information architect, I’ve presented recommendations many times. And I’ve crashed and burned more than once by doing a poor job of selling some promising ideas. Here are some things I’ve learned from getting it wrong.

Buyers prefer sellers they like and trust

You need to establish trust with peers, developers, executives and so on before you present your findings and recommendations. It sounds obvious, yet presentations often fail due to unfamiliarity, sloppiness or designer arrogance.

A year ago I ran an IA test on a large company website. The project schedule was typically “aggressive” and the client’s VPs were endlessly busy. So I launched the test without their feedback. Saved time, right?

Wrong. The client ignored all my IA recommendations, and their VPs ultimately rewrote my site map from scratch. I could have argued that they didn’t understand user-centered design. The truth is that I failed to establish credibility. I needed them to buy into the testing process, suggest test questions beforehand, or take the test as a control group. Anything to engage them would have helped – turning stakeholders into collaborators is a great way to establish trust.

Techniques for presenting UX recommendations

Many presentation tactics can be borrowed from salespeople, but a single blog post can’t do justice to the entire sales profession. So I’d just like to offer a few ideas for thought. No Jedi mind tricks though. Sincerity matters.

Emphasize product benefits, not product features

Beer commercials on TV don’t sell beer. They sell backyard parties and voluptuous strangers. Likewise, UX recommendations should emphasize product benefits rather than feature sets. This may be common marketing strategy. However, the benefits should resonate with stakeholders and not just test participants. Stakeholders often don’t care about Joe End User. They care about ROI, a more flexible platform, a faster way to publish content – whatever metrics determine their job performance.

Several years ago, I researched call center data at a large corporation. To analyze the data, I eventually built a Web dashboard. The dashboard illustrated different types of customer calls by product. When I showed it to my co-workers, I presented the features and even the benefits of tracking usability issues this way.

However, I didn’t research the specific benefits to my fellow designers. Consequently it was much, much harder to sell the idea. I should have investigated how a dashboard would fit into their daily routines. I had neglected the question that they silently asked: “What’s in it for me?”

Have a go at contrast selling

When selling your recommendations, consider submitting your dream plan first. If your stakeholders balk, introduce the practical solution next. The contrast in price will make the modest recommendation more palatable.

While working on e-commerce UI, I once ran a usability test on a checkout flow. The test clearly suggested improvements to the payment page. To try slipping it into an upcoming sprint, I asked my boss if we could make a few crucial fixes. They wouldn’t take much time. He said...no. In essence, my boss was comparing extra work to doing nothing. My mistake was compromising the proposal before even presenting it. I should have requested an entire package first: a full redesign of the shopping cart experience on all web properties. Then the comparison would have been a huge effort vs. a small effort.

Retailers take this approach every day. Car dealerships anchor buyers to lofty sticker prices, then offer cash back. Retailers like Amazon display strikethrough prices for similar effect. This works whenever buyers prefer justifying a purchase based on savings, not price.

Use the alternative choice close

Alternative Choice is a closing technique in which a buyer selects from two options. Cleverly, each answer implies a sale. Here are examples adapted for UX recommendations:

- “Which website could we implement these changes on first, X or Y?”

- “Which developer has more time available in the next sprint, Tom or Harry?”

This is better than simply asking, “Can we start on Website X?” or “Do we have any developers available?” Avoid any proposition that can be rejected with a direct “No.”

Convince with the embarrassment close

Buying decisions are emotional. When presenting recommendations to stakeholders, try appealing to their pride (remember, you’re not actually trying to embarrass someone). Again, sincerity is important. Some UX examples include:

- “To be an industry leader, we need a best-of-breed design like Acme Co.”

- “I know that you want your company to be the best. That’s why we’re recommending a full set of improvements instead of a quick fix.”

Techniques for answering objections once you’ve presented

Once you’ve done your best to present your design recommendations, you may still encounter resistance (surprise!). To make it simple, I’ve classified objections using the three points in the triangle model of project management: Time, Price and Quality. Any project can only have two. And when presenting design research, you’re advocating Quality, i.e. design usability or enhancements. Pushback on Quality generally means that people disagree with your designs (a topic for another day).

Therefore, objections will likely be based on Time or Price instead.

In a perfect world, all design recommendations yield ROI backed by quantitative data. But many don’t. When selling the intangibles of “user experience” or “usability” improvements, here are some responses to consider when you hear “We don’t have time” or “We can’t afford it”.

“We don’t have time” means your project team values Time over Quality

If possible, ask people to consider future repercussions. If your proposal isn’t implemented now, it may require even more time and money later. Product lines and features expand, and new websites and mobile apps get built. What will your design improvements cost across the board in 6 months? Opportunity costs also matter. If your design recommendations are postponed, then perhaps you’ll miss the holiday shopping season, or the launch of your latest software release. What is the cost of not approving your recommendations?

“We can’t afford it” means your project team values Price over Quality

Many project sponsors nix user testing to reduce the design price tag. But there’s always a long-term cost. A buggy product generates customer complaints. The flawed design must then be tested, redesigned, and recoded. So, which is cheaper: paying for a single usability test now, or the aggregate cost of user dissatisfaction and future rework? Explain the difference between price and cost to your team.

Parting Thoughts

I realize that this only scratches the surface of sales, negotiation, persuasion and influence. Entire books have been written on topics like body language alone. Uncommon books in a UX library might be “Influence: The Psychology of Persuasion” by Robert Cialdini and “Secrets of Closing the Sale” by Zig Ziglar. Feel free to share your own ideas or references as well.

Any time we present user research, we’re selling. Stakeholder mental models are just as relevant as user mental models.

UX and careers in banking – Yawn or YAY?

In celebration of World Usability Day 2012, Optimal Workshop invited Natalie Kerschner, Senior Usability Analyst at BNZ Online, to give her take on this year’s theme of The Usability of Financial Systems.

Years ago, when I was starting my career in User Experience (UX), a big project came up that required a full-time UX role. At the time I was in a junior position, yet I was being given the chance to provide input throughout the entire project, help drive the design, define the business requirements and ensure it met all the user needs possible.

It was an exciting proposition, but there was one problem: it was based in a bank! I tried everything I could to remove myself from this project, as I couldn’t imagine anything worse; after all, there is nothing appealing about dealing with finances!

Twelve years on and I am still working for a bank; in fact I’ve worked in several banks and all I can say is, oh how wrong I was! You see, there is one thing about finances: absolutely everybody has to deal with them! Whether you love to budget and have savings goals, or don’t want to think about it at all, you still have to use money.

That is what makes it a UX dream!

Most industries are limited by a few target demographics, but in every financial project you need to go back to the basics and investigate who is using it, and the why, when and where. People’s motivations and needs tend to be so incredibly diverse that you are never going to get tired of asking “Why” in this industry. If having an extremely varied demographic wasn’t challenging enough, the dramatic evolution of technology is also changing how people are dealing with and even thinking about their finances.

Two years ago, if your bank didn’t have a mobile application or at least a mobile strategy, it wasn’t a major concern. Nowadays, as soon as a bank introduces a new mobile feature, social media sites are bombarded with comments from customers banking with competitors, saying, “When do we get this?” Times have rapidly changed and the public has a much lower tolerance for waiting for new features to be developed, and that alone has had a huge impact on how we carry out UX in the financial field. We no longer have time to do lengthy and large-scale usability projects, as the technology, user needs and business needs can change radically in that time. As UX professionals, we have had to adapt to this changing landscape. The labs of old are gone, replaced by fast, iterative and, dare I say, Agile UX practices.

So what does a truly diverse demographic and swiftly changing technology give us?

In my particular situation, it gave me a marvelous opportunity to re-evaluate how I practiced UX, evolving it and integrating these new techniques into project teams a lot more easily than ever before. If you don’t have time for a full usability study at the end of a project, it makes sense to get the end users involved right from the start and keep them involved until the finish. Yes, this is what the UX community has been saying we should do for years, but now it makes sense to the business and development teams too. The fast changes in the industry are actually making it easier to get customer focus and input earlier, as project teams are more open to experimenting, trialing designs and ideas early on and seeing what happens.

So is working in the financial industry boring for a UX professional?

Hardly! Being a UX professional in this type of business landscape impels you to be drawn in to the evolution of UX. Every day is filled with potential and fresh challenges, making the practice of UX in banking a whole lot more rewarding!

Natalie Kerschner
Senior Usability Analyst, BNZ Online

4 options for running a card sort

This morning I eavesdropped on a conversation between Amy Worley (@worleygirl) and The SemanticWill™ (@semanticwill) on "the twitters".

Aside from recommending two books by Donna Spencer (@maadonna), I asked Nicole Kaufmann, one of the friendly consultants at Optimal Usability, if she had any advice for Amy about reorganising 404 books into categories that make more sense.

I don't know Amy's email address and this is much too long for a tweet. In any case I thought it might be helpful for someone else too, so here's what Nicole had to say:

In general I would recommend having at least three sources of information (e.g. 1 analytics review + 1 open card sort + 1 tree test, or 2 card sorts + 1 tree test) in order to come up with a useful and reliable categorisation structure.

Here are four options for how you could approach it (from my most preferred to least preferred):

Option A

- Pick the 20-25 cards you think will be the most difficult and 20-25 cards that you think will be the easiest to sort and test those in one open card sort.

- Based on the results, create one or two sets of category structures which you can test in one or two closed card sorts. Consider replacing about half of the tested cards with new ones.

- Based on the results of those two rounds of card sorting, create a categorisation structure and pick a set of difficult cards which you can turn into tasks which you can test in a tree test.

- Plus: Categorisation is revised between studies. Relatively easy analysis.

- Minus: Not all cards have been tested. Needs about 80-110 participants, depending on the number of studies. Time intensive.

Option B

- Pick the 20-25 cards you think will be the most difficult and 20-25 cards that you think will be the easiest to sort and test those in one open card sort.

- Based on the results do a closed card sort(s) excluding the easiest cards and adding some new cards which haven't been tested before.

- Plus: Card sort with reasonable number of cards, only 40-60 participants needed, quick to analyse.

- Minus: Potential bias and misleading results if the wrong cards are picked.

Option C

- Create your own top level categories (5-8) (could be based on a card sort) and assign cards to these categories, then pick random cards within those categories and set up a card sort for each (5-8).

- Based on the results, create a categorisation structure and a set of tasks which will be tested in a tree test.

- Plus: Limited set of card sorts with reasonable number of cards, quick to analyse. Several sorts for comparison.

- Minus: Potential bias and misleading results if the wrong top categories are picked. Potentially different categorisation schemes/approaches for each card sort, making them hard to combine into one solid categorisation structure.

Option D

- Put all 404 cards into 1 open card sort, showing each participant only 40-50 cards.

- Do a follow-up card sort with the most difficult and easiest cards (similar to Option B).

- Plus: All cards will have been tested.

- Minus: You need at least 200-300 completed responses to get reasonable results. Depending on your participant sources it may take ages to get that many participants.
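
To see why Option D needs so many responses, here is a rough back-of-the-envelope sketch in Python. It assumes each participant is shown a uniformly random subset of the 404 cards, which may not match how a given card sorting tool actually picks the subset. The function names are made up for this illustration.

```python
def expected_views_per_card(total_cards, cards_per_participant, participants):
    """Average number of participants who see any one card, assuming each
    participant is shown a uniformly random subset of the cards."""
    return participants * cards_per_participant / total_cards


def expected_pair_views(total_cards, cards_per_participant, participants):
    """Average number of participants who see a given PAIR of cards together,
    under the same random-subset assumption."""
    k, n = cards_per_participant, total_cards
    # Probability that two specific cards both land in one random subset.
    p_both = (k * (k - 1)) / (n * (n - 1))
    return participants * p_both


TOTAL_CARDS = 404  # the number of books in Amy's scenario

for participants in (200, 300):
    for subset_size in (40, 50):
        views = expected_views_per_card(TOTAL_CARDS, subset_size, participants)
        pairs = expected_pair_views(TOTAL_CARDS, subset_size, participants)
        print(
            f"{participants} participants x {subset_size} cards: "
            f"~{views:.1f} views per card, ~{pairs:.1f} views per card pair"
        )
```

Under these assumptions each card is seen between about 20 and 37 times, but any particular pair of cards is seen together by only a handful of participants. Card sort analysis leans on how often cards are grouped together, so the low pair coverage is one way to read the 200-300 figure.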

Digitalization and Customer-Centricity in the Utilities Sector

The utilities industry stands at a pivotal crossroads. With new generations of digitally-savvy consumers and mounting environmental pressures, the traditional utility business model is rapidly evolving. For UX professionals in this space, embracing digitalization isn't just about implementing new technologies; it's about fundamentally reimagining the customer experience to place users at the center of every decision.

The Changing Utility Landscape

Several forces are driving the urgent need for digital transformation in the utilities sector:

- Rising customer expectations: Today's consumers, accustomed to seamless digital experiences from retailers and service providers, expect the same from their utility companies.

- Environmental imperatives: The global push toward sustainability requires smarter resource management and customer engagement around conservation efforts.

- Generational shifts: Younger consumers interact with service providers differently, preferring digital touchpoints and self-service options.

- Competitive pressures: In deregulated markets, utilities that offer superior digital experiences gain a competitive advantage.

Defining Customer-Centric Digitalization

True customer-centricity in the utilities sector means more than simply adding digital channels; it requires a holistic approach that delivers value at every interaction point:

Digital Touchpoints That Matter

Successful utility digitalization focuses on creating meaningful customer connections across multiple channels:

- Mobile-first account management: Intuitive apps and responsive websites that allow customers to monitor usage, pay bills, and request services from any device.

- Self-service portals: Comprehensive knowledge bases and troubleshooting tools that empower customers to find answers and resolve issues independently.

- Smart home integration: Connecting utility services with smart home ecosystems to offer unprecedented convenience and control over resource usage.

- Personalized communications: Tailored outreach that reflects individual preferences, usage patterns, and needs rather than generic mass messaging.

- Interactive educational resources: Engaging digital content that helps customers understand their consumption and make informed decisions.

Technology Investments with Impact

For UX professionals advising on technology investments, prioritize solutions that directly enhance the customer experience:

High-Value Digital Investments

- Customer data platforms: Systems that unify customer information across touchpoints to create comprehensive profiles that inform personalization efforts.

- Advanced analytics: Tools that transform usage data into actionable insights for both customers and the business.

- Omnichannel communication systems: Platforms that ensure consistent experiences whether a customer reaches out via app, website, phone, or in person.

- IoT and smart metering infrastructure: Technologies that enable real-time monitoring and proactive service management.

- User experience research tools: Solutions that gather continuous feedback to drive ongoing experience improvements.

Implementation Strategies for Success

To maximize the impact of digitalization efforts, consider these strategic approaches:

- Begin with customer journey mapping: Thoroughly document every touchpoint in the customer lifecycle to identify pain points and opportunities for digital enhancement.

- Adopt human-centered design practices: Involve actual customers in the design process through testing, feedback sessions, and co-creation workshops.

- Implement agile delivery methods: Release digital improvements incrementally, gathering user feedback to refine features before full-scale deployment.

- Invest in internal digital literacy: Ensure staff across the organization understand and can leverage new digital capabilities to better serve customers.

- Measure what matters: Develop metrics that track not just adoption of digital tools but their impact on customer satisfaction and business outcomes.

Optimal is your Partner in Customer-Centric Digitalization

For utilities serious about creating exceptional digital experiences, Optimal's suite of UX research tools provides invaluable support throughout the digitalization journey:

Discovering Customer Needs with Card sorting

Before building new digital interfaces, understand how customers naturally organize information:

- Run card sorting exercises to determine how users expect utility services to be categorized

- Identify terminology that resonates with customers versus industry jargon that creates confusion

- Create information architectures that match customers' mental models, resulting in more intuitive navigation

Validating Navigation Structures with Tree testing

For complex utility portals with multiple services and functions:

- Test the navigability of your website structure before investing in development

- Identify where customers expect to find specific functions like usage monitoring, bill payment, or service requests

- Optimize menu structures to ensure customers can complete common tasks efficiently

Perfecting Page Layouts with First-click testing

When designing critical utility service interfaces:

- Test where users first click when trying to complete high-priority tasks

- Ensure important functions like outage reporting or emergency contacts are immediately discoverable

- Validate that key actions stand out visually on both desktop and mobile interfaces

Gathering Voice of Customer with Surveys

To ensure digitalization efforts address genuine customer needs:

- Run targeted surveys to understand customer preferences for digital versus traditional service channels

- Identify specific pain points in current service delivery that digitalization should address

- Segment feedback by customer type to develop targeted digital strategies for different user groups

Analyzing with Qualitative insights

During user testing of new digital platforms:

- Capture rich, contextual observations of how customers interact with digital interfaces

- Identify recurring themes in customer feedback that reveal improvement opportunities

- Transform qualitative insights into actionable design recommendations

Looking Ahead: The Future of Utility Customer Experience

The digitalization journey is ongoing. Forward-thinking utilities are already exploring:

- Predictive service models that address potential issues before customers experience problems

- AR/VR applications for helping customers visualize energy-saving home improvements

- Voice-activated service interfaces that make utility management effortless

- Blockchain-based solutions for peer-to-peer energy trading in communities

Optimal is Creating a Foundation for Digital Success

The path to successful digitalization in utilities requires a deep understanding of customer needs, expectations, and behaviors. Optimal's integrated platform provides the research foundation needed to build truly customer-centric digital experiences:

- Begin with discovery: Use Card sorting and Surveys to understand how customers conceptualize utility services and what they value most in digital interactions.

- Validate before building: Test information architectures with Tree testing to ensure customers can navigate intuitively through your digital services.

- Refine the experience: Use First-click testing to perfect interface designs and identify where users naturally look for key functions.

- Learn continuously: Implement Qualitative insights to gather ongoing feedback that informs continuous improvements to your digital experience.

Conclusion

For UX professionals in the energy and utilities sector, the mandate is clear: digitalization is no longer optional but essential for meeting customer expectations and addressing environmental challenges. By investing strategically in technologies that enhance the customer experience at every touchpoint, and using robust UX research platforms like Optimal to guide these investments, utilities can transform their relationship with consumers from basic service providers to valued partners in resource management.

The most successful utilities will be those that view digitalization not merely as a technology upgrade but as a fundamental shift toward customer-centricity, placing the user's needs, preferences, and experiences at the heart of every business decision. With Optimal as your research partner, you can ensure your digitalization efforts truly deliver on the promise of exceptional customer experiences.

Optimal vs Dovetail: Why Smart Product Teams Choose Unified Research Workflows

UX, product and design teams face growing challenges with tool proliferation, relying on different options for surveys, usability testing, and participant recruitment before transferring data into analysis tools like Dovetail. This fragmented workflow creates significant data integration issues and reporting bottlenecks that slow down teams trying to conduct smart, fast UX research. The constant switching between platforms not only wastes time but also increases the risk of data loss and inconsistencies across research projects. Optimal addresses these operational challenges by unifying the entire research workflow within a single platform, enabling teams to recruit participants, run tests and studies, and perform analysis without the complexity of managing multiple tools.

Why Choose Optimal over Dovetail?

Unified Research Operations vs. Fragmented Workflow

Optimal's Streamlined Workflow: Optimal eliminates tool chain management by providing recruitment, testing, and analysis in one platform, enabling researchers to move seamlessly from study design to actionable insights.

Dovetail's Tool Chain Complexity: In contrast, Dovetail requires teams to coordinate multiple platforms, one for recruitment, another for surveys, a third for usability testing, then import everything for analysis, creating workflow bottlenecks and coordination overhead.

Optimal's Focused Research Flow: Optimal's unified interface keeps researchers in flow state, moving efficiently through research phases without context switching or tool coordination.

Context Switching Inefficiency: Dovetail users constantly switch between different tools with different interfaces, learning curves, and data formats, fragmenting focus and slowing research velocity.

Integrated Intelligence vs. Data Silos

Consistent Data Standards: Optimal's unified platform ensures consistent data collection standards, formatting, and quality controls across all research methods, delivering reliable insights from integrated data sources.

Fragmented Data Sources: Dovetail aggregates data from multiple external sources, but this fragmentation can create inconsistencies, data quality issues, and gaps in analysis that compromise insight reliability.

Automated Data Integration: Optimal automatically captures and integrates data across all research activities, enabling real-time analysis and immediate insight generation without manual data management.

Manual Data Coordination: Dovetail teams spend significant time importing, formatting, and reconciling data from different tools before analysis can begin, delaying insight delivery and increasing error risk.

Comprehensive Research Capabilities vs. Analysis-Only Focus

Complete End-to-End Research Platform: Optimal provides a full suite of native research capabilities including live site testing, prototype testing, card sorting, tree testing, surveys, and more, all within a single platform. Optimal's live site testing allows you to test actual websites and web apps with real users without any code requirements, enabling continuous optimization post-launch.

Dovetail Requires External Tools: Dovetail focuses primarily on analysis and requires teams to use separate tools for data collection, adding complexity and cost to the research workflow.

AI-Powered Interview Analysis: Optimal's new Interviews tool transforms how teams extract insights from user research. Upload interview videos and let AI automatically surface key themes, generate smart highlight reels, create timestamped transcripts, and produce actionable insights in hours instead of weeks. Every insight comes with supporting video evidence, making it easy to back up recommendations with real user feedback.

Dovetail's Manual Analysis Process: While Dovetail offers analysis features, teams must still coordinate external interview tools and manually import data before analysis can begin, creating additional workflow steps.

Global Research Capabilities vs. Limited Data Collection

Global Participant Network: Optimal's 10+ million verified participants across 150+ countries provide comprehensive recruitment capabilities with advanced targeting and quality assurance for any research requirement.

Limited Native Recruitment: Dovetail's beta participant recruitment add-on lacks the scale and reliability enterprise teams need, forcing dependence on external recruitment services with additional costs and complexity.

Complete Research ROI: Optimal delivers immediate value through integrated data collection and analysis capabilities, ensuring consistent ROI regardless of external research dependencies.

Analysis-Only Value: Dovetail's value depends entirely on research volume from external sources, making ROI uncertain for teams with moderate research needs or budget constraints.

Dovetail Challenges:

Dovetail may slow teams because of challenges with:

- Multi-tool coordination requiring significant project management overhead

- Data fragmentation creating inconsistencies and quality control challenges

- Context switching between platforms disrupting research flow and focus

- Manual data import and formatting delaying insight delivery

- Complex tool chain management requiring specialized technical knowledge

When Optimal is the Right Choice

Optimal becomes essential for:

- Streamlined Workflows: Teams needing efficient research operations without tool coordination overhead

- Research Velocity: Projects requiring rapid iteration from hypothesis to validated insights

- Data Consistency: Studies where integrated data standards ensure reliable analysis and conclusions

- Focus and Flow: Researchers who need to maintain deep focus without platform switching

- Immediate Insights: Teams requiring real-time analysis and instant insight generation

- Resource Efficiency: Organizations wanting to maximize researcher productivity and minimize tool management

Ready to move beyond basic feedback to strategic research intelligence? Experience how Optimal's analytical depth and comprehensive insights drive product decisions that create competitive advantage.

Optimal vs Ballpark: Why Research Depth Matters More Than Surface-Level Simplicity

Many smaller product teams are drawn to newer research tools like Ballpark, which promise simple, quick user feedback. However, larger teams conducting UX research that drives product strategy need platforms that deliver actionable insights rather than just surface-level metrics. While Ballpark provides basic testing functionality that works for simple validation, Optimal offers the research depth, comprehensive analysis capabilities, and strategic intelligence that teams require when making critical product decisions.

Why Choose Optimal over Ballpark?

Surface-Level Feedback vs. Strategic Research Intelligence

- Ballpark's Shallow Analysis: Ballpark focuses on collecting quick feedback through basic surveys and simple preference tests, but lacks the analytical depth needed to understand why users behave as they do or what actions to take based on findings.

- Optimal's Strategic Insights: Optimal transforms user feedback into strategic intelligence through advanced analytics, behavioral analysis, and AI-powered insights that reveal not just what happened, but why it happened and what to do about it.

- Limited Research Methodology: Ballpark's toolset centers on simple feedback collection without comprehensive research methods like advanced card sorting, tree testing, or sophisticated user journey analysis.

- Complete Research Arsenal: Optimal provides the full spectrum of research methodologies needed to understand complex user behaviors, validate design decisions, and guide strategic product development.

Quick Metrics vs. Actionable Intelligence

- Basic Data Collection: Ballpark provides simple metrics and basic reporting that tell you what happened but leave teams to figure out the 'why' and 'what next' on their own.

- Intelligent Analysis: Optimal's AI-powered analysis does more than collect data: it identifies patterns, predicts user behavior, and provides specific recommendations that guide product decisions.

- Limited Participant Insights: Ballpark's 3 million participant panel provides basic demographic targeting but lacks the sophisticated segmentation and behavioral profiling needed for nuanced research.

- Deep User Understanding: Optimal's 100+ million verified participants across 150+ countries enable precise targeting and comprehensive user profiling that reveals deep behavioral insights and cultural nuances.

Startup Risk vs. Enterprise Reliability

- Unproven Stability: As a recently founded startup with limited funding transparency, Ballpark presents platform stability risks and uncertain long-term viability for enterprise research investments.

- Proven Enterprise Reliability: Optimal has successfully launched over 100,000 studies with a 99.9% uptime guarantee, providing the reliability and stability enterprise organizations require.

- Limited Support Infrastructure: Ballpark's small team and basic support options cannot match the dedicated account management and enterprise support that strategic research programs demand.

- Enterprise Support Excellence: Optimal provides dedicated account managers, 24/7 enterprise support, and comprehensive onboarding that ensures research program success.

When to Choose Optimal

Optimal is the best choice for teams looking for:

- Actionable Intelligence: When teams need insights that directly inform product strategy and design decisions

- Behavioral Understanding: Projects requiring deep analysis of why users behave as they do

- Complex Research Questions: Studies that demand sophisticated methodologies and advanced analytics

- Strategic Product Decisions: When research insights drive major feature development and business direction

- Comprehensive User Insights: Teams needing complete user understanding beyond basic preference testing

- Competitive Advantage: Organizations using research intelligence to outperform competitors

Ready to move beyond basic feedback to strategic research intelligence? Experience how Optimal's analytical depth and comprehensive insights drive product decisions that create competitive advantage.
