Definition of a website footer

The footer of a website sits at the very bottom of every single web page and contains links to various types of content on your website. It’s an often overlooked component of a website, but it plays several important roles in your information architecture (IA) – it’s not just some extra thing that gets plonked at the bottom of every page.

Getting your website footer right matters!

The footer communicates to your website visitors that they’ve reached the bottom of the page, and it’s also a great place to position important content links that don’t belong anywhere else – within reason. A website footer is not a dumping ground for random content links that you couldn’t find a home for. However, some content types are conventionally accessed via the footer, e.g., privacy policies and copyright information.

Lastly, from a usability and navigation perspective, website footers can serve as a safety net for lost website visitors. Users might be scrolling and scrolling trying to find something, and the footer might be what catches them and guides them back to safety before they give up on your website and go elsewhere. Footers are a functional and important part of your overall IA, and they also have an architecture of their own.

Read on to learn about the types of content links that might be found in a footer, see some real-life examples and explore some approaches that you might take when testing your footer to ensure that your website is supporting your visitors from top to bottom.

What belongs in a website footer

Deciding which content links belong in your footer depends entirely on your website. The type of footer, its intent and its content depend on its audience: your customers, potential customers and more, i.e. your website visitors. Every website is different, but here’s a list of links to content types that might typically be found in a footer.

- Legal content that may include: copyright information, disclaimer, privacy policy, terms of use or terms of service – always seek appropriate advice on legal content and where to place it!

- Your site map

- Contact details including social media links and live chat or chat bot access

- Customer service content that may include: shipping and delivery details, order tracking, returns, size guides, pricing (if you offer a service) and product recall information

- Website accessibility details and ways to provide feedback

- ‘About Us’ type content that may include: company history, team or leadership team details, the careers page and more

- Key navigational links that also appear in the main navigation menu that is presented to website visitors when they first land on the page (e.g. at the top or the side)

Website footer examples

Let’s take a look at three diverse real-life examples of website footers.

IKEA US

IKEA’s US website has an interesting double-barrelled footer that is also large and complex – a ‘fat footer’ as it’s often called – and its structure changes as you travel deeper into the IA. The image below, taken from the IKEA US home page, shows two clear blocks of text separated by a blue horizontal line. Above the line is the heading ‘All Departments’ with four columns showing product categories. Below the line there are seven clear groups of content links covering a broad range of topics, including customer service information, links that appear in the top navigation menu and careers. At the very bottom of the footer there are social media links and the copyright information for the website.

As expected, IKEA’s overall website IA is quite large, and as a website visitor clicks deeper into the IA, the footer starts to change. On the product category landing pages, the footer is mostly the same but with a new addition of some handy breadcrumbs to aid navigation (see below image).

When a website visitor travels all the way down to the individual product page level, the footer changes again. In the below image found on the product page for a bath mat, while the blue line and everything below it is still there, the ‘All Departments’ section of the footer has been removed and replaced with non-clickable text on the left hand side that reads as ‘More Bath mats’ and a link on the right hand side that says ‘Go to Bath mats’. Clicking on that link takes the website visitor back to the page above.

Overall, evolving the footer content as the website visitor progresses deeper into the IA is an interesting approach: as the main page content becomes more focussed, so does the footer, while still maintaining multiple supportive safety net features.

M.A.C Cosmetics US

The US website of this well-known cosmetics brand has a four-part footer. At first it appears to have just three parts, as shown in the image below: a wide main section with seven content link categories covering a broad range of content types, and a narrow black strip above and below it making up the second and third parts. The strip above has loyalty program and live chat links and the strip below contains mostly links to legal content.

When a website visitor hovers over the ‘Join our loyalty program’ call to action (CTA) in that top narrow strip, the hidden fourth part of the footer, which is slightly translucent, pulls up like a drawer and sits directly above the strip, capping off the top of the main section (as shown in the below image). This section contains more information about the loyalty program and further CTAs to join or sign in. It disappears when the cursor is moved away from the hover CTA, or it can be collapsed manually via the arrow in the top right hand corner of this fourth part. It’s an interesting and unexpected interaction to have with a footer, but it adds to the overall consistent and cohesive experience of this website because it feels like the footer is an active participant in that experience.

MAC Cosmetics US website footer as it appears on the home page with all four parts visible (accessed May 2019).

Domino’s Pizza US

Domino’s Pizza’s US website has a reasonably flat footer in terms of architecture, but it occupies as much space as a more complex or deeper footer. As shown in the image below, its content links are presented horizontally over three rows on the left hand side of the footer and these links are visually separated by forward slashes. It also displays social media links and some advertising content on the right hand side. The most interesting feature of this footer is the large paragraph of text titled ‘Legal Stuff’ below the links. Delightfully, it uses direct, clear and plain language and even includes a note that delivery charges do not include tips, along with a request to ‘Please reward your driver for awesomeness’.

Domino’s Pizza US website footer as it appears on the home page (accessed May 2019).

How to test a website footer

Like every other part of your website, the only way you’re going to know if your footer is supporting your website visitors is if you test it with them. When testing a website’s IA overall, the footer is often excluded. This might be because we want to focus on other areas first or maybe it’s because testing everything at once has the potential to be overwhelming for our research participants.

Testing a footer is a fairly easy thing to do and there’s no right or wrong approach – it really does depend on where you are up to in your project, the resources you have available to you and the size and complexity of the footer itself!

If you’re designing a footer for a new website, there are a few ways you might approach ensuring your footer is best supporting your website visitors. If you’re planning to include a large and complex footer, it’s a good idea to start by running an open card sort just on those footer links. An open card sort will help you understand how your website visitors expect those content links in your footer to be grouped and what they think those groups should be called.

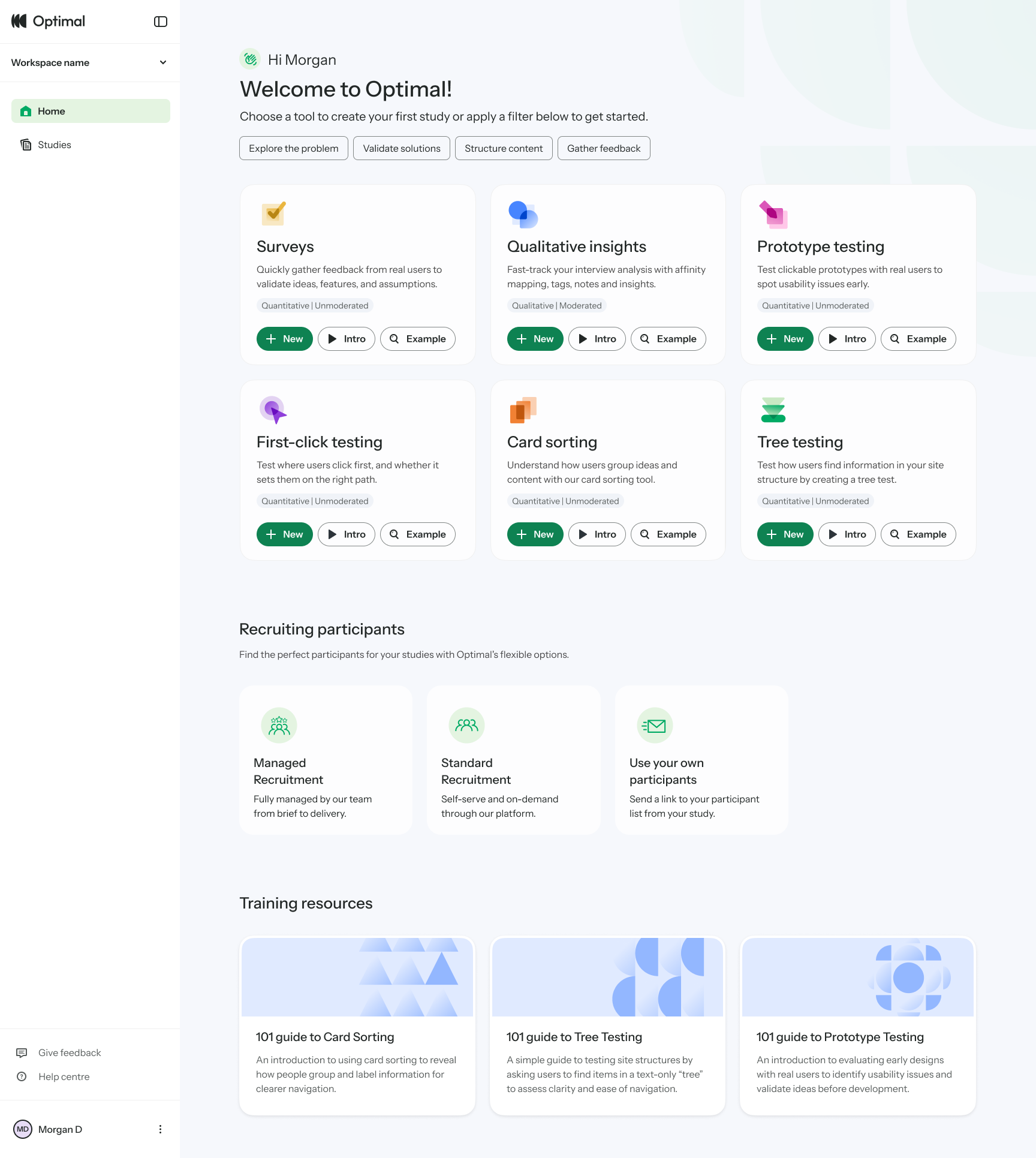
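To make the open card sort idea a little more concrete, here is a minimal sketch of one common way to read the results: counting how often participants put the same two footer links into the same group. The participants, group names and cards below are made up for illustration, and `pair_counts` is a hypothetical helper rather than part of any particular tool.

```python
from collections import Counter
from itertools import combinations

# Hypothetical open card sort results (illustrative only).
# Each participant created their own groups and named them themselves.
sorts = [
    {"Help": ["Returns", "Order tracking", "Size guide"],
     "Legal": ["Privacy policy", "Terms of use"]},
    {"Orders": ["Returns", "Order tracking"],
     "Fine print": ["Privacy policy", "Terms of use", "Size guide"]},
    {"Support": ["Returns", "Order tracking", "Size guide"],
     "Policies": ["Privacy policy", "Terms of use"]},
]


def pair_counts(sorts):
    """Count how many participants placed each pair of cards in the same group."""
    counts = Counter()
    for participant in sorts:
        for cards in participant.values():
            # Sort the cards so each pair is always stored in the same order.
            for pair in combinations(sorted(cards), 2):
                counts[pair] += 1
    return counts


counts = pair_counts(sorts)
for (a, b), n in counts.most_common():
    print(f"{a} + {b}: grouped together by {n} of {len(sorts)} participants")
```

Pairs that most participants group together are good candidates for sharing a footer column, while a card that keeps moving between groups (here, the size guide) is worth a closer look. The group names participants chose can be reviewed alongside these counts when deciding what to call each group.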

If you’re redesigning an existing website, you might first run a tree test on the existing footer to benchmark it and pinpoint the exact issues. You might tree test just the footer in the study or you might test the whole website including the footer. Optimal's tree testing is really flexible: you can tree test just a small section of an IA or you can do the whole thing in one go to find out where people are getting lost in the structure. Your approach will depend on your project and what you already know so far. If you suspect there may be issues with the website’s footer (for example, if no one is visiting it and/or you’ve been receiving customer service requests from visitors to help them find content that only lives in the footer), it would be a good idea to consider isolating it for testing. This will help you avoid any competition between the footer and the rest of your IA, as well as any potential confusion that may arise from duplicated tree branches (i.e. when your footer contains labels that also appear elsewhere in your IA).
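When deciding whether to isolate the footer for a tree test, it can help to check how many labels it shares with the rest of the IA. The sketch below assumes the IA is written out as nested dictionaries. The `main_nav` and `footer` trees and the `labels` helper are hypothetical and only illustrate the check.

```python
# Hypothetical IA (illustrative only), written as nested dicts:
# each key is a label and each value holds that label's children.
main_nav = {
    "Products": {"Bedroom": {}, "Bathroom": {}},
    "Stores": {},
    "Careers": {},
}
footer = {
    "Customer service": {"Returns": {}, "Order tracking": {}},
    "About us": {"Careers": {}, "Company history": {}},
    "Stores": {},
}


def labels(tree):
    """Return every label in the tree, at any depth."""
    found = set()
    for label, children in tree.items():
        found.add(label)
        found |= labels(children)
    return found


# Labels that appear in both trees would become duplicated branches
# if the whole IA were tested in one study.
duplicates = sorted(labels(main_nav) & labels(footer))
# Labels that only live in the footer are candidates for footer-specific tasks.
footer_only = sorted(labels(footer) - labels(main_nav))

print("Labels in both the main navigation and the footer:", duplicates)
print("Labels that only live in the footer:", footer_only)
```

A long list of shared labels suggests that testing the footer on its own may give cleaner results, while the footer-only labels are a natural starting point for writing tree test tasks.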

If you’re short on time and there aren’t any known issues with the footer prior to a redesign, you might tree test the entire IA in your benchmark study, iterate your design and then, along with everything else, include testing activities for your footer in your moderated usability testing plan. You might include a usability testing scenario or question that requires your participants to complete a task that involves finding content that can only be found in the footer (e.g., shipping information if it’s an ecommerce website). Also keep a close eye on how your participants are moving around the page in general and see if and when the footer comes into play. Is it helping people when they’re lost and scrolling? Or is it going unnoticed? If so, why? Talk to your research participants like you would about any other aspect of your website to find out what’s going on there. When resources are tight, use your best judgement and choose the research approach that’s best for your situation. We’ve all had moments where we’ve had to be pragmatic and do our best with what we have.

When you’re at a stage in your design process where you have a visual design or concept for your footer, you could also run a first-click test. First-click tests are quick and easy and will help you determine how your website visitors are faring once they reach your footer and whether they can identify the correct content link to complete their task. Studies can be run remotely or in person and, just like the rest of the tools in Optimal's user research platform, are super quick to run and great for reaching website visitors all over the world simply by sharing a link to the study.