Hello, my name is Rick and I’m a sociologist. All together, “Hi, Rick!” Now that we’ve got that out of the way, let me tell you about how I use card sorting in my research. I'll soon be running a series of in-person, moderated card sorting sessions. This article covers why card sorting is an integral part of my research, and how I've designed the study to answer specific questions about two distinct parts of society.

Card sorting to establish how different people comprehend their worlds

Card sorting, or pile sorting as it’s sometimes called, has a long history in anthropology, psychology and sociology. Anthropologists, in particular, have used it to study how different cultures think about various categories. Researchers in the 1970s conducted card sorts to understand how different cultures categorize things like plants and animals. Sociologists of that era also used card sorts to examine how people think about different professions and careers. And since then, scholars have continued to use card sorts to learn about similar categorization questions.

In my own research, I study how different groups of people in the United States imagine the category of 'religion'. As those crazy 1970s anthropologists showed, card sorting is a great way to learn how people understand particular social categories. So I’m using card sorting to better understand how two groups of people with dramatically different views, evangelical Christians and self-identified atheists, understand 'religion'.

Think of it like this. Some people say that religion is the bedrock of American society. Others say that too much religion in public life is exactly what’s wrong with this country. What's not often considered is that these two groups often understand the concept of 'religion' in very different ways. It’s like the group of blind men and the elephant: one touches the trunk, one touches the ears, and one touches the tail. All three come away with very different ideas of what an elephant is. So you could say that I study how different people experience the 'elephant' of religion in their daily lives. I’m doing so primarily through in-person moderated sorts on an iPad, which I’ll describe below.

How I generated the words on the cards

The first step in the process was to generate lists of relevant terms for my subjects to sort. Unlike in UX testing, where cards for sorting might come from an existing website, in my world these concepts first have to be mined from the group of people being studied. So the first thing I did was have members of both atheist and evangelical groups complete a free listing task. In a free listing task, participants simply list as many words as they can that meet the criteria given. Sets of both atheist and evangelical respondents were given the instructions: “What words best describe 'religion'? Please list as many as you can.” They were then also asked to list words that describe 'atheism', 'spirituality', and 'Christianity'.

I took the lists generated and standardized them by combining synonyms. For example, some of my atheists used words like 'ancient', 'antiquated', and 'archaic' to describe religion, so I combined all of these words into the one that was mentioned most: 'antiquated'. By doing this, I created a list of the most common words each group used to describe each category. Doing this also gave my research another useful dimension, ideal for exploring alongside my card sorting results. Free lists can themselves be analyzed using statistical techniques like multi-dimensional scaling, so I used this technique for a preliminary analysis of the words evangelicals used to describe 'atheism'.
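For readers who want to try the synonym-combining step on their own free lists, here is a minimal Python sketch of the idea. It is an illustration only, not the procedure or data from my study. The word lists are invented, the synonym groups are assumed to be chosen by hand, and the choice to count each term once per respondent is an assumption of this sketch.

```python
from collections import Counter

# Invented example data: one free list per respondent.
free_lists = [
    ["antiquated", "ancient", "community"],
    ["archaic", "antiquated", "tradition"],
    ["antiquated", "community"],
]

# Hypothetical synonym groups, decided by the researcher by hand.
synonym_groups = [{"ancient", "antiquated", "archaic"}]


def standardize(free_lists, synonym_groups):
    """Replace every synonym with the most-mentioned word in its group."""
    raw_counts = Counter(word for lst in free_lists for word in lst)
    canonical = {}
    for group in synonym_groups:
        # Most-mentioned word wins; ties are broken alphabetically.
        label = min(group, key=lambda w: (-raw_counts[w], w))
        for word in group:
            canonical[word] = label
    # Assumption: a term counts at most once per respondent,
    # so duplicates created by merging synonyms are dropped.
    return [sorted({canonical.get(w, w) for w in lst}) for lst in free_lists]


def term_frequencies(standardized_lists):
    """Count how many respondents mentioned each standardized term."""
    return Counter(w for lst in standardized_lists for w in lst)


standardized = standardize(free_lists, synonym_groups)
frequencies = term_frequencies(standardized)
print(frequencies.most_common())
```

The resulting counts give the "most common words" list for a group. A term-by-term dissimilarity matrix built from the same standardized lists would be the usual input to a multi-dimensional scaling routine.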

Now that I’m armed with these lists of words that atheists and evangelicals used to describe religion, atheism, etc., I’m about to embark on phase two of the project: the card sort.

Why using card sorting software is a no-brainer for my research

I’ll be conducting my card sorts in person, for various reasons. I have relatively easy access to the specific population that I’m interested in, and for the kind of academic research I’m conducting, in-person activities are preferred. In theory, I could just print the words on some index cards and conduct a manual card sort, but I quickly realized that a software solution would be far preferable, for a bunch of reasons.

First of all, it's important for me to conduct interviews in coffee shops and restaurants, and an iPad on the table is, to put it mildly, more practical than a table covered in cards (no space for the teapot, after all).

Second, using software eliminates the need for manual data entry on my part. Not only is manual data entry a time-consuming process, but it also introduces the possibility of data entry errors that may compromise my research results.

Third, while the bulk of the card sorts are going to be done in person, having an online version will enable me to scale the project up after the initial in-person sorts are complete. The atheist community, in particular, has a significant online presence, making a web solution ideal for additional data collection.

Fourth, OptimalSort gives the option to redirect respondents to any webpage after they complete a sort, which allows multiple card sorts to be daisy-chained together. It also enables card sorts to be easily combined with complex survey instruments from other providers (e.g. Qualtrics or SurveyMonkey), so card sorting data can be gathered in conjunction with other methodologies.

Finally, and just as important, doing card sorts on a tablet is more fun for participants. After all, who doesn’t like to play with an iPad? If respondents enjoy the unique process of the experiment, this is likely to actually improve the quality of the data, and respondents are more likely to reflect positively on the experience, making recruitment easier. And a fun experience also makes it more likely that respondents will complete the exercise.

What my in-person, on-tablet card sorting research will look like

Respondents will be handed an iPad Air with 4G data capability. While the venues where the card sorts will take place usually have public Wi-Fi networks available, these networks are not always reliable, so the cellular data capabilities are needed as a back-up (and my pre-testing has shown that OptimalSort works on cellular networks too).

The iPad’s screen orientation will be locked to landscape and multi-touch functions will be disabled to prevent respondents from accidentally leaving the testing environment. In addition, respondents will have the option of using a rubber-tipped stylus for ease of sorting the cards. While I personally prefer to use a microfiber-tipped stylus in other applications, pre-testing revealed that an old-fashioned rubber-tipped stylus was easier for sorting activities.

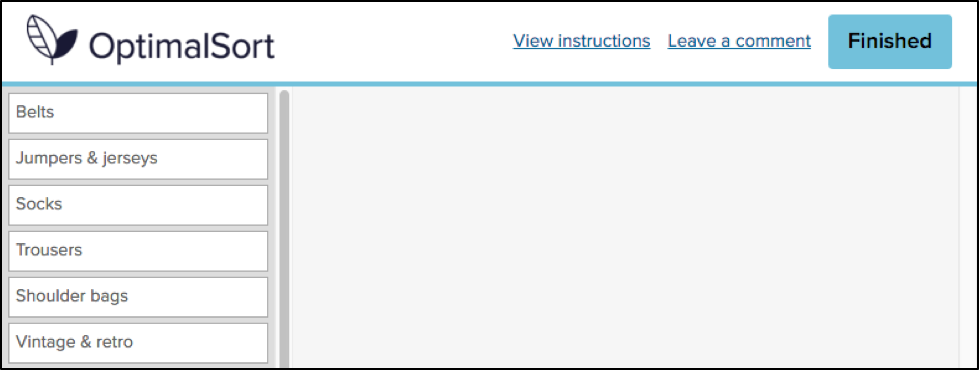

When the respondent receives the iPad, the first page of the card sort, with general instructions, will already be open on the tablet in the third-party browser Perfect Web. A third-party browser is necessary because it is best to run OptimalSort locked in a full-screen mode, both for aesthetic reasons and to keep the screen simple and uncluttered for respondents. Perfect Web is currently the best choice in the ever-shifting app landscape.

I'll give respondents their instructions and then go to another table to give them privacy (because who wants the creepy feeling of some guy hanging over you as you do stuff?). Altogether, respondents will complete two open card sorts and a few survey-style questions, all chained together by redirect URLs. First, they'll sort 30 cards into groups based on how they perceive 'religion', and name the categories they create. Then, they'll complete a similar card sort, this time based on how they perceive 'atheism'.

Both atheists and evangelicals will receive a mixture of some of the top words that both groups generated in the earlier free listing tasks. To finish, they'll answer a few questions that will provide further data on how they think about 'religion'. After I’ve conducted these card sorts with both of my target populations, I’ll analyze the resulting data on its own and also in conjunction with qualitative data I’ve already collected via ethnographic research and in-depth interviews. I can't wait, actually. In a few months I’ll report back and let you know what I’ve found.
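In the meantime, here is a rough Python sketch of one common first step with open card sort data: counting how often each pair of cards ends up in the same group. This is not my analysis plan or the export format of any particular tool. The cards, category names, and data structure below are all invented for illustration.

```python
from collections import Counter
from itertools import combinations

# Invented example data: each respondent's open sort maps the category
# names they came up with to the cards they placed in that category.
sorts = [
    {"Beliefs": ["faith", "doctrine"], "People": ["community", "family"]},
    {"Good things": ["faith", "community", "family"], "Rules": ["doctrine"]},
]


def co_occurrence(sorts):
    """Count, for each pair of cards, how many respondents grouped them together."""
    counts = Counter()
    for sort in sorts:
        for cards in sort.values():
            # Sort the card names so each pair has one canonical order.
            for a, b in combinations(sorted(set(cards)), 2):
                counts[(a, b)] += 1
    return counts


def pair_shares(counts, n_respondents):
    """Convert raw pair counts into the share of respondents who paired the cards."""
    return {pair: n / n_respondents for pair, n in counts.items()}


pair_counts = co_occurrence(sorts)
shares = pair_shares(pair_counts, len(sorts))
for pair, share in sorted(shares.items()):
    print(pair, share)
```

Computing these shares separately for each group of respondents would make it possible to compare, pair by pair, which cards one group tends to put together and the other tends to keep apart.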