The term ‘usability’ captures how usable, useful, enjoyable, and intuitive users perceive a website or app to be. By its very nature, usability is somewhat subjective. But what we’re really looking for when we talk about usability is how well a website can be used to achieve a specific task or goal. Using this definition, we can analyze usability metrics (standard units of measurement) to understand how well a user experience design is performing.

Usability metrics provide helpful insights before and after any digital product is launched. They help us form a deeper understanding of how we can design with the user front of mind. This user-centered design approach is considered best practice in building effective information architecture and user experiences that help websites, apps, and software meet and exceed users' needs.

In this article, we’ll highlight key usability metrics, how to measure and understand them, and how you can apply them to improve user experience.

Understanding Usability Metrics

Usability metrics aim to capture three core elements of usability: effectiveness, efficiency, and satisfaction. A variety of research techniques offer designers an avenue for quantifying usability. Quantifying usability is key because we want to measure and understand it objectively, rather than making assumptions.

Types of Usability Metrics

There are a few key metrics that we can measure directly if we’re looking to quantify effectiveness, efficiency, and satisfaction. Here are four common examples:

- Success rate: Also known as ‘completion rate’, success rate is the percentage of users who were able to successfully complete the tasks.

- Time-based efficiency: Also known as ‘time on task’, time-based efficiency measures how much time a user needs to complete a certain task.

- Number of errors: Exactly what it sounds like! It measures the average number of errors each user makes while performing a given task.

- Post-task satisfaction: Measures a user's general impression or satisfaction after completing (or not completing) a given task.
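As a rough illustration, the four metrics above can be computed from a simple table of test observations. The sketch below is a minimal example under stated assumptions: the `sessions` data is made up, the field names and the `summarize` function are hypothetical, time on task is averaged over successful attempts only, and satisfaction is assumed to be a 1 to 7 rating collected after the task. Your own study may define these differently.

```python
from statistics import mean

# Hypothetical observations for one task: one record per participant.
# "satisfaction" is assumed to be a 1-7 post-task rating.
sessions = [
    {"completed": True, "seconds": 42.0, "errors": 0, "satisfaction": 6},
    {"completed": True, "seconds": 65.0, "errors": 2, "satisfaction": 5},
    {"completed": False, "seconds": 120.0, "errors": 4, "satisfaction": 2},
    {"completed": True, "seconds": 58.0, "errors": 1, "satisfaction": 6},
]


def summarize(sessions):
    """Summarize one task's observations into the four metrics."""
    if not sessions:
        raise ValueError("at least one session is required")
    completed = [s for s in sessions if s["completed"]]
    return {
        # Percentage of participants who completed the task.
        "success_rate": len(completed) / len(sessions) * 100,
        # Average time for successful attempts only (an assumption of this sketch).
        "mean_time_on_task": mean(s["seconds"] for s in completed) if completed else None,
        # Average number of errors per participant, successful or not.
        "mean_errors": mean(s["errors"] for s in sessions),
        # Average post-task rating across all participants.
        "mean_satisfaction": mean(s["satisfaction"] for s in sessions),
    }


summary = summarize(sessions)
print(summary)
```

Averaging time on task over successful attempts only is one common choice; including failed attempts would make the figure harder to interpret, since those participants never reached the goal.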

How to Collect Usability Metrics

Usability metrics are outputs from research techniques deployed when conducting usability testing. Usability testing in web design, for example, involves assessing how a user interacts with the website by observing (and listening to) users completing defined tasks, such as purchasing a product or signing up for newsletters.

Conducting usability testing and collecting usability metrics usually involves:

- Defining a set of tasks that you want to test

- Recruiting test participants

- Observing participants (remotely or in-person)

- Recording detailed observations

- Following up with a satisfaction survey or questionnaire

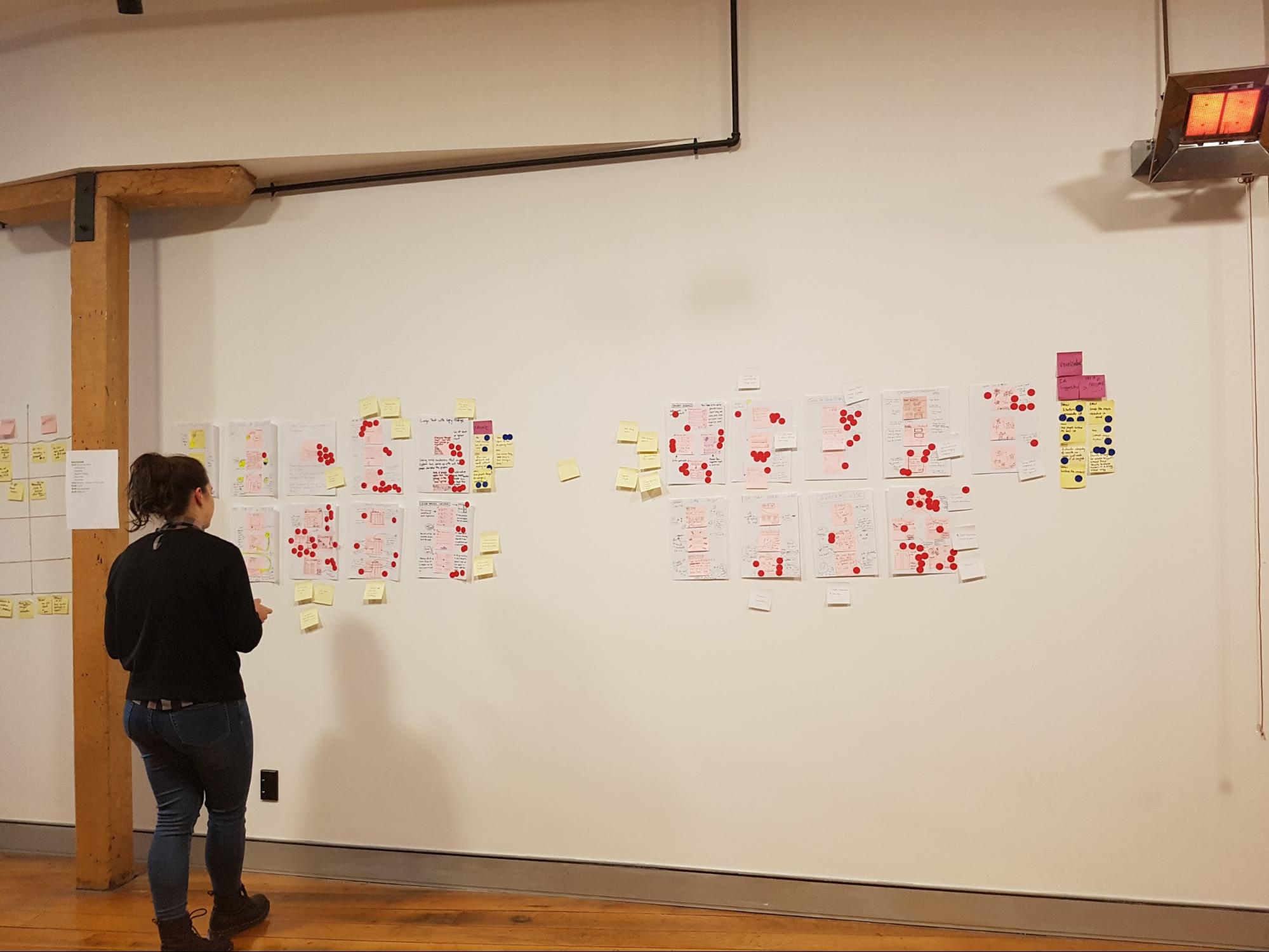

Tools such as Reframer are helpful in conducting usability tests remotely, and they enable live collaboration between multiple team members. They are extremely handy when trying to record and organize those insightful observations! Using paper prototypes is an inexpensive way to test usability early in the design process.

The Importance of Usability Metrics in User-Centered Design

User-centered design challenges designers to put user needs first. This means in order to deploy user-centered design, you need to understand your user. This is where usability testing and metrics add value to website and app performance; they provide direct, objective insight into user behavior, needs, and frustrations. If your user isn’t getting what they want or expect, they’ll simply leave and look elsewhere.

Usability metrics identify which parts of your design aren’t hitting the mark. Knowing where users have trouble completing certain actions, or where they regularly make errors, is vital when implementing user-centered design. In short, user-centered design relies on data-driven user insight.

But why harp on about usability metrics and user-centered design? Because at the heart of most successful businesses is a well-solved user problem. Take Spotify, for example, which solved the problem of dodgy, unreliable pirated digital files. People liked access to free digital music, but they had to battle viruses and fake files to get it. With Spotify, for a small monthly fee, or the cost of listening to a few ads, users have the best of both worlds. The same principle applies to user experience - identify recurring problems, then solve them.

Best Practices for Using Usability Metrics

Usability metrics should be analyzed by design teams of every size. However, there are some things to bear in mind when using usability metrics to inform design decisions:

- Defining success: Usability metrics are only valuable if they are measured against clearly defined benchmarks. Many tasks and processes are unique to each business, so use appropriate comparisons and targets, usually in the form of an ‘optimized’ user (a user with high efficiency).

- Real user metrics: Be sure to test with participants who represent your final user base. For example, there’s little point in testing with your own team, who will likely be intimately aware of your business structure, terminology, and internal workflows.

- Test early: Usability testing and the resulting usability metrics have the most impact early in the design process. This usually means testing an early prototype or even a paper prototype. Early testing helps to avoid significant, unforeseen problems in your information architecture that could be difficult to undo later.

- Regular testing: Usability metrics can change over time as user behavior and familiarity with digital products evolve. You should also test and analyze the usability of new feature releases on your website or app.
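To make the ‘defining success’ point concrete, one simple way to compare participants against an ‘optimized’ user is to express the benchmark time as a percentage of each observed time. This is only a sketch under assumptions: the `relative_efficiency` function, the 30-second benchmark, and the observed times are all hypothetical, and your team may prefer a different comparison.

```python
def relative_efficiency(benchmark_seconds, observed_seconds):
    """Return the benchmark time as a percentage of the observed time.

    100 means the participant matched the optimized user;
    lower values mean the participant needed more time.
    """
    if benchmark_seconds <= 0 or observed_seconds <= 0:
        raise ValueError("times must be positive")
    return benchmark_seconds / observed_seconds * 100


# Hypothetical benchmark: time an optimized (expert) user needs for the task.
benchmark = 30.0

# Hypothetical observed times for participants who completed the task.
observed = [42.0, 65.0, 58.0]

for seconds in observed:
    score = relative_efficiency(benchmark, seconds)
    print(f"{seconds:.0f}s -> {score:.1f}% of benchmark efficiency")
```

Re-running the same comparison after each design change gives a consistent yardstick for whether an update actually helped.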

Remember, data analysis is only as good as the data itself. Give yourself the best chance of designing exceptional user experiences by collecting, researching, and analyzing meaningful and accurate usability metrics.

Conclusion

Usability metrics are a guiding light when it comes to user experience. As the old saying goes, “you can’t manage what you can’t measure”. By including usability metrics in your design process, you invite direct user feedback into your product. This is ideal because we want to leave any assumptions or guesswork about user experience at the door.

User-centered design inherently relies on constant user research. Usability metrics such as success rate, time-based efficiency, number of errors, and post-task satisfaction will highlight potential shortcomings in your design. In turn, they identify where improvements can be made, and they lay down a benchmark for checking whether any resulting updates addressed the issues.

Ready to start collecting and analyzing usability metrics? Check out our guide to planning and running effective usability tests to get a head start!